We start with the one-factor case. We will define the concept of factor elsewhere, but for now, we simply view this type of analysis as an extension of the t-tests that are described in Two Sample t-Test with Equal Variances and Two Sample t-Test with Unequal Variances. We begin with an example which is an extension of Example 1 of Two Sample t-Test with Equal Variances.

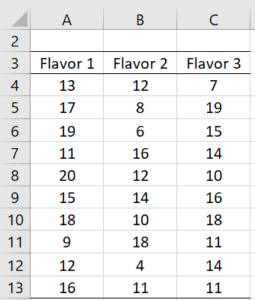

Example 1: A marketing research firm tests the effectiveness of three new flavorings for a leading beverage using a sample of 30 people, divided randomly into three groups of 10 people each. Group 1 tastes flavor 1, group 2 tastes flavor 2 and group 3 tastes flavor 3. Each person is then given a questionnaire that evaluates how enjoyable the beverage was. The scores are as in Figure 1. Determine whether there is a perceived significant difference between the three flavorings.

Figure 1 – Data for Example 1

Our null hypothesis is that any difference between the three flavors is due to chance.

H0: μ1 = μ2 = μ3

We interrupt the analysis of this example to give some background, after which we will resume the analysis.

Definition 1: Suppose we have k samples, which we will call groups (or treatments); these are the columns in our analysis (corresponding to the 3 flavors in the above example). We will use the index j for these. Each group consists of a sample of size nj. The sample elements are the rows in the analysis. We will use the index i for these.

Suppose the jth group sample is

$$\{x_{1j}, x_{2j}, \ldots, x_{n_j j}\}$$

and so the total sample consists of all the elements

$$\{x_{ij} : 1 \le i \le n_j,\ 1 \le j \le k\}$$

We will use the abbreviation x̄j for the mean of the jth group sample (called the group mean) and x̄ for the mean of the total sample (called the total or grand mean).

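Let the sum of squares for the jth group be

$$SS_j = \sum_{i=1}^{n_j} (x_{ij} - \bar{x}_j)^2$$

We now define the following terms:

$$SS_T = \sum_{j=1}^{k} \sum_{i=1}^{n_j} (x_{ij} - \bar{x})^2$$

$$SS_W = \sum_{j=1}^{k} SS_j = \sum_{j=1}^{k} \sum_{i=1}^{n_j} (x_{ij} - \bar{x}_j)^2$$

$$SS_B = \sum_{j=1}^{k} n_j (\bar{x}_j - \bar{x})^2$$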

SST is the sum of squares for the total sample, i.e. the sum of the squared deviations from the grand mean. SSW is the sum of squares within the groups, i.e. the sum of the squared deviations from the group means, taken across all groups. SSB is the sum of squares between the group sample means, i.e. the weighted sum of the squared deviations of the group means from the grand mean, where each group is weighted by its size.

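We also define the following degrees of freedom, where n = n1 + ⋯ + nk is the size of the total sample:

$$df_T = n - 1 \qquad df_B = k - 1 \qquad df_W = n - k = \sum_{j=1}^{k} (n_j - 1)$$

Finally, we define the mean square as MS = SS/df, i.e.

$$MS_T = \frac{SS_T}{df_T} \qquad MS_B = \frac{SS_B}{df_B} \qquad MS_W = \frac{SS_W}{df_W}$$

Summarizing:

| Source | SS | df | MS |
| --- | --- | --- | --- |
| Total (T) | Σj Σi (xij – x̄)² | n – 1 | SST / dfT |
| Between (B) | Σj nj (x̄j – x̄)² | k – 1 | SSB / dfB |
| Within (W) | Σj Σi (xij – x̄j)² | n – k | SSW / dfW |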

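Observation: Clearly MST is the variance for the total sample. MSW is the weighted average of the group sample variances (using the group df as the weights). MSB is the variance for the “between sample”, i.e. it measures the spread of the group means x̄1, …, x̄k around the grand mean, with each group weighted by its size nj. When all the groups have the same size m, MSB is m times the variance of {x̄1, …, x̄k}.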
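The quantities in Definition 1 can be sketched in a few lines of Python. This is only an illustration: the function name `anova_table` and the scores below are hypothetical (they are not the data of Figure 1), and the checks at the end mirror the statements in the Observation above.

```python
from statistics import mean, variance


def anova_table(groups):
    """Return (SS, df, MS) for the total (T), between (B) and within (W) sources."""
    all_values = [x for g in groups for x in g]
    n, k = len(all_values), len(groups)
    grand_mean = mean(all_values)
    group_means = [mean(g) for g in groups]

    ss_t = sum((x - grand_mean) ** 2 for x in all_values)
    ss_w = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    ss_b = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))

    df_t, df_b, df_w = n - 1, k - 1, n - k
    return {
        "T": (ss_t, df_t, ss_t / df_t),
        "B": (ss_b, df_b, ss_b / df_b),
        "W": (ss_w, df_w, ss_w / df_w),
    }


# Hypothetical scores for three groups of unequal size (illustration only).
groups = [[13, 17, 19, 11, 20], [12, 8, 6, 16], [7, 19, 15, 14, 10, 16]]
table = anova_table(groups)
for source, (ss, df, ms) in table.items():
    print(f"{source}: SS={ss:.3f}  df={df}  MS={ms:.3f}")

# MST is the sample variance of the total sample.
mst_check = variance([x for g in groups for x in g])
# MSW is the df-weighted average of the group sample variances.
msw_check = sum((len(g) - 1) * variance(g) for g in groups) / sum(len(g) - 1 for g in groups)
```

In this sketch `table["T"][0]` equals `table["B"][0] + table["W"][0]` up to floating-point error, which is the identity SST = SSB + SSW used later to obtain SSB by subtraction.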

Property 1: If a sample is made as described in Definition 1, with the xij independently and normally distributed and with all σj² equal to a common value σ², then

$$\frac{SS_W}{\sigma^2} \sim \chi^2(df_W)$$

Definition 2: Using the terminology from Definition 1, we define the structural model as follows. First, we express each group mean in terms of the total mean: μj = μ + αj, where αj denotes the effect of the jth group (i.e. the departure of the jth group mean from the total mean). We have a similar estimate for the sample: x̄j = x̄ + aj.

The null hypothesis is now equivalent to

H0: αj = 0 for all j

Similarly, we can represent each element in the sample as xij = μ + αj + εij where εij denotes the error for the ith element in the jth group. As before we have the sample version xij = x̄ + aj + eij where eij is the counterpart to εij in the sample.

Also εij = xij – (μ + αj) = xij – μj and similarly, eij = xij – x̄j.
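The sample version of the structural model can be checked numerically. The following is a minimal sketch with hypothetical data (three groups of four values, not taken from any figure); the variable names are illustrative only.

```python
from statistics import mean

# Hypothetical data: k = 3 groups, each of size m = 4 (illustration only).
groups = [[4.0, 6.0, 5.0, 9.0], [8.0, 7.0, 10.0, 11.0], [3.0, 5.0, 4.0, 4.0]]

grand_mean = mean(x for g in groups for x in g)       # x-bar
group_means = [mean(g) for g in groups]               # x-bar_j
effects = [gm - grand_mean for gm in group_means]     # a_j = x-bar_j - x-bar
errors = [[x - gm for x in g]                         # e_ij = x_ij - x-bar_j
          for g, gm in zip(groups, group_means)]

# Each observation is recovered as x_ij = x-bar + a_j + e_ij.
rebuilt = [[grand_mean + a + e for e in errs] for a, errs in zip(effects, errors)]

# Within every group the sample errors sum to zero.
error_sums = [sum(errs) for errs in errors]
print(effects, error_sums)
```

Because the groups in this sketch are equal in size, the effects `effects` also sum to zero and the mean of `group_means` coincides with `grand_mean`; with unequal sizes only the size-weighted versions of these statements hold.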

If all the groups are equal in size, say nj = m for all j, then

$$\bar{x} = \frac{1}{k} \sum_{j=1}^{k} \bar{x}_j$$

i.e. the mean of the group means is the total mean. Also

$$\sum_{j=1}^{k} a_j = 0$$

Observation: Proofs of Properties 1, 2 and 3 are provided separately.

Observation: MSB is a measure of the variability of the group means around the total mean. MSW is a measure of the variability of each group around its mean, and, by Property 3, can be considered a measure of the total variability due to error. For this reason, we will sometimes replace MSW, SSW and dfW by MSE, SSE and dfE.

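In fact,

$$E[MS_W] = \sigma_\varepsilon^2$$

$$E[MS_B] = \sigma_\varepsilon^2 + \frac{\sum_{j=1}^{k} n_j \alpha_j^2}{k - 1}$$

If the null hypothesis is true, then αj = 0 for all j, and so

$$E[MS_B] = \sigma_\varepsilon^2 = E[MS_W]$$

While if the alternative hypothesis is true, then αj ≠ 0 for at least one j, and so

$$E[MS_B] > \sigma_\varepsilon^2 = E[MS_W]$$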

If the null hypothesis is true then MSW and MSB are both measures of the same error and so we should expect F = MSB / MSW to be around 1. If the null hypothesis is false we expect that F > 1 since MSB will estimate the same quantity as MSW plus group effects.
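A small simulation can make this behaviour of F concrete. The sketch below is illustrative only: the helper names, the population means, the common standard deviation of 4, the group size of 10 and the random seed are all assumptions chosen for the demonstration, not values from the examples on this page.

```python
import random


def f_statistic(groups):
    """F = MSB / MSW for a list of groups (lists of numbers)."""
    n = sum(len(g) for g in groups)
    k = len(groups)
    grand = sum(sum(g) for g in groups) / n
    means = [sum(g) / len(g) for g in groups]
    ss_b = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, means))
    ss_w = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    return (ss_b / (k - 1)) / (ss_w / (n - k))


def average_f(population_means, sigma=4.0, size=10, runs=2000, seed=1):
    """Average F over repeated normal samples with the given population means."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(runs):
        groups = [[rng.gauss(mu, sigma) for _ in range(size)]
                  for mu in population_means]
        total += f_statistic(groups)
    return total / runs


f_null = average_f([20.0, 20.0, 20.0])   # all group effects are zero
f_alt = average_f([17.0, 20.0, 23.0])    # non-zero group effects
print(f"average F under H0: {f_null:.2f}, with group effects: {f_alt:.2f}")
```

Under these assumptions the average F with equal population means stays close to 1, while the average F with unequal means is several times larger, reflecting the extra group-effect term in E[MSB].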

In conclusion, if the null hypothesis is true, and so the population means μj for the k groups are equal, then any variability of the group means around the total mean is due to chance and can also be considered an error.

Thus the null hypothesis becomes equivalent to H0: σB = σW (or in the one-tail test, H0: σB ≤ σW). We can therefore use the F-test (see Two Sample Hypothesis Testing of Variances) to determine whether or not to reject the null hypothesis.

Theorem 1: If a sample is made as described in Definition 1, with the xij independently and normally distributed and with all μj equal and all σj² equal, then

$$F = \frac{MS_B}{MS_W} \sim F(df_B, df_W)$$

Proof: The result follows from Property 1 and Theorem 1 of F Distribution.

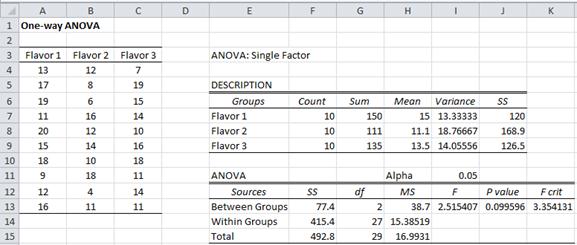

Example 1 (continued): We now resume our analysis of Example 1 by calculating F and testing it as in Theorem 1.

Figure 2 – ANOVA for Example 1

Based on the null hypothesis, the three group means are equal, and as we can see from Figure 2, the group variances are roughly the same. Thus we can apply Theorem 1. To calculate F we first calculate SSB and SSW. Per Definition 1, SSW is the sum of the group SSj (located in cells J7:J9). E.g. SS1 (in cell J7) can be calculated by the formula =DEVSQ(A4:A13). SSW (in cell F14) can therefore be calculated by the formula =SUM(J7:J9).

The formula =DEVSQ(A4:C13) can be used to calculate SST (in cell F15), and then per Property 2, SSB = SST – SSW = 492.8 – 415.4 = 77.4. By Definition 1, dfT = n – 1 = 30 – 1 = 29, dfB = k – 1 = 3 – 1 = 2 and dfW = n – k = 30 – 3 = 27. Each SS value can be divided by the corresponding df value to obtain the MS values in cells H13:H15. F = MSB / MSW = 38.7/15.4 = 2.5. We now test F as we did in Two Sample Hypothesis Testing of Variances, namely:

p-value = F.DIST.RT(F, dfB, dfW) = F.DIST.RT(2.5, 2, 27) = .099596 > .05 = α (here F is used at full precision, F ≈ 2.5154; the rounded value 2.5 is shown only for display)

Fcrit = F.INV.RT(α, dfB, dfW) = F.INV.RT(.05, 2, 27) = 3.35 > 2.5 = F

Either of these shows that we can’t reject the null hypothesis that all the means are equal.

As explained above, the null hypothesis can be expressed by H0: σB ≤ σW, and so the appropriate F test is a one-tail test, which is exactly what F.DIST.RT and F.INV.RT provide.
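The arithmetic of this example can be retraced from the summary values quoted above (SST = 492.8, SSW = 415.4, n = 30, k = 3). The sketch below is not a general replacement for F.DIST.RT and F.INV.RT: it relies on the fact that when dfB = 2 the right tail of the F distribution has the closed form P(F > x) = (1 + 2x/dfW)^(–dfW/2), which is an assumption that holds only for this special case. For other values of dfB a statistics library would be needed.

```python
# Summary values quoted in the text for Example 1.
ss_t, ss_w = 492.8, 415.4
n, k, alpha = 30, 3, 0.05

ss_b = ss_t - ss_w                      # SSB = SST - SSW
df_b, df_w = k - 1, n - k               # 2 and 27
ms_b, ms_w = ss_b / df_b, ss_w / df_w
f_stat = ms_b / ms_w

# The closed forms below are valid ONLY when df_b == 2.
if df_b != 2:
    raise ValueError("this sketch only handles df_b == 2")

# Right-tail probability, the analogue of F.DIST.RT(F, 2, dfW).
p_value = (1 + 2 * f_stat / df_w) ** (-df_w / 2)
# Critical value, the analogue of F.INV.RT(alpha, 2, dfW).
f_crit = (df_w / 2) * (alpha ** (-2 / df_w) - 1)

print(f"F = {f_stat:.4f}, p-value = {p_value:.6f}, F-crit = {f_crit:.4f}")
print("reject H0" if p_value < alpha else "cannot reject H0")
```

Both decision rules agree here, as they must: the p-value exceeds α exactly when F is below the critical value.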

We can also calculate SSB as the sum of the squared deviations of the group means from the total mean, where each squared deviation is weighted by the size of its group. Since all the groups have the same size, this can be expressed as =DEVSQ(H7:H9)*F7.

SSB can also be calculated as =DEVSQ(G7:G9)/F7, using the group sums in place of the group means. This works as long as all the groups have the same size.
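The two equal-size shortcuts can be compared against SSB = SST – SSW in a short sketch. The helper `devsq` below is a hypothetical Python stand-in for Excel's DEVSQ, and the data are invented for illustration.

```python
def devsq(values):
    """Sum of squared deviations from the mean (a stand-in for Excel's DEVSQ)."""
    values = list(values)
    center = sum(values) / len(values)
    return sum((v - center) ** 2 for v in values)


# Hypothetical data with equal group sizes (illustration only).
groups = [[4.0, 6.0, 5.0, 9.0], [8.0, 7.0, 10.0, 11.0], [3.0, 5.0, 4.0, 4.0]]
m = len(groups[0])                      # common group size
means = [sum(g) / m for g in groups]    # group means
sums = [sum(g) for g in groups]         # group sums

ss_t = devsq(x for g in groups for x in g)
ss_w = sum(devsq(g) for g in groups)

ssb_by_subtraction = ss_t - ss_w        # SSB = SST - SSW
ssb_from_means = devsq(means) * m       # like =DEVSQ(means)*count
ssb_from_sums = devsq(sums) / m         # like =DEVSQ(sums)/count

print(ssb_by_subtraction, ssb_from_means, ssb_from_sums)
```

The sums-based form works because each group sum is m times the group mean, so DEVSQ of the sums is m² times DEVSQ of the means. Neither shortcut applies when the group sizes differ.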

Excel Data Analysis Tool: Excel’s Anova: Single Factor data analysis tool can also be used to perform analysis of variance. We show the output for this tool in Example 2 below.

The Real Statistics Resource Pack also contains a similar data analysis tool that also provides additional information. We show how to use this tool in Example 1 of Confidence Interval for ANOVA.

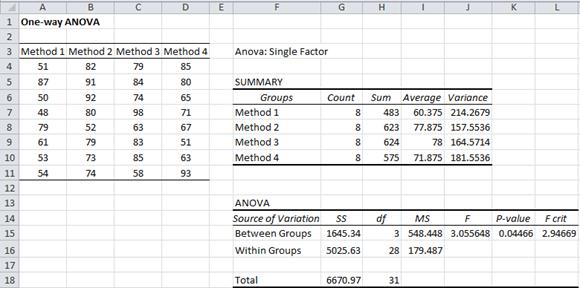

Example 2: A school district uses four different methods of teaching their students how to read and wants to find out if there is any significant difference between the reading scores achieved using the four methods. It creates a sample of 8 students for each of the four methods. The reading scores achieved by the participants in each group are as follows:

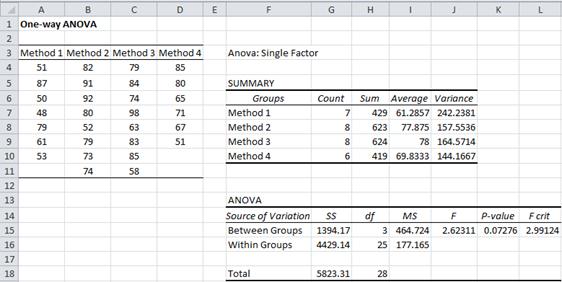

Figure 3 – Anova: Single Factor data analysis tool

This time the p-value = .04466 < .05 = α, and so we reject the null hypothesis, and conclude that there are significant differences between the methods (i.e. not all four methods have the same mean).

Note that although the variances are not the same, as we will see shortly, they are close enough to use ANOVA.

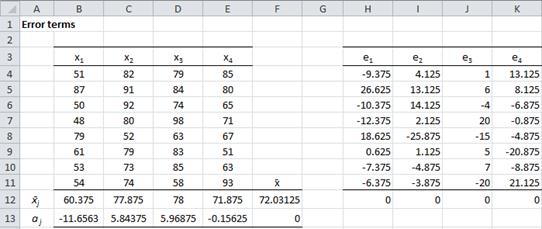

Observation: We next review some of the concepts described in Definition 2 using Example 2.

Figure 4 – Error terms for Example 2

From Figure 4, we see that

- x̄ = total mean = AVERAGE(B4:E11) = 72.03 (cell F12)

- mean of the group means = AVERAGE(B12:E12) = 72.03 = total mean

- Σj aj = 0 (cell F13)

- Σi eij = 0 for all j (cells H12 through K12)

We also observe that Var(e) = VAR(H4:K11) = 162.12, and so by Property 3,

which agrees with the value given in Figure 3.

Observation: In both ANOVA examples, all the group sizes were equal. This doesn’t have to be the case, as we see from the following example.

Example 3: Repeat the analysis for Example 2 where the last participant in group 1 and the last two participants in group 4 left the study before their reading tests were recorded.

Figure 5 – Data and analysis for Example 3

Using Excel’s data analysis tool we see that p-value = .07276 > .05, and so we cannot reject the null hypothesis; that is, we do not find a significant difference between the means of the four methods.

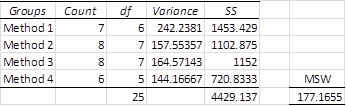

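Observation: MSW can also be calculated as a generalized version of Theorem 1 of Two Sample t-Test with Equal Variances. There we had the pooled variance

$$s^2 = \frac{(n_1 - 1)s_1^2 + (n_2 - 1)s_2^2}{(n_1 - 1) + (n_2 - 1)}$$

Generalizing this, we have

$$MS_W = \frac{\sum_{j=1}^{k} (n_j - 1)s_j^2}{\sum_{j=1}^{k} (n_j - 1)}$$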

From Figure 6, we see that we obtain a value for MSW in Example 3 of 177.1655, which is the same value that we obtained in Figure 5.

Figure 6 – Alternative calculation of MSW
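The pooled-variance view of MSW can be sketched as follows. The scores are hypothetical (only the group sizes 7, 8, 8 and 6 mimic Example 3; the values themselves are not those of Figure 5), so the result will not reproduce 177.1655.

```python
from statistics import mean, variance

# Hypothetical reading scores with group sizes 7, 8, 8 and 6 (illustration only).
groups = [
    [71, 65, 80, 77, 69, 74, 82],
    [59, 63, 70, 55, 61, 66, 58, 72],
    [85, 78, 90, 81, 88, 76, 83, 79],
    [68, 73, 60, 75, 66, 70],
]

sizes = [len(g) for g in groups]
variances = [variance(g) for g in groups]     # group sample variances s_j^2

# MSW as the df-weighted average of the group variances.
msw_pooled = sum((n_j - 1) * s2 for n_j, s2 in zip(sizes, variances)) / sum(
    n_j - 1 for n_j in sizes
)

# MSW computed directly as SSW / dfW.
ss_w = sum((x - mean(g)) ** 2 for g in groups for x in g)
msw_direct = ss_w / (sum(sizes) - len(groups))

print(f"pooled: {msw_pooled:.4f}  direct: {msw_direct:.4f}")
```

With only two groups the same expression reduces to the pooled variance of the equal-variance t-test.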

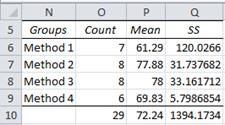

Observation: As we did in Example 1 we can calculate SSB as SSB = SST – SSW. We now show alternative ways of calculating SSB for Example 3.

Figure 7 – Alternative calculation of SSB

We first find the total mean (the value in cell P10 of Figure 7), which can be calculated either as =AVERAGE(A4:D11) from Figure 5 or =SUMPRODUCT(O6:O9,P6:P9)/O10 from Figure 7. We then calculate the square of the deviation of each group mean from the total mean. E.g. for group 1, this value (located in cell Q6) is given by =(P6-P10)^2. Finally, SSB can now be calculated as =SUMPRODUCT(O6:O9,Q6:Q9).
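The SUMPRODUCT steps above translate directly into Python. In this sketch the data are hypothetical (unequal group sizes, not the values of Figure 5), and the comments indicate which worksheet formula each line is meant to parallel.

```python
# Hypothetical data with unequal group sizes (illustration only).
groups = [
    [71, 65, 80, 77, 69, 74, 82],
    [59, 63, 70, 55, 61, 66, 58, 72],
    [85, 78, 90, 81, 88, 76, 83, 79],
    [68, 73, 60, 75, 66, 70],
]

sizes = [len(g) for g in groups]                  # group counts
means = [sum(g) / len(g) for g in groups]         # group means
n = sum(sizes)                                    # total count

# Total mean as the size-weighted average of the group means,
# the analogue of =SUMPRODUCT(counts, means)/total_count.
grand_mean = sum(n_j * m_j for n_j, m_j in zip(sizes, means)) / n

# Squared deviation of each group mean from the total mean.
sq_dev = [(m_j - grand_mean) ** 2 for m_j in means]

# SSB, the analogue of =SUMPRODUCT(counts, squared_deviations).
ss_b = sum(n_j * d for n_j, d in zip(sizes, sq_dev))

# Cross-check against SSB = SST - SSW.
all_values = [x for g in groups for x in g]
ss_t = sum((x - grand_mean) ** 2 for x in all_values)
ss_w = sum((x - m_j) ** 2 for g, m_j in zip(groups, means) for x in g)
print(f"SSB = {ss_b:.4f}, SST - SSW = {ss_t - ss_w:.4f}")
```

Note that with unequal group sizes the plain average of the group means generally differs from the total mean, which is why the weights are needed.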

Real Statistics Functions: The Real Statistics Resource Pack contains the following supplemental functions for the data in range R1:

| Sum of squares | Degrees of freedom | Mean square |
| --- | --- | --- |
| SSW(R1, b) = SSW | dfW(R1, b) = dfW | MSW(R1, b) = MSW |
| SSBet(R1, b) = SSB | dfBet(R1, b) = dfB | MSBet(R1, b) = MSB |
| SSTot(R1) = SST | dfTot(R1) = dfT | MSTot(R1) = MST |

| Test statistic | p-value |
| --- | --- |
| ANOVA(R1, b) = F = MSB / MSW | ATEST(R1, b) = p-value |

Here b is an optional argument. When b = True (default) then the columns denote the groups/treatments, while when b = False, the rows denote the groups/treatments. This argument is not relevant for SSTot, dfTot and MSTot (since the result is the same in either case).

These functions ignore any empty or non-numeric cells.

For example, for the data in Example 3, MSW(A4:D11) = 177.165 and ATEST(A4:D11) = 0.07276 (referring to Figure 5).

Real Statistics Data Analysis Tool: As mentioned above, the Real Statistics Resource Pack also contains the Single Factor Anova and Follow-up Tests data analysis tool, which is illustrated in Examples 1 and 2 of Confidence Interval for ANOVA.


Hi Charles, I have used the ANOVA test to compare the expected values of three datasets. The data sets contain data about the lifespans of three brands of frying oils. I have used Excel to perform the test and I have rejected the null hypothesis at α=0.05. Another task is to explain what portion of the total variance can be explained by the different properties of the individual oil brands. Do I understand the output of the Data Analysis plug-in correctly, i.e. that I should divide the value in the SS column of the Between Groups row by the value in the Total row? The results provided by the Data Analysis plug-in are in the image https://i.stack.imgur.com/KZB41.png To be more specific, the result should be 48/126=0.381, i.e. we can explain 38 % of the variance by the properties of the individual oil brands?

Thank you, Jiří

Jiří,

It looks like you are doing everything right. I don’t recall having the need to calculate the percentage of variance, but ANOVA is useful to determine whether there is a significant difference between groups. Often after a significant result, you perform a post-hoc test, such as Tukey’s HSD, to more precisely pinpoint the group differences.

Charles

Hi Charles,

I am testing the food quality ordered from 4 different suppliers. I am collecting 10 different food items from each one of them, and tracking the quality of them. Now I have 10 test data for each of the supplier. I picked up the ANOVA – Single Factor.

I want to know whether my choice of analysis is correct or not.

Secondly, I got a p-value which is less than 0.05, which indicates I can reject the null hypothesis, deciding that there is a significant difference in quality between the 4 suppliers. Now how do I decide which supplier is better?

Hi Hariharan,

1. If you have selected the 10 food items at random from each supplier and the usual assumptions for ANOVA are met (especially the homogeneity of variances assumption), then ANOVA looks like a good choice.

2. The group with the highest sample mean is probably best, but that doesn’t mean that there is a significant difference between that group and the others. After a significant result for ANOVA, you can perform one of the post-hoc tests to pinpoint which groups are significantly different from the others. Usually, you should perform Tukey’s HSD test.

Charles

Hi Charles, Comparing examples 2 to 3, I noticed a difference in the p value even though the teachers used the same formulae. Does that mean that when calculating for p-value, the COUNTS should be the same for the variables to get best results?

Thanks

It is not just that the counts are different, but some of the data are also different.

You can use ANOVA even when the counts are different.

Charles

When I run a One Factor Anova I get an error for my count, which then gives me errors on basically everything. If the count is wrong, my p-value is wrong, and when I try to fix the error by “including adjacent cells” the count is fixed, but then the p-value becomes an unfixable error. Does anyone know of a solution of this problem?

Alex,

If you email me an Excel file with your data and test results, including the errors, I will try to figure out why you are getting these errors.

Charles

Just sent the email, thank you!

Hi Charles, can one way anova in Microsoft Excel be used in conducting analysis for 4 soil samples at four different depths?

It depends on what hypothesis or hypotheses you want to test.

Charles

Hi Charles,

Could you elaborate a bit more on why E(MS_B) = \sigma^2_{\epsilon} + \sum_j n_j \alpha_j^2 ?

Yupei,

It is a reasonable question, but I didn’t want to get into all the details about this on the website. The following webpage explains things a little further but does not contain the proof.

http://davidmlane.com/hyperstat/B97572.html#:~:text=1%20of%202)-,Expected%20Mean%20Squares%20for%20a%20One%2Dfactor%20between,subject%20ANOVA%20(1%20of%202)&text=Since%20they%20are%20both%20unbiased,the%20null%20hypothesis%20is%20false.

Perhaps the following type of logic will help

https://math.stackexchange.com/questions/3805809/calculating-expected-value-of-mse-for-anova-variance-components

Charles

Hello, i want to know if is possible to use a one way ANOVA to calculate the quantitative analysis results I have, which are in triplicates.

I would need more details about the situation in order to be able to answer your question.

Charles

Hello! I am analyzing the effect of alnus accuminata on soil chemical properties. Can I use single factor ANOVA?

Jo,

Perhaps, but it depends on the details.

Charles

I’m working on a project that compares growing one particular variety of lettuce in soil and in hydroponics. I have 5 plants in both methods of planting. I want to know if using an equal variance t-test will be ok to test for any significance in yield from both methods of planting.

Hello Benny,

Assuming that you have 5 plants using soil and a different 5 plants using hydroponics, you can use an equal variance t test provided:

1. Each sample is approximately normally distributed (e.g. you can test this by using the Shapiro-Wilk test)

2. The variances of the two samples are reasonably similar (if you have any doubts then use the non-equal variance version of the t test).

With such small samples, the power of the t test may not be very high (although this depends on how big a difference is expected between the means of the two samples).

Charles

Thank you very much. You just confirmed what I was thinking of.

Dear Charles,

I’m absolutely new to statistical analyses for more than two groups. Now I have results of a measurement separated by a classification. So some get rating A, some B, some C and some D (A is the best and D the worst, but the levels in between are not the same size). In my case there is not one measurement for class C. So we have three groups: A, B and D. Am I right to use the One-way ANOVA or the Kruskal-Wallis test here?

Sorry, Daniel, but I don’t understand the scenario that you are describing.

Charles

Dear Charles,

thanks for your answer. I’ll try to describe that I have a classification of measured patients in four ratings. Is ANOVA a suitable test to find out, if these people differ regarding their age, duration of illness, …?

Daniel,

It doesn’t seem like a fit for ANOVA. The dependent variable values are not normally distributed (with only 4 possible values).

Charles

Dear Charles,

so I’ll use comparison between the groups pairwise: A vs. B, A vs. D and B vs. D (<- no classifying C in my data). Therefore, statistics as usual for me.

Thanks for your feedback 🙂

Hi, I am making some one way anova tests with Excel. Sometimes I get a F which is greater than F crit, but at the same time the p value is greater than the 0.05 level I set. What does it mean? Do I reject the H0?

Thanks

Hello Eli,

This should not happen. If p-value > alpha then F < F-crit.

Charles

I want to see how two factors affect the third factor. How should I proceed?

See ANOVA with More than Two Factors.

Charles

Hi Charles, if I want to see if 3 different factors have any relation to each other based on a group of 138 patients (admission duration, number of medications, and number of medication errors) – is a one-way ANOVA appropriate?

Thank you in advance!

Hello Kelly,

This depends on whether you want to investigate the interactions between the three factors. If so, then you should use Three Factor ANOVA. See

https://real-statistics.com/two-way-anova/anova-more-than-two-factors/

Charles