Property 1:

Proof: The first and last equations are trivial. We now show the second. By the first equation:

Squaring both sides of the equation and then summing over all values of i, the desired result follows provided the sum of the cross-product terms is 0.

This follows by substituting bxi + a for ŷi and simplifying.
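A quick numerical check can make this argument concrete. The sketch below assumes that the second equation is the usual decomposition SST = SSReg + SSRes for the regression line ŷ = bx + a. The data values and the helper name `fit_line` are made up for illustration and do not come from the article.

```python
# Numerical check of the sum-of-squares decomposition on made-up data.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]   # illustrative values only
ys = [2.1, 2.9, 4.2, 4.8, 6.3, 6.9]


def fit_line(xs, ys):
    """Return the least-squares slope b and intercept a of y = b*x + a."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    b = sxy / sxx
    a = y_bar - b * x_bar
    return b, a


b, a = fit_line(xs, ys)
y_bar = sum(ys) / len(ys)
y_hat = [b * x + a for x in xs]

# Total, regression, and residual sums of squares (assumed definitions).
ss_t = sum((y - y_bar) ** 2 for y in ys)
ss_reg = sum((yh - y_bar) ** 2 for yh in y_hat)
ss_res = sum((y - yh) ** 2 for y, yh in zip(ys, y_hat))

# Cross-product term that the proof requires to be zero.
cross = sum((yh - y_bar) * (y - yh) for y, yh in zip(ys, y_hat))

print("cross term is ~0:", abs(cross) < 1e-9)
print("SST = SSReg + SSRes:", abs(ss_t - (ss_reg + ss_res)) < 1e-9)
```

The comparisons use a small tolerance because floating-point arithmetic only reproduces the identities up to rounding error.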

Property 2:

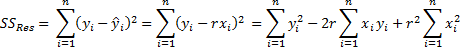

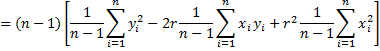

Proof: We give the proof for the case where both x and y have mean 0 and standard deviation 1. The general case follows via some tedious algebra.

In the case where x and y have mean 0 and standard deviation 1, by Theorem 1 of Method of Least Squares and Property 1 of Method of Least Squares, we know that for all i

it follows that

and so n – 1 = SST, which means that
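The standardized case can be written out as a short sketch. It rests on two assumptions that the surviving text does not confirm: that Property 2 is the identity SSRes = SST(1 − r²), and that the two cited results reduce to slope b = r and intercept a = 0 when x and y both have mean 0 and standard deviation 1.

$$
\begin{aligned}
\hat{y}_i &= r x_i \\
SS_{Res} &= \sum (y_i - r x_i)^2 = \sum y_i^2 - 2r \sum x_i y_i + r^2 \sum x_i^2 \\
&= (n-1) - 2r^2(n-1) + r^2(n-1) = (n-1)(1 - r^2) \\
SS_T &= \sum y_i^2 = n - 1 \\
SS_{Res} &= SS_T (1 - r^2)
\end{aligned}
$$

The third line uses the facts that, for standardized data, the sums of squares of x and of y both equal n − 1 and the sum of the products xi·yi equals (n − 1)r.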

Property 3:

Proof: From Property 2, solving for r², we have the following by Property 1:

Property 4:

Proof: The first assertion is trivial. The second is a consequence of Properties 1 and 2 since

Property 5:

a) The sum of the y values is equal to the sum of the ŷ values; i.e. $\sum y_i = \sum \hat{y}_i$

b) The means of the y values and the ŷ values are equal; i.e. ȳ = the mean of the ŷi

c) The sum of the error terms is 0; i.e. $\sum (y_i - \hat{y}_i) = 0$

d) The correlation coefficient of x with ŷ is sign(b); i.e. $r_{x\hat{y}} = \mathrm{sign}(b) = \mathrm{sign}(r_{xy})$

e) The correlation coefficient of y with ŷ is the absolute value of the correlation coefficient of x with y; i.e. $r_{y\hat{y}} = |r_{xy}|$

f) The coefficient of determination of y with ŷ is the same as the coefficient of determination of x with y; i.e. $r_{y\hat{y}}^2 = r_{xy}^2$

Proof:

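a) By Theorem 1 of Method of Least Squares, $\hat{y}_i = \bar{y} + b(x_i - \bar{x})$, and so

$$\sum \hat{y}_i = n\bar{y} + b \sum (x_i - \bar{x}) = n\bar{y} = \sum y_i$$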

b) That the mean of the ŷi is ȳ follows from (a), since the mean of the ŷi is $\sum \hat{y}_i / n = \sum y_i / n = \bar{y}$.

c) This property follows from (a) since

$$\sum (y_i - \hat{y}_i) = \sum y_i - \sum \hat{y}_i = 0$$

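d) First note that by Property 3 of Expectation

$$s_{\hat{y}}^2 = \mathrm{var}(bx + a) = b^2 s_x^2, \quad \text{and so} \quad s_{\hat{y}} = |b|\, s_x$$

Now by Property A of Correlation

$$r_{x\hat{y}} = \frac{\mathrm{cov}(x, bx + a)}{s_x s_{\hat{y}}} = \frac{b\, s_x^2}{s_x \cdot |b|\, s_x} = \frac{b}{|b|}$$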

Thus, r = 1 if b > 0 and r = -1 if b < 0. If b = 0, r is undefined since there is division by 0. By Property 1 of Method of Least Squares, r = sign(b) = sign(rxy).

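e) Using property (b), the correlation of y with ŷ is

$$r_{y\hat{y}} = \frac{\sum (y_i - \bar{y})(\hat{y}_i - \bar{y})}{(n-1)\, s_y s_{\hat{y}}}$$

By Theorem 1 of Method of Least Squares

$$\hat{y}_i - \bar{y} = b(x_i - \bar{x})$$

As we saw in the proof of (d),

$$s_{\hat{y}} = |b|\, s_x$$

and so putting all the pieces together, we get

$$r_{y\hat{y}} = \frac{b \sum (y_i - \bar{y})(x_i - \bar{x})}{(n-1)\, s_y\, |b|\, s_x} = \frac{b}{|b|}\, r_{xy} = \mathrm{sign}(b)\, r_{xy}$$

By Property 1 of Method of Least Squares, sign(b) = sign(rxy), and so

$$r_{y\hat{y}} = \mathrm{sign}(r_{xy})\, r_{xy} = |r_{xy}|$$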

f) This follows from (e).
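The six parts of Property 5 can also be checked numerically. The sketch below is only an illustration on made-up data with a negative slope, so that parts (d) and (e) are exercised with sign(b) = −1. The helper names `mean` and `corr` and all data values are hypothetical, and the slope and intercept are computed with the standard least-squares formulas.

```python
import math

# Made-up data with a downward trend, so the fitted slope b is negative.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [7.2, 6.1, 6.4, 4.9, 4.1, 3.0]


def mean(values):
    """Arithmetic mean of a list of numbers."""
    return sum(values) / len(values)


def corr(u, v):
    """Pearson correlation coefficient of two equal-length samples."""
    u_bar, v_bar = mean(u), mean(v)
    suv = sum((p - u_bar) * (q - v_bar) for p, q in zip(u, v))
    suu = sum((p - u_bar) ** 2 for p in u)
    svv = sum((q - v_bar) ** 2 for q in v)
    return suv / math.sqrt(suu * svv)


# Least-squares line y_hat = b*x + a.
x_bar, y_bar = mean(xs), mean(ys)
b = (sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
     / sum((x - x_bar) ** 2 for x in xs))
a = y_bar - b * x_bar
y_hat = [b * x + a for x in xs]

r_xy = corr(xs, ys)
r_y_yhat = corr(ys, y_hat)

checks = {
    "a": math.isclose(sum(ys), sum(y_hat)),                   # equal sums
    "b": math.isclose(mean(ys), mean(y_hat)),                 # equal means
    "c": abs(sum(y - yh for y, yh in zip(ys, y_hat))) < 1e-9, # residuals sum to 0
    "d": math.isclose(corr(xs, y_hat), math.copysign(1.0, b)),
    "e": math.isclose(r_y_yhat, abs(r_xy)),
    "f": math.isclose(r_y_yhat ** 2, r_xy ** 2),
}
print(checks)
```

Each entry of `checks` is a tolerance-based comparison, since the identities hold only up to floating-point rounding. Part (d) is undefined when b = 0, so the sketch deliberately uses data with a clearly nonzero slope.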

Reference

Soch, J. (2023) The book of statistical proofs. https://statproofbook.github.io/

Hi Charles,

I am working with isotope data in tree rings. I simply want to explore whether a linear decline in the isotope time series is significant. I have used a complex, non-intuitive bootstrapping procedure in the past, but I would prefer to use something more along the lines of a simple linear regression model. Whenever we do, however, we receive somewhat valid critiques about repeated measures and potential autocorrelation issues. Is there a simple method that I can use to check these concerns, or a simple model I can employ?

Hi Roberr,

Without knowing more about the specifics of the two issues that you mentioned, I am unable to provide a definitive answer for how you could use a linear regression model. The following webpage describes various techniques for dealing with autocorrelation

https://real-statistics.com/multiple-regression/autocorrelation/

Charles