Basic Concepts

The Kuder and Richardson Formula 20 test checks the internal consistency of measurements with dichotomous choices. It is equivalent to performing the split-half methodology on all combinations of questions and is applicable when each question is either right or wrong. A correct answer scores 1 and an incorrect answer scores 0. The test statistic is

$$\rho_{KR20} = \frac{k}{k-1}\left(1 - \frac{\sum_{j=1}^{k} p_j q_j}{\sigma^2}\right)$$

where

k = number of questions

$p_j$ = proportion of people in the sample who answered question j correctly

$q_j$ = proportion of people in the sample who didn’t answer question j correctly (i.e. $q_j = 1 - p_j$)

$\sigma^2$ = variance of the total scores of all the people taking the test = VAR.P(R1), where R1 = array containing the total scores of all the people taking the test.

Values typically range from 0 to 1, although negative values can occur when the items covary negatively on average. A high value indicates reliability, while too high a value (in excess of .90) indicates a homogeneous test, which is usually not desirable.

Kuder-Richardson Formula 20 is equivalent to Cronbach’s alpha for dichotomous data.
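For 0/1 data the equivalence can be checked numerically. The Python sketch below, on made-up answers, computes Cronbach’s alpha from the item variances and KR20 from p·q; the two agree because the population variance of a 0/1 item is exactly p·q. The function names are illustrative only and are not part of any library.

```python
from statistics import pvariance

def cronbach_alpha(scores):
    """Cronbach's alpha using population item variances and total variance."""
    k = len(scores[0])
    item_vars = [pvariance([row[j] for row in scores]) for j in range(k)]
    total_var = pvariance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)

def kr20(scores):
    """KR-20 using p*q for each 0/1 item."""
    n, k = len(scores), len(scores[0])
    p = [sum(row[j] for row in scores) / n for j in range(k)]
    total_var = pvariance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(pj * (1 - pj) for pj in p) / total_var)

# Hypothetical answers: one row per person, one column per question.
data = [[1, 1, 1, 0], [1, 1, 0, 0], [1, 0, 1, 1], [0, 0, 0, 0], [1, 1, 1, 1]]
print(cronbach_alpha(data), kr20(data))  # agree up to floating-point rounding
```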

Example

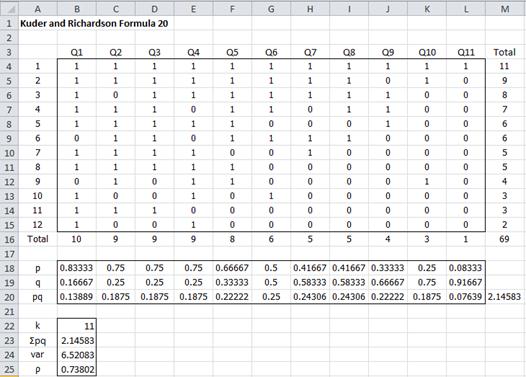

Example 1: A questionnaire with 11 questions is administered to 12 students. The results are listed in the upper portion of Figure 1. Determine the reliability of the questionnaire using Kuder and Richardson Formula 20.

Figure 1 – Kuder and Richardson Formula 20 for Example 1

The values of p in row 18 are the proportions of students who answered each question correctly, e.g. the formula in cell B18 is =B16/COUNT(B4:B15). Similarly, the values of q in row 19 are the proportions of students who answered each question incorrectly, e.g. the formula in cell B19 is =1-B18. The values of pq are simply the products of the p and q values, with their sum given in cell M20.
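The worksheet steps can be mirrored in a short Python sketch, offered as an illustration rather than as the Real Statistics implementation. The answer matrix below is hypothetical (the Figure 1 data appear only in the image), and `statistics.pvariance` plays the role of VAR.P.

```python
from statistics import pvariance

def kr20(scores):
    """KR-20 for a 0/1 answer matrix: one row per person, one column per item."""
    n = len(scores)        # number of people (rows 4-15 in Figure 1)
    k = len(scores[0])     # number of questions
    # p: proportion answering each question correctly (like row 18)
    p = [sum(row[j] for row in scores) / n for j in range(k)]
    # q = 1 - p (like row 19)
    q = [1 - pj for pj in p]
    # sum of p*q (like cell M20)
    sum_pq = sum(pj * qj for pj, qj in zip(p, q))
    # population variance of each person's total score (like VAR.P)
    var_total = pvariance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum_pq / var_total)

data = [[1, 1, 1], [1, 1, 0], [1, 0, 0], [0, 0, 0]]  # hypothetical answers
print(kr20(data))  # 0.75
```

Given the 12 × 11 matrix in B4:L15, the same function should reproduce the .738 reported below, since it applies the same formula.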

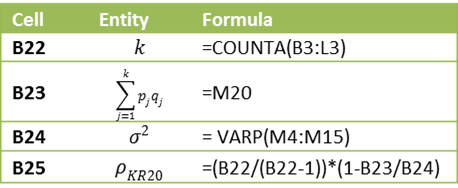

We can calculate $\rho_{KR20}$ as described in Figure 2.

Figure 2 – Key formulas for worksheet in Figure 1

The value $\rho_{KR20}$ = 0.738 shows that the test has high reliability.

Worksheet Function

Real Statistics Function: The Real Statistics Resource Pack provides the following function:

KUDER(R1) = KR20 coefficient for the data in range R1.

For Example 1, KUDER(B4:L15) = .738.

KR-21

When the questions in a test all have approximately the same difficulty (i.e. the mean score of each question is approximately equal to the mean score of all the questions), a simplified version of Kuder and Richardson Formula 20, namely Kuder and Richardson Formula 21, can be used. It is defined as follows:

$$\rho_{KR21} = \frac{k}{k-1}\left(1 - \frac{\mu\,(k-\mu)}{k\,\sigma^2}\right)$$

where μ is the population mean total score (in practice approximated by the observed mean score).

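For Example 1, μ = 69/12 = 5.75 and σ² = 6.52 (the VAR.P value used for KR20), and so

$$\rho_{KR21} = \frac{11}{10}\left(1 - \frac{5.75\,(11 - 5.75)}{11 \times 6.52}\right) \approx 0.637$$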

Note that $\rho_{KR21}$ typically underestimates the reliability of a test compared to $\rho_{KR20}$.
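KR-21 needs only the number of items, the mean total score and the variance of the total scores, so a sketch can take those three values as inputs (the function names here are illustrative). Plugging in the Example 1 values k = 11, μ = 5.75 and σ² = 6.52 (the VAR.P variance reported for this example in the comments below) gives about 0.637.

```python
from statistics import mean, pvariance

def kr21(k, mu, var_total):
    """KR-21 from the number of items k, the mean total score mu and the
    population variance of the total scores."""
    return k / (k - 1) * (1 - mu * (k - mu) / (k * var_total))

def kr21_from_scores(scores):
    """Same statistic computed from a 0/1 answer matrix (rows = people)."""
    totals = [sum(row) for row in scores]
    return kr21(len(scores[0]), mean(totals), pvariance(totals))

print(round(kr21(11, 5.75, 6.52), 3))  # 0.637 (Example 1 summary values)

# Hypothetical data on which KR-20 is 0.75; KR-21 comes out lower here.
data = [[1, 1, 1], [1, 1, 0], [1, 0, 0], [0, 0, 0]]
print(kr21_from_scores(data))
```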



Do you know how to calculate confidence intervals for KR-20?

Hello Kendall,

KR-20 is equivalent to Cronbach’s alpha. You can calculate the confidence interval as described at

https://www.real-statistics.com/reliability/internal-consistency-reliability/cronbachs-alpha/cronbachs-alpha-continued/

Charles

If you set all the values to 1, the formula gives you #DIV/0!, but the result should be 100%. Or if you set Q1.1 = 0 and all the rest to 1, it gives you a very low reliability, when it is supposed to be close to 1….

Something is wrong here.

Hello Iaro,

Unfortunately, some of these reliability measures give strange results in the extreme cases.

Charles
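Both extreme cases can be reproduced with a short Python sketch on hypothetical 12-person, 11-question matrices modeled on Example 1. When everyone gets every question right, the total scores have zero variance, so the formula divides by zero (Excel’s #DIV/0!). When only one answer is wrong, all the variation in the total scores comes from that single item, so there is no covariance between items and KR20 works out to 0.

```python
from statistics import pvariance

def kr20(scores):
    """KR-20 for a 0/1 matrix (rows = people); None if it is undefined."""
    n, k = len(scores), len(scores[0])
    p = [sum(row[j] for row in scores) / n for j in range(k)]
    total_var = pvariance([sum(row) for row in scores])
    if total_var == 0:
        # Every total score is identical, so the formula would divide by
        # zero; this is the #DIV/0! case in Excel.
        return None
    return k / (k - 1) * (1 - sum(pj * (1 - pj) for pj in p) / total_var)

n_people, n_items = 12, 11
all_ones = [[1] * n_items for _ in range(n_people)]
one_wrong = [row[:] for row in all_ones]
one_wrong[0][0] = 0  # the first person misses only the first question

print(kr20(all_ones))   # None
print(kr20(one_wrong))  # approximately 0
```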

Hi Charles,

This website is a wonderful resource. Thank you! I have a question that echoes some of the ones posted already, but I just want confirmation. I have an exam form with 40 items. The questions themselves are multiple choice, but no partial credit is awarded. Therefore, my data is binary (dichotomous), where 1 = correct and 0 = incorrect.

Could you please confirm that calculating Cronbach’s alpha would give exactly the same value as calculating KR20 in this case?

I am using two different tools and the results I get are slightly different (KR20 = 0.72 and Cronbach’s alpha = 0.714).

Thank you!

Hello Claudia,

The results from KR20 and Cronbach’s alpha should be identical.

Charles

Thank you!

Hello, Charles. Good evening.

Could you please answer the following:

If my KR-20 is for a population, then I use VAR.P

If my KR-20 is for a sample, then I use VAR.S

Is that true?

If I remember correctly, you can use VAR.S or VAR.P and get exactly the same result. You must not mix VAR.S with VAR.P, i.e. you must not use VAR.S in one place and VAR.P in another.

Charles
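The advice about not mixing VAR.S and VAR.P can be checked with a small Python sketch on hypothetical data. It writes KR20 in its Cronbach’s alpha form (sum of item variances instead of Σpq), because p·q is itself a population (VAR.P-style) item variance. Using `statistics.variance` (like VAR.S) or `statistics.pvariance` (like VAR.P) throughout gives the same value, since the factor n/(n − 1) cancels; pairing p·q with a VAR.S total does not.

```python
from statistics import pvariance, variance

def alpha(scores, var):
    """KR-20 / Cronbach's alpha with one variance function used throughout."""
    k = len(scores[0])
    item_vars = sum(var([row[j] for row in scores]) for j in range(k))
    total_var = var([sum(row) for row in scores])
    return k / (k - 1) * (1 - item_vars / total_var)

def kr20_mixed(scores):
    """Mixed (incorrect) version: p*q is a population variance, total uses VAR.S."""
    n, k = len(scores), len(scores[0])
    p = [sum(row[j] for row in scores) / n for j in range(k)]
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(pj * (1 - pj) for pj in p) / total_var)

data = [[1, 1, 1], [1, 1, 0], [1, 0, 0], [0, 0, 0]]
print(alpha(data, pvariance))  # VAR.P everywhere
print(alpha(data, variance))   # VAR.S everywhere: same value
print(kr20_mixed(data))        # mixed: a different value
```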

Hi, I am finishing my paper for my DNP. I am implementing an intervention that requires education. I created a quiz for pre- and post-education. The quiz has select-all-that-apply questions.

I need to test reliability and validity on a tool I have yet to administer. Thank you.

Erika,

KR20 is one way to measure reliability.

Charles

Hi Charles! I have a 60-item multiple-choice test. There is only one answer for each item (no partial scoring). Can I use KR 20 for internal consistency? How many respondents do I need to perform KR 20? This is for research purposes.

Somebody has also told me about Cronbach’s alpha. If my test were a scale, the literature suggests 10 respondents for each item. Is this benchmark also true for an achievement test?

One more thing: I will be administering my test to 50 students for item analysis. After I remove “bad” questions and revise some items, can I compute the internal consistency right away, or do I need to administer the good items to another group? (That is, if 50 respondents would be enough for KR 20 or Cronbach’s alpha.)

Hope to hear from you.

Mark,

1. You can use KR20 to determine internal consistency. Note that when each multiple-choice question is coded 1 for a correct answer and 0 for an incorrect answer (the coding commonly used with KR20), KR20 and Cronbach’s alpha are equivalent, i.e. they will have the same value and the same interpretation.

2. There is no clear-cut consensus regarding the sample size. I believe that 10 respondents per item was commonly used, but it is not clear whether this is still the appropriate guideline. The following papers discuss this subject.

https://www.researchgate.net/post/How_much_Should_be_the_Sample_Size_for_Main_Study

https://www.researchgate.net/publication/268809872_Minimum_Sample_Size_for_Cronbach%27s_Coefficient_Alpha_A_Monte_Carlo_Study

3. If you base your sample size on statistical power, then see

https://www.real-statistics.com/reliability/internal-consistency-reliability/cronbachs-alpha/cronbachs-alpha-power/

4. Another approach is to base the sample size on the confidence interval of Cronbach’s alpha. Find the sample size n that provides a lower bound in the Cronbach’s alpha confidence interval that is sufficiently high for your purposes. See

https://www.real-statistics.com/reliability/internal-consistency-reliability/cronbachs-alpha/cronbachs-alpha-continued/

5. After removing or changing questions you should redo the KR20 analysis.

Charles

Hi, Charles!

1. So do I really need to do KR 20 again after I remove bad items? Can I not compute KR 20 immediately from the remaining good questions?

2. If I really need to do KR 20 again after removing bad questions, can I administer the good questions to the same group?

Thank you!

Mark,

1. You can compute KR20 from the good questions without administering the test again. If you revise some of the questions then you would need to readminister the test or at least part of the test.

2. Readministering the test to the same group has the downside that the people may have learned something or been influenced in some way by having answered some of the questions previously.

Charles

Hi Charles!

Bear with me; my questions are not all coming at the same time. I chose a difficulty index (p) of 0.3 to 0.9 and a discrimination index (DI) of 0.3 and above. If a question is within these values of p and DI, then I consider it a good item. Am I doing it right? I am still bothered about distracter analysis, because the literature says a distracter chosen by less than 5% of examinees is nonfunctioning. But if p = 0.9, then the distracters are expected to be chosen by less than 5%.

For distracter analysis, can I simply change options that attract 0%?

Mark,

There are no universally accepted criteria for the difficulty and discrimination indices, but p between .3 and .9 with DI >= .3 may be viewed as acceptable. Sorry, but I don’t understand your question about distracter analysis.

Charles

Hi Charles!

This is in relation to the following:

You can compute KR20 from the good questions without administering the test again. If you revise some of the questions then you would need to readminister the test or at least part of the test.

In case I choose to readminister my test in part, how can I compute the internal consistency of the test as a whole when there could be fewer or more respondents in the 2nd administration?

Thank you.

Mark,

I am not sure how or if you could do this.

Charles

When can we use the KR-20?

To measure the internal consistency of a questionnaire.

Charles

Good day, sir. Please, I need your help with the computation for this question. The question goes thus: if the test was a standardized test with 6000 participants, and the number who got each item right was between 3000 and 4500, compute the Kuder-Richardson reliability coefficient. Hint: select any convenient number of correct responses for each item in the test.

This sounds like a homework problem. I have a policy of not doing homework assignments. Do you have a specific question that might help you do the assignment?

Charles

Hello, have you solved the question? Please kindly reply at ebival4reel@gmail.com

I assume that you are referring to the comment you made in February 2021. Sorry, but I haven’t tried to solve this question since it appeared to be a homework problem. I also don’t completely understand the question. Can you provide any further information?

Charles

Hi Charles,

Thanks for your contribution and support in Stats…

I have a question about internal consistency.

What analysis can I do to find out the internal consistency of a questionnaire that is based on ranking? The questionnaire has 15 questions for which the response is Rank 1 for most preferred, 2 for moderately preferred and 3 for least preferred. Of the 15 questions, 5 measure the visual, 5 the auditory and 5 the kinesthetic learning style. Overall, the 15 questions measure learning styles.

Kindly suggest.

Regards

Jose,

How to measure the internal consistency of a survey with rank-order questions?

The only reference I found online was at the following, but I didn’t find it helpful.

https://www.researchgate.net/post/What-statistic-should-be-used-to-analyse-reliability-of-the-ranking-scale-questionnaire

I am sorry, but I don’t have a suitable answer. I guess you could view each question as three separate questions using a 1,2,3 Likert scale for each question, but then I don’t know whether the usual measurements of internal consistency would be useful.

Charles

Please, can you explain further how you got the value of B18?

As written on the webpage, cell B18 contains the formula =B16/COUNT(B4:B15)

Charles

I need a reference for the Kuder-Richardson KR-21 formula for my research: the complete reference, with author and year. Kindly help me.

See https://pubmed.ncbi.nlm.nih.gov/18175512/

https://methods.sagepub.com/Reference//the-sage-encyclopedia-of-communication-research-methods/i11995.xml

Generally, KR21 has been replaced by KR20.

Charles

Will the result from KR-20 be the same as from the split-half method using Spearman-Brown? Also, I got confused when r1/2 came out negative: how can I put it in the denominator of the Spearman-Brown formula? Thank you

Sorry, but I don’t understand your question. You don’t need to use Spearman’s formula with KR20.

Charles
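One way to make the split-half connection concrete is the classical result that, for an even number of items, Cronbach’s alpha (and therefore KR20 for 0/1 data) equals the average of the Flanagan-Rulon split-half coefficient over all ways of splitting the items into two equal halves. This uses the Flanagan-Rulon formula, not Spearman-Brown; the two agree only when the two halves have equal variances, so a single Spearman-Brown split-half value will generally differ from KR20. The sketch below checks the average on hypothetical data; the function names are illustrative.

```python
from itertools import combinations
from statistics import pvariance

def alpha(scores):
    """Cronbach's alpha (equal to KR-20 for 0/1 data), population variances."""
    k = len(scores[0])
    item_vars = sum(pvariance([row[j] for row in scores]) for j in range(k))
    total_var = pvariance([sum(row) for row in scores])
    return k / (k - 1) * (1 - item_vars / total_var)

def flanagan_rulon(scores, half):
    """Split-half coefficient 2 * (1 - (var(A) + var(B)) / var(total))."""
    a = [sum(row[j] for j in half) for row in scores]   # half-test A scores
    b = [sum(row) - s for row, s in zip(scores, a)]     # half-test B scores
    total_var = pvariance([sum(row) for row in scores])
    return 2 * (1 - (pvariance(a) + pvariance(b)) / total_var)

def mean_split_half(scores):
    """Average over every equal split of the items (k must be even)."""
    k = len(scores[0])
    halves = list(combinations(range(k), k // 2))
    return sum(flanagan_rulon(scores, h) for h in halves) / len(halves)

data = [[1, 1, 1, 0], [1, 1, 0, 0], [1, 0, 1, 1], [0, 0, 0, 0], [1, 1, 1, 1]]
print(alpha(data), mean_split_half(data))  # the two values agree
```

As for a negative half-test correlation r, the Spearman-Brown denominator 1 + r stays positive for any r greater than −1, so the formula simply produces a negative coefficient.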

I have questions in my questionnaire with the options Yes and No. Can I use the KR-20 test to calculate Cronbach’s alpha?

Kalyani,

For data with two values (such as Yes/No or Correct/Incorrect), KR-20 is equivalent to Cronbach’s alpha.

Note that for your situation, you need to decide whether 0 = No and 1 = Yes is the correct coding or 0 = Incorrect and 1 = Correct.

Charles

I do have four choices in my questionnaire. Is KR 20 applicable?

Evelyn,

If you are using a multiple-choice test with four choices, then you can use KR20. In this case, you need to use the coding 1 = a correct answer and 0 = an incorrect answer. If instead, you are using a Likert scale with 4 choices, then no you can’t use KR20; you can use Cronbach’s alpha in this case.

Charles

Good morning, Professor,

I used KR20 for 11 items (dichotomous variables). The first five belong to one construct, and the remaining six form two other constructs of three items each. However, the three constructs belong to a bigger construct (the one that interests the research): should I calculate KR20 for each of the three constructs or for the big one? In one case I get good reliability and in the other I don’t.

Thank you for your attention,

Mayra

Mayra,

It really depends on how closely related these concepts are. You should decide which approach to use prior to getting the results since you don’t want your decision to be influenced by the results.

Charles

Mr Charles

I’m not really clear about the way the variance is calculated in Excel. I’d appreciate it if you could clarify. Thanks

Variance can be calculated in Excel by the function VAR.S (old version VAR) or VAR.P (old version VARP). As long as you use the same version in all the places where you need to calculate a variance then you will get the same answer for KR20.

Charles

Hi,

What method will I use when I use the 5 point Likert Scale and how do I do it? Thank you.

Cronbach’s alpha.

Charles

Thank you, Charles. So I cannot use Kuder-Richardson?

No, you can’t.

Charles

I am Yasinta Alex from Tanzania, and I am doing my first degree at the University of Dar es Salaam. I am very interested in how you interact with each other in solving these problems. Thank you very much. I was looking for the Kuder-Richardson formula.

Hello Yasinta Alex,

Good to hear from someone from Tanzania.

Charles

Hello,

I have a set of 6 dichotomous variables. This generates 3 components with a sample size of 10,000. I carried out Kuder-Richardson using SPSS and my results are relatively low (0.364, 0.325 and 0.163). I was wondering if there are any other tests that may produce better results.

Thank you.

Lucy,

You shouldn’t try to shop around for better results, but instead try to understand why your results are so low.

See the following webpage for things that you might be able to do. Note that Kuder-Richardson is a special case of Cronbach’s alpha.

https://real-statistics.com/reliability/internal-consistency-reliability/cronbachs-alpha/

Charles

Good day sir,

I am a student planning to do a KAP study for my final year project. I used Excel to calculate the internal consistency of the questionnaire and got a negative value (-0.303). Is there a problem with the calculation, or is the questionnaire not valid at all?

You can get a negative value. If you did the calculations correctly, this would mean that either (1) the reliability is very poor or (2) you need to modify the calculations in some way. The second of these issues is fully described on the following webpage

Cronbach’s Alpha

Charles
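A negative value is easy to produce. Writing the total-score variance as the sum of the item variances plus all the inter-item covariances shows that KR20 is negative exactly when the items covary negatively on average. The hypothetical two-question data in the sketch below are chosen so that people who get one question right tend to get the other wrong.

```python
from statistics import pvariance

def kr20(scores):
    """KR-20 for a 0/1 matrix (rows = people, columns = items)."""
    n, k = len(scores), len(scores[0])
    p = [sum(row[j] for row in scores) / n for j in range(k)]
    total_var = pvariance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(pj * (1 - pj) for pj in p) / total_var)

# Hypothetical 2-question test in which the two items tend to disagree.
data = [[1, 0], [0, 1], [1, 1], [0, 1]]
print(kr20(data))  # negative
```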

Hello sir,

I am doing a survey on Covid-19. I have 15 questions with dichotomous choices and need to calculate Cronbach’s alpha. Could you please help me with this?

Renuka,

For dichotomous data, Cronbach’s Alpha is equivalent to Kuder and Richardson Formula 20.

Charles

thank you so much sir

Hi Charles, thank you so much for the explanation!

I was wondering about the variance. My test was administered to a large representative sample of the population. How can I calculate the variance when I don’t know how many people took the test? Is it possible to do it?

Here are more details about the problem: A test contains 40 dichotomous items (0 = failure, 1 = success). It is administered to a large representative sample of the population. Half of the items have difficulty indices p of 0.50 and correlations of 0.40, and the other half have difficulty indices of 0.50 and correlations of 0.50. The total score X of the test is defined by the sum of the scores of the 40 items.

I can’t calculate the reliability of the test because I don’t have the total score variance?

Thank you!

Hello Mira,

Yes, you would need to know how many people took the test.

Charles


Hi, I need help to solve this problem, please:

A test contains 20 dichotomous items (0 = failure, 1 = success). It is administered to a large representative sample of the population. Half of the items have difficulty indices p of 0.40 and correlations of 0.30 and the other half have difficulty indices of 0.50 and correlations of 0.50. Furthermore, the correlations between the 10 items in the 1st half and the 10 items in the 2nd half are all zero. The total score X of the test is defined by the sum of the scores of the 20 items.

I have to calculate the reliability of the test.

My question is: how can I calculate the total variance of the test to use in the KR20 formula?

Hello Andrea,

I don’t understand what you mean by “Half of the items have … correlations of 0.30” What are these items correlated with?

Regarding the statement “Half of the items have difficulty indices p of 0.40 …”, see

https://real-statistics.com/reliability/item-analysis/item-analysis-basic-concepts/

Charles

Hi,

Thanks a million for all your effort.

I created a questionnaire that has three sections to measure knowledge, attitude and practice regarding a certain disease, respectively. Sections 1 and 2 have yes/no/I don’t know questions, and Section 3 has 5-point Likert scale questions. I’m going to calculate Cronbach’s alpha, but my question is: should I calculate it for each section separately? Are they considered three different concepts? All the questions are related to one specific disease.

kind regards

Islam

Hello Islam,

Without seeing your questionnaire, I am unable to say whether the sections are measuring different concepts, but if they are then you should calculate three separate reliability measures (e.g. Cronbach’s alpha for each section; KR20 is the special case for dichotomous data).

Charles

Thank you for your response

I mean how do we define “concept” in a survey?

Should I do factor analysis to determine the number of constructs?

Is there any possibility I can send the questionnaire to you?

Regards

Islam

Islam,

See Factor Analysis

Charles

Hello!

What reliability testing should be used if you are using correct, incorrect and don’t know in the questionnaire instead of just correct and incorrect?

You can use Cronbach’s Alpha.

Charles

Does Kuder-Richardson have the same cutoff as Cronbach’s alpha (.70), or is it different? If it is different, do you happen to know what would be considered the minimum acceptable value? Many thanks

John,

Yes, it is the same. In fact, Kuder Richardson produces the exact same statistic as Cronbach’s alpha.

Charles

I stumbled across your blog (after getting the results from my first exam, for which my Scantron office always provides the KR20 score) and instantly became absorbed in reading the comments section.

In any case, I simply wanted to commend your patience… and tip my hat to you. 🙂

-Kindly.

Thank you very much, Missy.

Charles

Can I use Cronbach’s alpha with a test using 1 and 0 choices?

Yes, in this case Cronbach’s alpha is equivalent to Kuder and Richardson Formula 20.

Charles

Dear sir, I have a dichotomous checklist and need to use the KR 20 formula. Can I have a video of the method?

Sorry, but I don’t have a video of the method.

Charles

Hello,

Thank you for this helpful information. I calculated this statistic for my data and got a value of 0.931. Since this is over 0.90, do I need to be worried or is this still an appropriate statistic to use?

Hello Lindsey,

This shows that your questionnaire is reliable (i.e. internally consistent). You don’t need to worry.

Charles

After realizing I made a calculation error, my actual value is only 0.51.

I have tried deleting questions that a large proportion of respondents missed. Theoretically, this should improve the value, but in my case, while the sum of the p*q terms decreases, so does my variability.

What suggestions do you have to improve the reliability?

Hello Lindsey,

The reliability shouldn’t necessarily improve when you remove questions that subjects got wrong.

The important thing is to make sure that all the questions are measuring the same concept. If you are, say, measuring two different concepts, you should calculate two KR20 values, one for the questions related to concept 1 and another for the questions related to concept 2.

Other such advice is given on the Cronbach’s alpha webpage (note that KR20 is a special case of Cronbach’s alpha). See

Cronbach’s alpha

Charles

Hi, Professor:

I have to administer a reading comprehension test that comprises three different skills along with the relevant sub-skills. Should I run KR-20 for each subtest, since each measures a different skill, or can I run the formula on all the skills together? All are MCQs. My second concern: after calculating KR-20 for each skill (in case I do them separately), is there any requirement to calculate a total KR-20 value?

Thanks in advance!

Kaifi

Hello Kaifi,

If each is measuring a different concept, then I suggest that you run KR20 for each separately.

Re a total KR20, it depends on how you will use this measurement. You can use the min of the 3 measurements or the mean, but in my opinion neither of these is really of much value.

Charles
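Running KR20 separately for each concept just means applying the same formula to each group of columns. The Python sketch below assumes the items for each concept are listed by column index in a dictionary; the construct names and data are hypothetical, and each group needs at least two items.

```python
from statistics import pvariance

def kr20(scores):
    """KR-20 for a 0/1 matrix (rows = people, columns = items)."""
    n, k = len(scores), len(scores[0])
    p = [sum(row[j] for row in scores) / n for j in range(k)]
    total_var = pvariance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(pj * (1 - pj) for pj in p) / total_var)

def kr20_by_construct(scores, constructs):
    """Compute KR-20 separately for each group of item columns."""
    return {
        name: kr20([[row[j] for j in cols] for row in scores])
        for name, cols in constructs.items()
    }

data = [[1, 1, 1, 0], [1, 1, 0, 0], [1, 0, 1, 1], [0, 0, 0, 0], [1, 1, 1, 1]]
print(kr20_by_construct(data, {"skill_1": [0, 1], "skill_2": [2, 3]}))
```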

I got it! Thanks a lot! 🌸

How do I find the variance? Please help.

You use the VAR.S or VAR.P function.

Charles

Nice, it’s easy in Excel, but I want to solve it on paper. Please help me calculate it.

How do we know the number of people who answered correctly or not? What is the parameter? For instance, I have 15 sample participants.

I am sorry, but I don’t understand your question.

Charles

Hi, I am using a 50-question objective test in which each question has four options lettered A-D. Which reliability test is more appropriate?

Hi, if there is one correct answer for each question, then you can code the answers as 1 for correct and 0 for incorrect. In this case it doesn’t matter which specific letter is chosen. You can use any of the tests described on the website, including Kuder and Richardson 20, which will be equivalent to Cronbach’s alpha.

Charles
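Coding A-D answers (or True/False answers) as 1/0 amounts to comparing each response with an answer key. Below is a minimal Python sketch with a hypothetical key and responses; unanswered questions (None here) are simply scored 0, which is one possible convention rather than the only one.

```python
def score_against_key(responses, key):
    """Return a 0/1 matrix: 1 where a person's answer matches the key."""
    return [
        [1 if answer == correct else 0 for answer, correct in zip(row, key)]
        for row in responses
    ]

key = ["B", "D", "A", "C"]
responses = [
    ["B", "D", "C", "C"],
    ["A", "D", "A", None],   # None: question left blank, scored as 0
    ["B", "C", "A", "C"],
]
print(score_against_key(responses, key))
# [[1, 1, 0, 1], [0, 1, 1, 0], [1, 0, 1, 1]]
```

The resulting 0/1 matrix is what goes into the KR20 calculation.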

Hi Charles,

Not sure if you are still active here. I am trying to understand the concept of reliability. For example: I ask students a question about their views toward technology before they participate in a new computer skill class and then again two months after they complete the class. Shall I use KR 20 to check the score reliability?

Hi, can I use this formula for a vocabulary test in which subjects must write the translated answer?

Hello Zahra,

You can use it as described on the webpage as long as there are only two answers for each question or only a correct or incorrect answer.

Charles

If we have a test of 50 items with a mean of 30 and a standard deviation of 6, what will be the reliability of the test using the Kuder-Richardson method?

Hello Chandrhas sahu,

You need more information than this to calculate the KR20 reliability. This webpage shows how to calculate the reliability based on the raw data.

Charles

Hi, I am currently using a Yes/No questionnaire coded 1 = Yes and 2 = No, and I used Cronbach’s alpha to check its reliability, but it came out as 0.5, which is unacceptable. Is KR-20 an appropriate tool?

Hello Kenneth,

With yes/no questions, Cronbach’s alpha and KR-20 are equivalent.

If the questions on this questionnaire have correct and incorrect answers, then you shouldn’t code the answers with 1 for yes and 2 for no. Instead you should use 1 for correct and 0 for incorrect.

If there aren’t correct and incorrect responses, then you can use a code of 1 for yes and 0 for no provided there aren’t any reverse coded questions. If there are you need to reverse the coding for these questions. This concept is explained at

Cronbach’s Alpha

Charles
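The recoding described here can be sketched in Python as follows, assuming 1 = Yes and 2 = No in the raw data and a hypothetical set of reverse-coded question indices. Recoding every item the same way (1/2 to 1/0) does not by itself change Cronbach’s alpha, since shifting or flipping all items leaves the variances and covariances unchanged; what matters is reversing the reverse-coded items (or, for questions with right answers, coding by correctness).

```python
def recode_yes_no(responses, reversed_items=frozenset()):
    """Map 1 = Yes, 2 = No to 1/0, then flip the reverse-coded items.

    reversed_items holds 0-based question indices whose wording runs in the
    opposite direction, so that a "No" there counts as 1.
    """
    recoded = []
    for row in responses:
        coded = [1 if answer == 1 else 0 for answer in row]
        recoded.append([1 - c if j in reversed_items else c
                        for j, c in enumerate(coded)])
    return recoded

raw = [[1, 2, 1], [2, 2, 1], [1, 1, 2]]
print(recode_yes_no(raw, reversed_items={1}))
# [[1, 1, 1], [0, 1, 1], [1, 0, 0]]
```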

Please, Prof., I want to ask how I can calculate the reliability of pretest and posttest results. Should I calculate it for the posttest only, or for the pretest and posttest together?

Secondly, which instrument is good for measuring the scores of an artwork? Thank you, sir.

Hello,

I don’t know what you mean by the reliability of pretest and posttest results. Are you trying to compare the results or something else?

I also don’t know what you mean by measuring the scores of an artwork. What sort of scores do you have?

Charles

Dear Prof Charles,

I am interested in applying KR20 in my research, but I have a few questions.

1) I have true/false questions. How should I handle missing values in my case?

2) If I group my true/false questions into 4 constructs, does that mean I need to calculate KR20 for each construct?

Hope to hear from you soon. Thank you.

1. First, if the questionnaire has True/False questions, you shouldn’t calculate KR20 using 1 for True and 0 for False. Instead, you should use 1 for a correct answer and 0 for an incorrect answer.

Regarding how to handle missing data, first understand that KR20 is really Cronbach’s alpha restricted to dichotomous values (0 or 1). The formula for Cronbach’s alpha is based on a covariance matrix, as described at

https://real-statistics.com/reliability/cronbachs-alpha/

If there are missing data values, you can still calculate the covariance matrix using pairwise covariances. This is well suited to missing data. E.g. you can calculate the covariance matrix using a Real Statistics formula of the form COV(R1,FALSE), as described at the following webpage:

https://real-statistics.com/multiple-regression/least-squares-method-multiple-regression/

2. If the 4 constructs are measuring 4 different concepts, then yes, you should calculate 4 separate KR20 values.

Charles
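Below is a minimal Python sketch of the pairwise approach, assuming missing answers are stored as None. It is an independent illustration of the idea, not the Real Statistics COV function: each covariance uses only the people who answered both questions, the total-score variance is taken as the sum of all entries of the covariance matrix, and alpha is computed from that matrix. One caveat: a pairwise covariance matrix is not guaranteed to be positive semidefinite, so results from heavily incomplete data deserve caution.

```python
def pairwise_cov(x, y):
    """Sample covariance of x and y using only rows where both are present."""
    pairs = [(a, b) for a, b in zip(x, y) if a is not None and b is not None]
    n = len(pairs)
    mx = sum(a for a, _ in pairs) / n
    my = sum(b for _, b in pairs) / n
    return sum((a - mx) * (b - my) for a, b in pairs) / (n - 1)

def alpha_pairwise(scores):
    """Cronbach's alpha (KR-20 for 0/1 data) from a pairwise covariance matrix."""
    k = len(scores[0])
    cols = [[row[j] for row in scores] for j in range(k)]
    cov = [[pairwise_cov(cols[i], cols[j]) for j in range(k)] for i in range(k)]
    item_var = sum(cov[i][i] for i in range(k))   # sum of item variances
    total_var = sum(sum(row) for row in cov)      # variance of the total score
    return k / (k - 1) * (1 - item_var / total_var)

complete = [[1, 1, 1], [1, 1, 0], [1, 0, 0], [0, 0, 0]]
with_gaps = [[1, 1, 1], [1, None, 0], [1, 0, 0], [0, 0, 0], [0, 1, None]]
print(alpha_pairwise(complete))   # same as KR-20 when nothing is missing
print(alpha_pairwise(with_gaps))
```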

Hi Charles, I’ve used KR-21 and my reliability comes out greater than 1 (1.05). Is it valid, or is there a problem with my calculation?

Hi,

There is probably an error in your calculation. In any case, you should really use KR-20 instead since KR-21 is only an approximation for KR-20.

If you send me an email with your data, I can check whether you made a mistake in calculating KR-21.

Charles

Is there a recommended number of test participants needed to calculate a valid correlation coefficient using the Kuder Richardson 20?

Hello Debra,

I don’t know of such a recommendation. You can always back into such a value based on the level of accuracy you need. This type of approach is demonstrated for Cronbach’s Alpha on the webpage:

https://real-statistics.com/reliability/cronbachs-alpha/cronbachs-alpha-continued/

Keep in mind that Kuder Richardson 20 is just a special case of Cronbach’s Alpha where all the data values are 0 or 1.

Charles

I need help understanding the relationship between the sum of p(i)*q(i) and the population variance. Intuitively, a large value of V(x) would improve KR20, since the test results are more spread out, so I think I understand the denominator. I can see that p(i)*q(i) is an upside-down parabolic function of p (the probability that the item is answered correctly), since p*q = p-p^2. If I could understand the meaning of p*q, then I might be able to make sense of how the internal consistency of the test is related to the function 1-sum(pi*qi)/V. Please let me know.

Hello David,

I don’t really know since I have not investigated the derivation of the formula. Perhaps someone else in the Real Statistics community has an answer for you.

Charles
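Two standard identities, offered as an aid to intuition rather than as the original derivation, may help here. For a 0/1 item, p·q is exactly the item’s population variance. And the variance of the total score equals the sum of the item variances plus the sum of all inter-item covariances. So Σpq/σ² is the share of the total-score variance that comes from the items individually, and 1 − Σpq/σ² is the share that comes from the items varying together. The Python sketch below checks this on hypothetical data.

```python
from statistics import mean, pvariance

def pcov(x, y):
    """Population covariance of two equal-length lists."""
    mx, my = mean(x), mean(y)
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / len(x)

def variance_parts(scores):
    """Split the total-score variance into item and between-item parts."""
    k = len(scores[0])
    cols = [[row[j] for row in scores] for j in range(k)]
    sum_pq = sum(mean(c) * (1 - mean(c)) for c in cols)  # = sum of item variances
    between = sum(pcov(cols[i], cols[j])
                  for i in range(k) for j in range(k) if i != j)
    total = pvariance([sum(row) for row in scores])
    return sum_pq, between, total

data = [[1, 1, 1], [1, 1, 0], [1, 0, 0], [0, 0, 0]]
sum_pq, between, total = variance_parts(data)
k = len(data[0])
print(sum_pq + between, total)             # equal: total = sum pq + covariances
print(k / (k - 1) * (1 - sum_pq / total))  # KR-20
print(k / (k - 1) * between / total)       # same value, covariance form
```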

Thank you for the explanation. First, sorry for my English, but I need to ask you something.

My scale has three possible answers: Yes, No and Not applicable. Can I use Kuder and Richardson Formula 20?

Juliana,

No, since Kuder-Richardson accepts only two values, but you can use Cronbach’s Alpha instead.

Charles

Thank you. This was very helpful for my research, but if you don’t mind, I repeated the computations with your data and observed that the variance I got is different from yours: mine is 7.11 while you got 6.52. Did you use a different method for calculating the variance? Secondly, I conducted calculations using a different set of data and got KR20 = -1.17 and KR21 = -1.94. Is this realistic, and how does one interpret these results? I also observed that KR21 underestimates KR20. Thank you.

Winigkin Miebofa,

1. You used the sample variance VAR.S and I used the population variance VAR.P. As long as you consistently use one or the other, you will get the same result for KR20.

2. It is possible to get a negative value, although a value such as -1.17 seems unlikely; I may be wrong about this, though. If you send me an Excel file with your data, I can check whether this value is correct.

Charles

Hi sir, I have a survey that includes multiple-choice questions scored from 5 = strongly agree to 1 = disagree. In the same survey I ask about age (20-30, 31-41, and over 41) and education (secondary, college and university). The same survey also asks questions with yes or no answers. Can I put all the items in the same sheet, or should I divide them?

Ali,

First, let me answer your question regarding Cronbach’s alpha. KR20 is a special case of Cronbach’s alpha.

You can combine questions with yes/no, multiple choice and likert scale answers, but Cronbach’s alpha should only be used on a group of questions that are testing the same concept. It is likely that in the situation you are describing, multiple concepts are being tested. You should calculate a separate Cronbach’s alpha (or KR20) for each group of questions corresponding to one of the concepts.

Second, KR20 can only be used with questions that have dichotomous answers (i.e. only two possible answers). You can use KR20 with yes/no questions and even multiple-choice questions (where KR20 evaluates the answer as 1 for correct and 0 for incorrect). KR20 can’t be used with Likert scale questions since they have more than two possible answers; Cronbach’s alpha, however, can be used with such questions.

Charles

Good day, sir. I noticed that you used *n=12* for KR20 but *n=11* for KR21…? n is the number of items, which is 12. I don’t understand.

Fame,

n = 12 is the number of students taking the test, while k = 11 is the number of items.

Charles

Good day, sir. I found this material very informative, but I need some clarity from you. When calculating the reliability of multiple-choice questions, after labeling correct answers ‘1’ and incorrect answers ‘0’ and summing their proportions, what I am not clear about is the standard deviation (or variance): should it be calculated from the test scores or from the total number of ‘1’s? For example, I have pretest scores of 46, 40, 56, 67, 76, 48, … and posttest scores of 60, 70, 65, 78, 54, 90. Do I use these scores to get my standard deviation, or the total number of ‘1’s? Secondly, the study is an experimental study with a pretest and posttest that involves an experimental group and a control group. Should I combine the pretest scores of the experimental and control groups to get their reliability, or do them separately?

Jonah,

You don’t need to calculate the standard deviation to obtain the KR20 reliability measurement. Just use the approach described in Figure 1.

Unless you have reason to believe that your questionnaire will be interpreted differently by the control and treatment groups, I would combine the samples to obtain the reliability measurement.

Charles

Really good explanation of Kuder and Richardson. However, I felt one thing was missing regarding the interpretation of the figures. Based on your calculation, you stated that 0.738 indicates high reliability. May I know what range of Kuder and Richardson values indicates high or low reliability?

Letchumanan,

There isn’t uniform agreement as to how to interpret the KR20 value. Note that KR20 is equivalent to Cronbach’s alpha. See the following webpage for more information: https://real-statistics.com/reliability/cronbachs-alpha/

Charles