Basic Concepts

The Kuder and Richardson Formula 20 test checks the internal consistency of measurements with dichotomous choices. It is equivalent to performing the split-half methodology on all combinations of questions and is applicable when each question is either right or wrong. A correct answer scores 1 and an incorrect answer scores 0. The test statistic is

$$\rho_{KR20} = \frac{k}{k-1}\left(1 - \frac{\sum_{j=1}^{k} p_j q_j}{\sigma^2}\right)$$

where

k = number of questions

pj = proportion of people in the sample who answered question j correctly

qj = proportion of people in the sample who didn’t answer question j correctly (i.e. qj = 1 - pj)

σ² = variance of the total scores of all the people taking the test = VAR.P(R1) where R1 = array containing the total scores of all the people taking the test.

Values typically range from 0 to 1, although a negative value can occur when the questions are not internally consistent. A high value indicates reliability, while too high a value (in excess of .90) indicates a homogeneous test (which is usually not desirable).

Kuder-Richardson Formula 20 is equivalent to Cronbach’s alpha for dichotomous data.
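The definitions above can be sketched in a few lines of Python. This is only an illustration: the function name kr20 and the small data set are made up for the sketch (they are not the data from Figure 1 and not part of the Real Statistics Resource Pack), and the variance is the population variance, matching VAR.P.

```python
def kr20(scores):
    """KR-20 for a list of rows; each row holds one person's 0/1 item scores."""
    n = len(scores)          # number of people
    k = len(scores[0])       # number of questions
    # p_j: proportion of people who answered question j correctly
    p = [sum(row[j] for row in scores) / n for j in range(k)]
    sum_pq = sum(pj * (1 - pj) for pj in p)
    # population variance of the total scores (the analogue of Excel's VAR.P)
    totals = [sum(row) for row in scores]
    mean = sum(totals) / n
    var_p = sum((t - mean) ** 2 for t in totals) / n
    return k / (k - 1) * (1 - sum_pq / var_p)

# Hypothetical data: 5 people, 4 questions (NOT the data from Figure 1)
data = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
result = kr20(data)
print(round(result, 3))
```

For this made-up data the sum of the pq values is 0.8 and the variance of the totals is 2, so the coefficient is (4/3)(1 - 0.4) = 0.8.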

Example

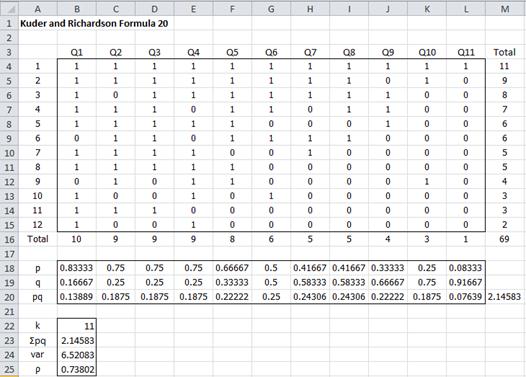

Example 1: A questionnaire with 11 questions is administered to 12 students. The results are listed in the upper portion of Figure 1. Determine the reliability of the questionnaire using Kuder and Richardson Formula 20.

Figure 1 – Kuder and Richardson Formula 20 for Example 1

The values of p in row 18 are the proportion of students who answered that question correctly (e.g. the formula in cell B18 is =B16/COUNT(B4:B15)). Similarly, the values of q in row 19 are the proportion of students who answered that question incorrectly (e.g. the formula in cell B19 is =1-B18). The values of pq are simply the product of the p and q values, with the sum given in cell M20.

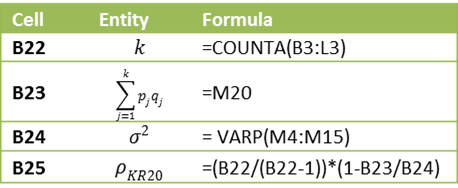

We can calculate ρKR20 as described in Figure 2.

Figure 2 – Key formulas for worksheet in Figure 1

The value ρKR20 = 0.738 shows that the test has high reliability.

Worksheet Function

Real Statistics Function: The Real Statistics Resource Pack provides the following function:

KUDER(R1) = KR20 coefficient for the data in range R1.

For Example 1, KUDER(B4:L15) = .738.

KR-21

When the questions in a test all have approximately the same difficulty (i.e. the mean score of each question is approximately equal to the mean score of all the questions), a simplified version of Kuder and Richardson Formula 20 is Kuder and Richardson Formula 21, defined as follows:

$$\rho_{KR21} = \frac{k}{k-1}\left(1 - \frac{\mu(k-\mu)}{k\sigma^2}\right)$$

where μ is the population mean of the total scores (approximated by the observed mean score).

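For Example 1, μ = 69/12 = 5.75, and so, with k = 11 and σ² = 6.5208,

$$\rho_{KR21} = \frac{11}{10}\left(1 - \frac{5.75(11-5.75)}{11 \cdot 6.5208}\right) = 0.637$$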

Note that ρKR21 typically underestimates the reliability of a test compared to ρKR20.
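As a rough check of the KR-21 calculation for Example 1, here is a minimal Python sketch. The function name kr21 is made up for illustration. The variance is an assumption: it is recovered from the sample variance of 7.1136 that a reader reports for the same 12 total scores in the comments below, converted to a population variance.

```python
def kr21(k, mean, var_p):
    """KR-21 from the number of questions, the mean total score and
    the population variance of the total scores."""
    return k / (k - 1) * (1 - mean * (k - mean) / (k * var_p))

k = 11                     # questions in Example 1
mean = 69 / 12             # mean total score, as given in the text
# Assumption: VAR.P recovered from the reported VAR of 7.1136 for n = 12,
# using VAR.P = VAR * (n - 1) / n
var_p = 7.1136 * 11 / 12
rho_kr21 = kr21(k, mean, var_p)
print(round(rho_kr21, 3))
```

Under these assumptions the result comes out near 0.637, below the KR-20 value of 0.738, which is consistent with the remark that KR-21 tends to underestimate.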


References

Wikipedia (2012) Kuder-Richardson formulas

https://en.wikipedia.org/wiki/Kuder%E2%80%93Richardson_formulas

Good day, sir,

Not sure if you are still active on this page or not, but you seem the expert on the matter. I am having an issue with KR20 (am using alpha on SPSS but I have read that is how it works). My scale is like a school test which consists of 36 True/False items. In 15/36 of the items, True is the correct answer, and in the rest, False is the correct answer. So I reversed the questions accordingly so that those who answer False usually get 1 point, and those who answer True in the 15 reversed questions get 1 point, and thus the higher score (more correct choices) gives an individual more points. When you enter reversed items along with the non-reversed items for a non-dichotomous scale (as you should), the internal reliability of the scale usually increases. If not, it remains in the same ball park.

When I enter the items for my scale this way in SPSS, the reliability is completely thrown off. Alpha is .84 when entered as they originally appear in the dataset, and is .44 when they are entered with the original items + reversed items (without the original items that were reversed, of course). There seems to be no error in computing the new reversed variables. Do you recommend running a different test for the internal reliability of the scale? Or is it recommended to run the items in their original form? It appears that KR20, although having a similar formula, may calculate reliability for reversed items differently. But I am not the expert.

I know this is wordy, but I hope I was clear enough regarding the issue.

All the best,

S. S. Ahmad

S.S. Ahmad,

To simplify things, you should use KR20 based on a coding of 1 for a correct answer and 0 for an incorrect answer. It doesn’t matter if you are using TRUE/FALSE, multiple choice or some other type of question.

Charles

Thank you, Charles. Is there any explanation for why the scale is giving a much lower reliability than .82 when I reverse-enter them? Shouldn’t the reliability stay about the same? Or is that not necessary? Is trying a different test of reliability warranted?

S. S. Ahmad

There is no reason that the reliability would be the same when you reverse code, and in any case, there is only one coding that is correct, namely the one I explained in my previous response. Other tests of reliability may give a different result, but generally the result won’t be a lot different. In any case, it is unscientific (and arguably unethical) to shop around for a test that gives a result that you like better.

Charles

Thank you, Charles. You are correct, but since I do not have adequate guidance on the matter, I feel as though I may be doing something wrong. Perhaps this may help. When I check the reliability of the True questions by themselves, and of the False questions by themselves, then I get good reliabilities for both scales (.74 and .75). But when I scale them together, the reliability significantly decreases. One tests accurate knowledge, whereas the other one tests inaccurate knowledge. Perhaps the take-away is that they should remain as two separate scales, since they test separate things? I created the scales with the idea that the items would be opposite, and therefore just a reversed version of the original questions. Perhaps having accurate knowledge and inaccurate knowledge are not the same after all.

If some questions test one concept and other questions test a different concept, then yes, you should calculate two separate reliability measurements.

Charles

Charles,

When I input data from my class, I received a reliability score of 1.007. Would this mean that the test was very reliable?

Brianna,

The higher the better, but mathematically the value shouldn’t be higher than one.

Charles

Charles,

I am hoping you can help me. I am calculating the KR-20 using excel like you have shown in the sample and my calculated value comes out the same as yours, so I know that my formulas are correct. In some cases when I run with my own data, the KR-20 seems fine. But there are several times when the KR-20 is negative.

For example:

k = 30

sum pq = 3.2805

var.p = 0.2799

KR-20 = -11.0885

I understand mathematically why it calculates as negative, but why does this occur for my data set? I have 30 items on the test and 110 students taking the test.

Any insight would be appreciated.

Debbie,

If you send me an Excel file with your data and analysis I will try to figure out why this is occurring with your data.

Charles

I’m also getting a KR-20 value of -0.42. May you please send me your email so that I could send you my Excel spreadsheet with the data.

Dr. Knowles,

My email address can be found at Contact Us.

Charles

I do not understand how we got the value of p,q and variance. help me out.

Mark,

The answers to your question are given just below Figure 1.

Charles

thank u

thanks for this formula.

i am not understanding how to fix up the values. i have 30 true or false questions for 10 respondents. please help me out.

Enter the data as in Figure 1. You should have 30 columns for the questions and 10 rows for the respondents. You should use 1 for a correct answer and 0 for an incorrect answer.

Charles

Dear Charles,

To calculate the 95% confidence interval of the KR20 are the following formulas correct?

=1-((1-KR20)*FINV(0.05/2,df1,df2)), for the lower limit

=1-((1-KR20)*FINV(1-(0.05/2),df1,df2)), for the upper limit

I’m looking forward to hearing from you.

Best regards,

Fernanda.

Fernanda,

Yes, this is correct. These formulas are shown on the following webpage for Cronbach’s alpha:

https://real-statistics.com/reliability/cronbachs-alpha/cronbachs-alpha-continued/

Since KR20 has the same value as Cronbach’s alpha, they are also valid for KR20.

Charles

Thank you for your prompt reply.

Please tell me how to do the further calculation if I have to use a Likert scale or a mean/median process…

I don’t completely understand your question, but if you have Likert data, you should use Cronbach’s alpha instead of Kuder-Richardson. See

Cronbach’s Alpha

Charles

I’m using a questionnaire with 50 questions on global warming awareness. I have taken a sample of 100 students from 2 different schools, of which 50 are boys and 50 are girls. The questions are of the simple yes/no type. The aim is to calculate and compare awareness between the two groups, i.e. whether boys or girls are more aware of the topic.

Hello sir, I want help with my research questionnaire on global warming, containing 50 questions, with a sample of 100 (50 boys and 50 girls). The questionnaire has dichotomous questions of the yes/no type, of which 30 are positive and 20 are negative. Please tell me the next step of the calculation and how it is done in an Excel sheet.

Anjali,

The process is explained on the webpage. You should code positively worded questions as Yes = 1 and No = 0, while negatively worded questions as Yes = 0 and No = 1.

Charles
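The coding rule in this reply can be sketched in Python as follows. The function name, the argument names and the four sample answers are hypothetical and only illustrate the rule (positively worded: Yes = 1, No = 0; negatively worded: Yes = 0, No = 1).

```python
def code_responses(answers, negative_items):
    """Convert Yes/No answers to 0/1 scores, reversing negatively worded items.

    answers: list of "Yes"/"No" strings for one respondent
    negative_items: set of 0-based indexes of the negatively worded questions
    """
    scores = []
    for j, answer in enumerate(answers):
        yes = answer.strip().lower() == "yes"
        if j in negative_items:
            scores.append(0 if yes else 1)   # negatively worded: No = 1
        else:
            scores.append(1 if yes else 0)   # positively worded: Yes = 1
    return scores

# Hypothetical respondent: questions 2 and 3 (0-based) are negatively worded
coded = code_responses(["Yes", "No", "Yes", "No"], negative_items={2, 3})
print(coded)  # [1, 0, 0, 1]
```

Each respondent’s coded row can then be entered as one row of the worksheet, as in Figure 1.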

hi, kindly let me know under what conditions kuder Richardson formula and Cronbach formula be used

Ruth,

The main requirement is that the test is measuring only one concept. Also if there are too few questions the value may not be very accurate. See the following article for more details: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4205511/

Charles

Thank you !

What is the reliability of:

k = 10

Σpq = 8.6

Var = 17.2

ρKR20 = 0.556

The reliability is 0.556, the ρKR20 value.

Charles
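When only the summary values are available, as in this comment, the coefficient can be checked directly. The sketch below uses a made-up helper name, kr20_from_summary, and plugs in the figures quoted by readers in this thread.

```python
def kr20_from_summary(k, sum_pq, var_p):
    """KR-20 from the number of questions, the sum of the pq values and
    the population variance of the total scores."""
    return k / (k - 1) * (1 - sum_pq / var_p)

# Figures quoted in the comment above: k = 10, sum of pq = 8.6, variance = 17.2
value = kr20_from_summary(10, 8.6, 17.2)
print(round(value, 3))  # 0.556

# Figures quoted in an earlier comment: k = 30, sum of pq = 3.2805, variance = 0.2799
negative_value = kr20_from_summary(30, 3.2805, 0.2799)
print(round(negative_value, 2))
```

The second call comes out at roughly -11.09. The coefficient turns negative whenever the sum of the pq values is larger than the variance of the total scores.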

Hi! What’s the reliability of this

k 118

pq 9.938271605

var 17.83950617

0.651

Arms,

What does 0.651 represent?

Charles

Kindly explain to me why KR-20 is special than KR-21. Under what circumstances should one use either

Kevin,

You should always use KR-20. KR-21 was created in the era prior to computers since it was easier to calculate. Since KR-20 is more accurate you should use KR-20.

Charles

How to compute standard deviation in Item Analysis. Please help. Thanks

Lourence,

Of what statistic do you want standard deviation in Item Analysis?

Please enter your comments on the following webpage or one of the pages that follows from it:

Item Analysis

Charles

Hi I’m working with this KR20 and switching from 15 to 25 items of “similar” type.

Would I just change the 15/14 to 25/24?

as well as multiply the summation I originally had by 25/15?

Doing that is taking me from a KR20 of .6313 down to .3328….

Daniel,

It doesn’t sound like any of these approaches are correct. Perhaps the approach described on the following webpage would be of assistance to you:

https://real-statistics.com/reliability/split-half-methodology/spearman-browns-predicted-reliability/

Charles

I’ve been given this same formula from my professor but they say the n is the constant of proportionality… frustrating.

So in his explanation in going from 5 to 10 items n would be 2.

but in your example you go from 12 items to 24… but in the new formula you use 24 rather than 2 (which would be doubling it).

So i’m getting conflicting things here.

Also under “example 1” on that page suddenly you’re using a formula that is solving for p not p*. Where did that come from? Just another formula?

Daniel,

The first formula on the webpage

https://real-statistics.com/reliability/split-half-methodology/spearman-browns-predicted-reliability/

shows rho’ in terms of rho. Using algebra, you can solve for rho in terms of rho’. In particular, here rho’ is for n = 12 (which is a known value, namely 0.5) and rho is essentially for n = 1. Once we know the value of rho (namely .0769), we can use the original formula (the first formula on the webpage) and solve for rho’ where n = 24 (to get .667).

Charles
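The two steps in this reply can be sketched in Python. The function names are made up for illustration; the formula is the standard Spearman-Brown prediction, with n the factor by which the test length is multiplied.

```python
def spearman_brown(rho, n):
    """Predicted reliability when the test length is multiplied by n."""
    return n * rho / (1 + (n - 1) * rho)

def spearman_brown_inverse(rho_prime, n):
    """Solve the Spearman-Brown formula for rho, given rho' at length factor n."""
    return rho_prime / (n - (n - 1) * rho_prime)

rho_single = spearman_brown_inverse(0.5, 12)   # reliability for n = 1
rho_24 = spearman_brown(rho_single, 24)        # predicted reliability for n = 24
print(round(rho_single, 4), round(rho_24, 3))  # 0.0769 0.667

# Treating n as a ratio instead (24/12 = 2), starting from rho' = 0.5
rho_doubled = spearman_brown(0.5, 2)
print(round(rho_doubled, 3))                   # 0.667
```

Both routes give the same prediction, so using n = 24 relative to a single item and using n = 2 relative to the 12-item test are two ways of writing the same calculation.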

Daniel,

Sorry, I don’t know what your professor has in mind.

Charles

Please help. I used a Scantron machine to scan two versions of the same test. Each has 50 items; the KR-20 is .80 on version A and .13 on version B. Why?

Annie,

I don’t know what a scantron machine is nor what you mean by scanning two versions of the same test.

Why are you using KR20?

Charles

This is quite interesting, Charles. Thanks a lot

I used that formula and got a result of 0.15 which is too low. However, for my questionnaire (seeking respondents’ perceptions) no answer is right or wrong although I awarded 1 for “yes” and 0 for “no”.

Does it mean that the scale is still unreliable?

Robert,

If there are no right or wrong answers, then it doesn’t seem that KR20 would be telling you anything meaningful.

Charles

Is it possible to have a negative coefficient as a result of computing k-r20?

Paul,

I believe that it is possible, although rare. It is clearly not a good result.

Charles

helo sir, can i use K20 if my data is not normally distributed ?

Thank you

Vanni,

KR20 is a measurement. You are not performing any test, and so there is no normality assumption.

Charles

charles,

can i ask for step by step on how to get the standard deviation? pleaseee

Niah,

The formula for the variance is shown in Figure 2. The standard deviation is the square root of the variance.

Charles

hi please help me how to use KR20

Roberto,

The referenced webpage explains how to use KR20. You can also use Cronbach’s alpha, which produces KR20 as well.

Do you have a specific question?

Charles

May I know , if is it correct ?

10/10-1 1-[1-1.6947/7.29

10/9 1-[ -0.6947/7.29

1.11 1-[-0.10]

1.11 (1.1)

= 1.22

Aldin,

I don’t know what these numbers mean. You can use the Real Statistics formula to check your work.

Charles

Charles,

I cannot find the Real Statistics module for the KR-20. It is not an option in the internal consistency directory. Where should I be looking, or does it no longer exist, and computations should be done according to your Example 1? Thanks.

Brian,

You have two choices. (1) Use the KUDER function, as described on the referenced webpage. (2) Use the Cronbach’s Alpha option from the Internal Consistency Reliability data analysis tool. KR-20 is equivalent to Cronbach’s alpha if the data consists only of 0’s and 1’s.

Charles

Thanks!

Dear Charles,

Can KR-20 coefficient be also used in testing internal consistency of an atopic dermatitis questionnaire, wherein a patient is diagnosed with the disease if he fulfills the major criterion (itch) and at least 3/5 minor criteria (onset<2 years old, history of flexural rash, dry skin, history of asthma or hay fever, visible flexural rash)?

Thanks for your help.

Rowena,

I am not familiar with this type of questionnaire, but KR-20 can only be used where each question can only receive a 0 or 1 score (e.g. 0 = wrong answer, 1 = right answer). If this is not the coding that results, you should consider Cronbach’s alpha.

Charles

Sir, I found this very useful for my pilot study.

please is there any excel function to calculate P (B25) as you did to B24.

so I can cross check my calculation.

It is given in Figure 2 of the referenced webpage.

Charles

Hi, I just want to ask how you got the variance?

Krizzia,

I used Excel’s VARP function.

Charles

hi

i have read that kr20 and Cronbach’s alpha are mathematically the same. But when I manually computed for kr20, it is way different from the spss result of Cronbach’s Alpha. Please help!

Liza,

This is true provided the data only takes values 0 and 1. If so, I don’t know why the results from SPSS would be different.

Charles

Can you discuss why you used the population versus the sample variance

Mark,

No reason. You can use either and get the same answer.

Charles

Charles,

When I use VAR(M4:M15) the result is 7.1136 versus VARP(M4:M15) of 6.5208. The KR20 is 0.7682 versus 0.7380 using the sample versus the population variance, respectively. Thanks for letting me know what I’m missing.

Mark,

You need to use VARP (or VAR.P). You can use VAR, but then you need to make other changes to the formula. In any case, the result should be identical to that obtained for Cronbach’s alpha.

Charles
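The point about VAR versus VARP can be illustrated with a short Python sketch on made-up data (not the data from Figure 1). It assumes that the "other changes" amount to using the same kind of variance for the individual questions as for the total scores, which is how Cronbach’s alpha is usually computed. The helper name coefficient is hypothetical.

```python
from statistics import pvariance, variance

def coefficient(var_func, scores):
    """KR-20 / alpha style coefficient that uses var_func for BOTH the
    per-question variances and the variance of the total scores."""
    k = len(scores[0])
    columns = [[row[j] for row in scores] for j in range(k)]
    totals = [sum(row) for row in scores]
    item_var_sum = sum(var_func(col) for col in columns)
    return k / (k - 1) * (1 - item_var_sum / var_func(totals))

# Hypothetical 0/1 data: 5 people, 4 questions
data = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
pop = coefficient(pvariance, data)    # population variances throughout (VAR.P)
samp = coefficient(variance, data)    # sample variances throughout (VAR)

# Mixing the two: population pq values with a sample variance of the totals
k = len(data[0])
sum_pq = sum(pvariance([row[j] for row in data]) for j in range(k))
mixed = k / (k - 1) * (1 - sum_pq / variance([sum(row) for row in data]))

print(round(pop, 4), round(samp, 4), round(mixed, 4))
```

For 0/1 data the population variance of a question equals pq, so the first value is KR-20 as defined on this page. The consistent sample-variance version gives the same number, while the mixed version is larger, which is the same pattern as 0.7682 versus 0.7380 in the comment above.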

Dear Charles,

thank you so much for this helpful website.

I have designed a classification system for medical errors. then I designed fictitious cases and I identified the errors and I asked the raters to classify theses errors based on the newly developed classification system. Can I use Kuder and Richardson Formula 20 and consider the correct classifcation=1 and wrong class=0.

and I am wondering about the number of raters or students required to be able using the Kuder and Richardson Formula 20. I meant, are there any assumptions?

On the other hand, can I use Fliess Kappa as well to measure the inter- rater reliability of the same test?

your help is much appreciated

4-5 raters are sufficient to utilize the KR20?

thanks

Enas,

It is not clear to me why you would use Kuder Richardson 20.

Fleiss’s Kappa could be appropriate (depending on the details).

Charles

Dear Charle,

Thanks for your response. Fleiss Kappa might be appropriate for my data to measure the inter-rater reliability since I will have dichotomous nominal variables. But my concern is about measuring the internal consistency of my classification system which has categories and subcategories. I wanted to convert each category into question and if the rater classified it right (1), wrong (0). I meant each category will be matched with one question. After that, I will have dichotomous variables (nominal) which may suite for Fleiss’s kappa and KR-20. I do not know if I can use the same data to measure both types of reliability (inter-rater reliability and internal consistency)

and my second concern about the sample size required to measure the KR-20.

Thanks Charle,

Enas,

If you are able to view your situation as a questionnaire with many True/False questions where you have the data corresponding to the answers of multiple subjects filling in the questionnaire, then you can use KR20 to measure the internal consistency.

Regarding sample size, first note that KR20 is a special case of Cronbach’s alpha. You can calculate the sample size as described on the following webpage:

https://real-statistics.com/reliability/cronbachs-alpha/cronbachs-alpha-continued/

Charles

Thank you so much, Charles.

Enas

how do you get 6.52802? in your example

Emmanuel,

The answer is shown in cell B24 of Figure 2.

Charles

Hello sir, I applied the kr20 formula for 3 groups of student, ten questions test, 30 students each group. The RO was very different on each group. What could be the reason?

Luis,

I can’t comment without seeing your data. If you send me an Excel file with your data and calculations, I will try to figure out what is going on. You can find my email address at Contact us.

Charles

hello sir Charles can u help me with a link on how to get a more simpler calculation on reliability of research instrument or simple formula with less complex variable

The KR21 formula is simpler and it is described on the referenced webpage.

Charles

Hi Sir can I ask if the Cronbach’s Alpha is also suited in determining the reliability of the pretest I created. The test is a multiple type of test. Thank you.

Kat,

Probably so, but I would need to learn more about the pretest you are conducting.

Charles

Hi sr, what if the computed reliability was 1.099

Maribel,

Reliability should not be larger than 1.

Charles

Prof Charles can u give me the uses of kuder Richardson 20

Bismark,

See the following webpage about Cronbach’s alpha, which is equivalent to Kuder-Richardson for dichotomous data.

Cronbach’s Alpha

Charles

Good day, sir.. if the result is -4.9540, what does it indicate? thank you sir

Joyce,

This indicates that there was an error in the calculation.

Charles

Hi Sir,

I followed the steps above and got a result of 0.77378 on my pilot test… just wanna know if the result means “okay” as in I can conduct it already or do I still need to improve my test questions i,e, I need to get a result of 0.9? or something….

Teresa,

There is not universal agreement on what is a sufficiently good result. To me, .77 seems like a pretty acceptable result.

Charles

Hi Charles, sir. I do have some queries. For a 5-point Likert scale, is it better to compute KR21, since KR20 is to be used with a 2-point scale only? Also, I got a .42 reliability index when computed by product moment (upper/lower half and odd/even) and a 0.63 reliability index when computed by the Spearman-Brown prophecy formula. Is this value considered good for the scale? I learnt from my friends that the r value should be between .7 and .9, and that below .7 it is considered low reliability. Remember, we are dealing with behavioural science.

Sajad,

You should always use KS20. KS21 is an estimate of the KS20 value. It was used in the era prior to computers since it was easier to compute manually.

Charles

ks20???i m constructing a scale on school climate(perceived by secondary school students).

Sajad,

What is your question?

Charles

Good day prof.

Please, if I am using KR21 for yes/no questions, is it possible to use split-half reliability or test-retest reliability?

Amy,

If you are using KR21, why do you want to use split-half or test-retest? Also, KR20 is more accurate than KR21 and should generally be used instead.

Charles

Hi Charles,

Please I want to ask, can KR21 BE MORE than 1 ? when calculating using the KR21 I found a score of 1.033 ? is there any mistake of calculation? Thank you for answering me 🙂

Yassine

Yassine,

No, it shouldn’t be more than 1.

Charles

Hi Charles,

Please can you tell me

Is KR-20 suitable for determining the intra-rater reliability of a screening device that just gives a normal/abnormal outcome?

The device is a tuning fork used in screening for loss of vibration perception and is just being applied to 1 site on the body

I am repeating the test 3 times at the same site in the same session

I am encoding the results to normal = 1, abnormal = 0

Many thanks for your time

David

KR20 is not used for inter-rater reliability.

Charles

I am a Professional counseling student struggling my way through basic statistics. This has been a great help.

Sir Charles,

Is it OK if I encode 1 as favorable answer and 0 as unfavorable answer?

Because our questionnaire would measure only our respondent’s choice.

Thank You.

Seems like a reasonable encoding.

Charles

Dear Prof;

I’m a master’s student. I developed an instrument with dichotomous questions. I checked the reliability using KR-20. For validity, I’m using expert review. Is it enough for the examiner to accept my instrument during my viva? Or do you have any suggestions so that the examiner will accept my instrument without any doubt? I need your advice.

It seems like you are covering the bases, but I can’t comment on what any individual might be looking for.

Charles

Hi, Help please

what if if it is negative?

what shall I do?

thanks

J,

If there are only a finite number of outcomes, you can always make all of them non-negative (e.g. by adding the absolute value of the smallest negative number). If you really need negative values, then probably KR20 and Cronbach’s alpha are not the right measures to use.

Charles

What I mean is the result was negative.

What shall I do?

J,

KR20 is only useful if you aren’t testing multiple concepts. Also you need to make sure you don’t have any reverse coding issues (see Cronbach’s Alpha webpage for more details about these sorts of issues).

If you don’t have any of these issues, then a negative result shows that the questionnaire is not internally consistent. You need to work on the wording of your questionnaire.

Charles

Hi, need help

Can I use KR 20 if my questions are seeking only for the demand of a product? in other words there is no correct or wrong answer.

For example:

what toppings would you like to put in your ice cream?

given answers: syrup, sprinkles, marshmallows, etc.

If KR 20 is not applicable with this, what other reliability formula can you suggest?

Thank you very much.

J,

KR20 only accepts a 0 or 1 answer. There doesn’t need to be a correct/incorrect answer.

If you have more choices, you might be able to use Cronbach’s alpha.

Charles

Hi, need help

Can I use KR 20 if my questions are seeking only for the demand of a product? in other words there is no correct or wrong answer.

For example:

what flavors of ice cream do you mostly want to eat?

given answers: vanilla, chocolate, cheese, mango, etc.

If KR 20 is not applicable with this, what other reliability formula can you suggest?

Thank you very much.

Sir, what do you mean by a ‘homogeneous test’?

Dila,

It means that the questions are too much alike.

Charles

Thank you very much sir, this helped me so much.

SIR, how can i get the VARP?

Cherryl,

VARP is a standard Excel function. You can use VAR.P instead (it is equivalent).

Charles

Can I use Kuder and Richardson to find the reliability of a three-option multiple-choice questionnaire?

Stella,

Yes, provided you use the coding 1 for a correct answer and 0 for an incorrect answer.

Charles

fig2 B25 final step how to calculate kindly explain

The formula is given on the referenced webpage.

Charles