Basic Concepts

The Kuder and Richardson Formula 20 test checks the internal consistency of measurements with dichotomous choices. It is equivalent to performing the split-half methodology on all combinations of questions and is applicable when each question is either right or wrong. A correct answer scores 1 and an incorrect answer scores 0. The test statistic is

$$\rho_{KR20} = \frac{k}{k-1}\left(1 - \frac{\sum_{j=1}^{k} p_j q_j}{\sigma^2}\right)$$

where

k = number of questions

pj = proportion of people in the sample who answered question j correctly

qj = proportion of people in the sample who didn’t answer question j correctly (so qj = 1 - pj)

σ² = variance of the total scores of all the people taking the test = VAR.P(R1) where R1 = array containing the total scores of all the people taking the test.

Values typically range from 0 to 1, although negative values can occur when internal consistency is very poor. A high value indicates reliability, while too high a value (in excess of .90) indicates a homogeneous test (which is usually not desirable).
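The definition above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `kr20` and the toy data are hypothetical (this is not the Real Statistics KUDER function), each row holds one person's 0/1 answers, and the variance is the population variance, as with VAR.P.

```python
def kr20(scores):
    """KR20 for a list of rows, one row of 0/1 item scores per person."""
    n = len(scores)        # number of people
    k = len(scores[0])     # number of questions
    # p_j = proportion of people who answered question j correctly
    p = [sum(row[j] for row in scores) / n for j in range(k)]
    sum_pq = sum(pj * (1 - pj) for pj in p)
    # Population variance of the total scores (same convention as VAR.P)
    totals = [sum(row) for row in scores]
    mean = sum(totals) / n
    var_p = sum((t - mean) ** 2 for t in totals) / n
    return k / (k - 1) * (1 - sum_pq / var_p)

# Hypothetical data: 5 people, 4 questions
toy = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
print(round(kr20(toy), 4))  # 0.8
```

For the toy data, Σpq = 0.8 and σ² = 2, so the statistic is (4/3)(1 - 0.4) = 0.8. The function fails with a division by zero when every person has the same total score, since σ² is then 0.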

Kuder-Richardson Formula 20 is equivalent to Cronbach’s alpha for dichotomous data.
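The equivalence can be checked numerically. The sketch below is an illustration only: the function names and the data are hypothetical, and Cronbach's alpha is taken in its usual form k/(k-1) · (1 - Σ item variances / variance of totals). For 0/1 items the population variance of item j equals pj·qj, which is why the two statistics coincide.

```python
from statistics import pvariance, variance

def kr20(scores):
    n, k = len(scores), len(scores[0])
    p = [sum(row[j] for row in scores) / n for j in range(k)]
    sum_pq = sum(pj * (1 - pj) for pj in p)
    total_var = pvariance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum_pq / total_var)

def cronbach_alpha(scores, var=pvariance):
    """Cronbach's alpha; `var` is applied to every item and to the totals."""
    k = len(scores[0])
    item_vars = [var([row[j] for row in scores]) for j in range(k)]
    total_var = var([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)

# Hypothetical 0/1 data: 6 people, 4 questions
data = [
    [1, 1, 1, 0],
    [1, 0, 1, 1],
    [0, 0, 1, 0],
    [1, 1, 1, 1],
    [0, 0, 0, 0],
    [1, 0, 0, 1],
]

print(kr20(data))
print(cronbach_alpha(data, pvariance))  # population variances throughout
print(cronbach_alpha(data, variance))   # sample variances throughout
```

All three values agree up to floating-point rounding. The choice between population and sample variance does not matter as long as it is applied consistently to the items and to the totals, because the n/(n-1) factor cancels in the ratio. Mixing Σpq with a sample variance of the totals would give a different number.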

Example

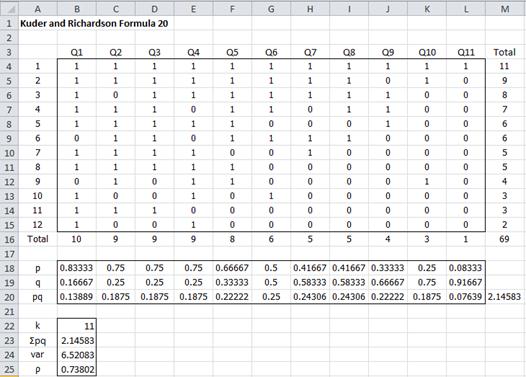

Example 1: A questionnaire with 11 questions is administered to 12 students. The results are listed in the upper portion of Figure 1. Determine the reliability of the questionnaire using Kuder and Richardson Formula 20.

Figure 1 – Kuder and Richardson Formula 20 for Example 1

The values of p in row 18 are the proportion of students who answered that question correctly, e.g. the formula in cell B18 is =B16/COUNT(B4:B15). Similarly, the values of q in row 19 are the proportion of students who answered that question incorrectly, e.g. the formula in cell B19 is =1-B18. The values of pq are simply the product of the p and q values, with the sum given in cell M20.

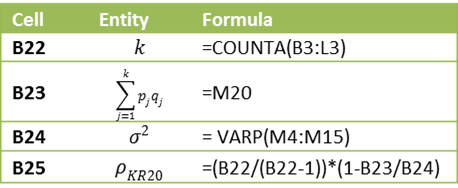

We can calculate ρKR20 as described in Figure 2.

Figure 2 – Key formulas for worksheet in Figure 1

The value ρKR20 = 0.738 shows that the test has high reliability.

Worksheet Function

Real Statistics Function: The Real Statistics Resource Pack provides the following function:

KUDER(R1) = KR20 coefficient for the data in range R1.

For Example 1, KUDER(B4:L15) = .738.

KR-21

Where the questions in a test all have approximately the same difficulty (i.e. the mean score of each question is approximately equal to the mean score of all the questions), then a simplified version of Kuder and Richardson Formula 20 is Kuder and Richardson Formula 21, defined as follows:

$$\rho_{KR21} = \frac{k}{k-1}\left(1 - \frac{\mu\,(k - \mu)}{k\,\sigma^2}\right)$$

where μ is the population mean score (obviously approximated by the observed mean score).

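For Example 1, μ = 69/12 = 5.75, and so

$$\rho_{KR21} = \frac{11}{10}\left(1 - \frac{5.75\,(11 - 5.75)}{11 \cdot 6.52083}\right) \approx 0.637$$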

Note that ρKR21 typically underestimates the reliability of a test compared to ρKR20.
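Because KR-21 needs only three summary numbers, it is easy to check by machine. The following is a minimal sketch, assuming the standard KR-21 form k/(k-1) · (1 - μ(k - μ)/(kσ²)); the function name `kr21` is hypothetical. The inputs are the Example 1 values: k = 11 questions, μ = 69/12, and the population variance of the total scores, 6.52083.

```python
def kr21(k, mean, var_p):
    """KR-21 from the number of items, the mean total score and the
    population variance of the total scores."""
    return k / (k - 1) * (1 - mean * (k - mean) / (k * var_p))

rho_kr21 = kr21(11, 69 / 12, 6.52083)
print(round(rho_kr21, 3))  # 0.637
```

The result, about 0.637, is below the KR-20 value of 0.738 for the same data, which is consistent with the note above.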

Examples Workbook

Click here to download the Excel workbook with the examples described on this webpage.

References

Wikipedia (2012) Kuder-Richardson formulas

https://en.wikipedia.org/wiki/Kuder%E2%80%93Richardson_formulas

In Figure 1, how are the pq values calculated? When I add p and q I get the wrong answer.

Ganesan,

pq is just p multiplied by q. E.g. the formula in cell B20 is =B18*B19.

Charles

Hi Prof Charles,

KR20 can indeed generate negative values, but is it possible to have a negative value of more than 1?

Thank you.

Nazmi,

Once the value is negative, you know that you don’t have internal consistency, and so I have not tried to figure out what the lowest negative value could be.

Charles

Yes, a negative value indicates that the instrument has very poor reliability. So, are you saying that it is possible to get a value below -1? Thank you.

Nazmi,

What I am saying is that it is possible that the value can be negative. I haven’t spent the time to check whether it can be less than -1.

Charles

Noted Prof Charles, thank you

Hi Prof. Charles,

I have four questions

(1). Find the difference between KR20 and KR21

(2). At what point do we use KR20?

(3). At what point do we use KR21?

(4). At what point do we use both?

Kaypat,

KR21 is defined at the bottom of the referenced webpage. It was invented as a measure similar to KR20 but easier to calculate by hand. Given that we now have computers you should never use KR21 and use KR20 instead.

Charles

Thanks Charles for your quick response. Please I need you to demystify the question one by one. The explanation you gave is not detailed and not very clear to me. Thanks in anticipation.

Kaypat,

I think that if you will review the information that I have provided you will be able to figure it out.

Charles

Solve it by your self dude…

sir, when is it necessary to apply kuder richardson 20 reliability instrument test and cronbach alpha in test reliability estimate

Rowland,

These are used to measure the internal consistency of a questionnaire.

Charles

Hi,

How do we improve the reliability of a test.

For Ex. if a test has a reliability of 0.5.How do we improve this

1.Should we remove items which are answered by all candidates correctly

2.Should we remove items which are not answered by any candidate correctly

Hi Sandesh,

In general neither of these approaches will do the trick.

More important is to make sure that the questions are all measuring the same concept. If the questions are measuring different concepts, then calculate a separate KR20 measure for each such group of questions.

Also note that KR20 is equivalent to Cronbach’s Alpha (for dichotomous questions: correct/incorrect). If you look at the Cronbach’s Alpha webpage you will see that you can evaluate Cronbach’s Alpha if any one question is dropped. This might help you. You should also be careful about poorly worded questions and reverse coded questions (as described on that webpage).

Charles

Thanks Charles for replying.

I completely understand that the items should be worded well. I was more interested to understand how do we manage the reliability at an item level.

For Ex: If an item is being answered correctly by all the candidates , this means the difficulty level of this item is easy. Is there a range of values which can be assigned to each item of different difficulty level(easy,medium,difficult), which will help in managing the overall reliability of the assessment

Sandesh,

It is likely that this is true in the extreme cases (e.g. when almost everyone answers some question correctly or incorrectly), but I doubt that it is true in general. In any case, you can test this yourself by seeing whether KR20 increases when removing such questions.

Charles

Thanks Charles

Dear, i would like to ask about one question .

I can’t understand to calculate true and false question in research for validity and reliability. Please answer to me.

Kuder and Richardson 20 can be used for reliability in such cases.

Charles

Dear Charles,

How about items that are not dichotomous (e.g. open-ended questions)? For example, full marks for the item is 3 and some students get 1 or 2 or 3 marks. How can we find the reliability?

Alias,

You can use Cronbach’s Alpha. See example using Likert scale on the following webpage.

Cronbach’s Alpha

Charles

Dear Charles

Generally, What is the acceptable value for the Kuder and Richardson?

Azin,

There isn’t agreement on what acceptable values are, but you can find guidelines on the following webpage:

Cronbach’s Alpha.

Keep in mind that Kuder-Richardson is just a special case of Cronbach’s Alpha.

Charles

Dear charles

Thank you for answer, so we can calculate Kuder and Richardson with SPSS Such as Cronbach’s Alpha?

Azin,

You should be able to use SPSS’s Cronbach’s Alpha to calculate Kuder and Richardson.

Charles

Hello Prof,

I would like to know which methodology is appropriate for a meta analysis data reliability for more than 2 variables?

It depends on the nature of the meta analysis data and the type of reliability. Could be Kuder and Richardson Formula 20.

Charles

The meta analysis is measuring the strength wire based on different material properties and sizes.

Good pm. I would like to know which scores should I use in getting the variance of which scores? since we have the pH and pL, right? or should I get the var of all the test scores?

Thanks a lot!

Gin,

I don’t understand your question. There is no pH and pL on the referenced webpage. Please look at Example 1 on the referenced webpage; it should explain how to calculate the KR20 statistic.

Charles

reliability for research instrument that include 25 items is 0.81 (both kuder 20 and cronbach alpha). but 3 items were excluded from the scale for testing inter-item correlation by testing reliability by alpha. this instrument can be used to conduct research.

Sir i am doing PhD and for this i am using self made tool and for this i have to do the survey about the perception of teachers and i am using Likert scale (5 point) to know their views and opinions… Can i use KR20 and Cronbach’s alpha . pls suggest how to calculate reliability if the data is based on perceptions not actually on true or false answers.

Mamta,

KR20 is not appropriate since it only works with dichotomous data, not 5 point Likert scales. You can use Cronbach’s alpha, as described on the webpage Cronbach’s Alpha.

Charles

Thanks Prof. Charles

Hello, need help

Dry run test

Epq =3.62

var =6.46

KR20=.45 what does it mean?

Final test

Epq =7.04

var =16.19

KR20 = .58what does it mean?

it was a 30-item test with 80 respondent?

Susa,

Clearly the final test had a higher level of reliability than the dry run. Most would say that the reliability of the dry run test was not that high, while that of the final test was at best borderline.

Charles
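As a check on the figures quoted above, and assuming that Epq denotes Σpq, that var is the population variance of the total scores, and that k = 30 items, the KR20 formula reproduces both reported values:

$$\rho_{KR20}^{\text{dry run}} = \frac{30}{29}\left(1 - \frac{3.62}{6.46}\right) \approx 0.45, \qquad \rho_{KR20}^{\text{final}} = \frac{30}{29}\left(1 - \frac{7.04}{16.19}\right) \approx 0.58$$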

Pls on wat conditions can we use the kuder and Richardson formulae 20

Deborah,

The main condition is that the items are dichotomous. See the following webpage for more details:

https://www.google.it/webhp?sourceid=chrome-instant&ion=1&espv=2&ie=UTF-8#

Charles

How do we correlate two tests? Our test was about level of affection. And we surfed the net to search for questionnaires that are almost the same with our questionnaire which is the level of affection. So how do we correlate this test? How do we know if are questionnaire is reliable and does have a correlation with the other test? What formula should we use? Our statistics teacher told us to use Kuder and Richardson but I don’t know how to.

I assume that when you say you want to correlate two tests you mean correlate the data. You can use the CORREL function for this.

You can measure reliability using Kuder and Richardson. This is explained on the referenced webpage, assuming that you have the appropriate data.

Charles

Dear Prof. Charles,

I am a master student. I would like to ask regarding reliability test of my instrument. This instrument is measuring adherence to screening, it consists of 2 closed-ended question, and 4 open-ended questions. So the total question is 6 questions. The close-ended question is scored as 1 (if the answer is ever had screening in past year), and 0 (if the answer is never had screening in past year). The open-ended question is scored as 1 (if the answer is met the criteria of adherence: regarding the time and the reason of undergoing screening), and 0 (if the answer is not met this criteria). The full score of 6 is determined as adhere, while score less than 6 is not adhere. I would like to ask you, could i use the internal consistency with KR 20 formula to check the reliability of this instrument? Thank you very much Prof and i am looking forward your kindly advice.

Regards,

Aira

Aira,

If the coding for the open-ended and closed-ended questions is the same, then you could use KR20 on all 6 questions. Otherwise you need to calculate two values for KR20: one for open-ended questions and another one for closed-ended questions.

Charles

Dear Charles, reliability testing for multiple choice questionnaire (a question of one or two or three correct answers) can be used Kuder and Richardson Formula 21? Thanks.

Dear Dung,

Code each question with 1 if the person answers the question correctly and 0 if the person answers the question incorrectly.

Charles

Yes, so the number of patient for reliability testing will increase? For Kuder and Richardson Formula, how many times does the minimum number of patients than the question? Thanks.

Dung,

Sorry, but I don’t understand your first question. To get an idea about the minimum number of subjects required, see the following webpage:

Sample Size for Cronbach’s Alpha

Note that Kuder-Richardson is just a special case of Cronbach’s Alpha.

Charles

Hello Good Day!

Can you give me 1 example of KR Formula 20 and explanation because I don’t have any idea about this. This will be my report in class. Thanks!

This is already provided on the referenced webpage.

Charles

Is the KR-20 appropriate for scales with only 2 or 3 dichotomous items?

Julie,

KR20 is only viable for dichotomous items (i.e. 2 choices only). In this case it is equivalent to Cronbach’s alpha.

Charles

Good morning Charles,

Thanks for your reply.

I did something wrong with my data. Sorry.

May I know how can I explain with KR 20 ?

For instance,

I will use Cronbach’s alpha based on standardized items to explain instead of Cronbach’s Alpha , right ?

The way I will explain when I use Cronbach’s alpha based on standardized items is similar to Cronbach’s Alpha or not? If not, how ?

Please instruct me . I really appreciate your help .

Thank you

Kim,

I don’t know whether you did something wrong or not with your data. I simply did not have time to try to interpret the data that you sent me.

Sorry, but I also don’t understand your questions. I receive so many questions from so many people, and don’t really have the time to answer unless the questions are clearly worded.

Charles

Inter-Item Correlation Matrix

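|     | V2 | V4 | V5 | V6 | V9 | V10 | V12 |
|-----|----|----|----|----|----|-----|-----|
| V2  | 1.000 | 1.000 | -.049 | .386 | .702 | -.021 | .702 |
| V4  | 1.000 | 1.000 | -.049 | .386 | .702 | -.021 | .702 |
| V5  | -.049 | -.049 | 1.000 | .214 | -.034 | .434 | -.034 |
| V6  | .386 | .386 | .214 | 1.000 | .569 | -.026 | .569 |
| V9  | .702 | .702 | -.034 | .569 | 1.000 | -.015 | 1.000 |
| V10 | -.021 | -.021 | .434 | -.026 | -.015 | 1.000 | -.015 |
| V12 | .702 | .702 | -.034 | .569 | 1.000 | -.015 | 1.000 |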

Cronbach’s Alpha is 0.723 now , there are 7 items chosen totally now as well ( after running KR20 with 16 items at the first time )

Because item V5 & V10 still have negative value. However, I do want to use it as the final result , can’t I? If not, may u explain why not .

( the reason is I have to make sure the No of these items in this test are equal to the no of items in another one, then I will do correlation for language transfer between 2 Vietnamese & English)

Thanks so much !

res

Sorry Kim, but I don’t completely understand the data or your question.

Charles

I would like to know the conditions that call for the use of the Kuder Richardson 21 and 22 formulas and also Cronbach’s Alpha.

Sorry, but I don’t understand what you mean by “the conditions that call for the use of”.

Charles

Simply put, what is the difference between the Kuder Richardson 21 and 22 formulas?

Catherine,

I assume that you mean “what is the difference between Kuder Richardson 21 and 20 formulas?” The KR21 formula is a simplified version of the KR20 formula, which was useful in the days before computers. There is no reason that I can think of for using the KR21 formula. You should use KR20 instead.

Charles

Hi everyone,

I’m doing master of Tesol & thesis now. I got a problem that need your advices.

The answers to the questionnaire are either wrong or right, with 68 participants. Should I use KR20 or Cronbach’s Alpha to do the reliability test for the pilot study?

Thanks so much

Hi Kim,

Yes, you can use KR20 and Cronbach’s Alpha to measure reliability. If the coding you use is 0 for wrong and 1 for right, KR20 and Cronbach’s Alpha will yield the exact same answer.

Charles

Why does exam software (like Examsoft) report KR20 on multiple choice exams if the assumptions for the statistic are dichotomous answer choices? Most Examsoft items have at least 4 choices with only 1 correct answer. Is this a relevant statistic in this scenario?

The coding is not based on the 4 choices but on 1 if the answer is correct or 0 if the answer is incorrect (this is dichotomous).

Charles
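The 0/1 coding described above can be sketched as follows. This is a hypothetical illustration: the answer key, the responses and the function name `score_responses` are invented for the example and do not come from any exam software.

```python
def score_responses(responses, answer_key):
    """Turn raw multiple-choice answers into 0/1 scores:
    1 if the chosen option matches the key, 0 otherwise."""
    return [
        [1 if chosen == correct else 0 for chosen, correct in zip(row, answer_key)]
        for row in responses
    ]

# Hypothetical key for a 4-question test with options A-D
answer_key = ["B", "D", "A", "C"]

# Hypothetical answers from 3 test takers
responses = [
    ["B", "D", "A", "C"],
    ["B", "A", "A", "C"],
    ["C", "D", "B", "A"],
]

scored = score_responses(responses, answer_key)
print(scored)  # [[1, 1, 1, 1], [1, 0, 1, 1], [0, 1, 0, 0]]
```

The resulting matrix has one row per test taker and contains only 0s and 1s, which is the form KR20 expects, no matter how many options each question offered.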

Many thanks Charles.

Hi Charles,

Just wondering! Which of the methods, Cronbach’s alpha or KR20, is better to investigate the reliability of dichotomous questions?

Thanks in advance.

Luke

Luke,

They should give identical results.

Charles

Dear Charles,

Thanks a million for these excellent explanations. I conducted an online poll for my MBA Thesis. Now I tried to compute the Cronbach’s Alpha. As it turned out the variance in my case is 0 (cell B21 in your example) because it was not possible to give a wrong answer, e.g. all participants answered all questions.

As it is not possible to divide through 0 I get an invalid result for the Alpha. My suggestion is to state that the questionnaire provides a perfect reliability. What would be the best way to interpret this fact or did I misunderstand something?

Thanks in advance,

Marc

Marc,

If there aren’t any wrong answers, it is not clear to me why you even want to use Cronbach’s alpha. I guess in this case you could consider the questionnaire reliable.

Charles

Sir Charles,

Can I ask you about Kuder Richardson formula 20?

What is the exact meaning of Kuder Richardson formula 20? Thanks

It is a measure of internal consistency of measurements with dichotomous choices. It produces the exact same result as Cronbach’s alpha when all the choices are dichotomous. See Cronbach’s alpha for more information.

Charles

thanks sir charles

Hello Charles,

You are doing a good job. Keep it up. God bless you.

hello…

I have exercises that consist of multiple choice, true-false and short answer questions. To analyze the scores of this test, which is better: split-half or KR-20? I tried both. With the split-half test (odd-even) the result was unreliable; later I tried KR-20 and the results were reliable. How did this happen?

The test instrument is valid but unreliable. Why can this condition occur?

pls help me with this..thankyou

It is not too surprising in general that two tests would give different results. This is especially true with small samples.

If you send me an Excel file with your data I can try to understand why you are getting such different results in this case. You can find my email address at Contact Us.

Charles

Thankyou fr yr response,

I already sent you my excel file to your contact, pls be considerable to check it

I have now looked at the spreadsheet that you sent me. The value I calculated for KR20 = .0677, which agrees with the value you calculated. I calculated a value of -.052 for split-half (odd-even). These values are both very low, indicating unreliability.

Charles

hi sir charles.

if i am going to solve it on paper what formula should i use for KR20? because there is so many formula on net and i dont know what to use.. i just need a simple example and process of solving using KR20 for my report, tommorow. and kindly give me another example because your sample is easier to understand than other source on net.. hope that you can help me.. thanks a lot.. God bless.

Hi, the example on the referenced webpage provides all the information you need to calculate KR20. You can also download the Excel spreadsheet for this example from Examples Workbooks so that you can see all the formulas better.

Charles

i got the result of my KR21 is 0.983

what does it mean?

do you have a list to show whether the score has high/medium/low reliability?

it would be very helpful..thank you, Charles.

Adrian,

The result indicates extremely high reliability (almost the maximum value), but this is not necessarily good since a value this high indicates homogeneity of questions (i.e. there isn’t much difference between the questions).

Charles

100 students

14 questions

1123 correct answers

sd² = 2.1587

KR-21 = -0.0316

How does one interpret a negative KR-21 result?

Thanks

Josef,

Negative values for KR-21, KR-20 and Cronbach’s Alpha can happen, although in practice they are not so common. They are generally a sign that reliability is very poor.

Charles

Hi,

I’ve tried your formula, but it turns out the KR-20 can be negative if I randomly generate students’ correctness. Can you explain why? According to Wiki, KR-20 range from 0-1, my data is as follow:

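| Student | Q1 | Q2 | Q3 | Q4 | Q5 | Q6 | Q7 | Q8 | Q9 | Q10 | Q11 | Total |
|---------|----|----|----|----|----|----|----|----|----|-----|-----|-------|
| 1 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 3 |
| 2 | 0 | 1 | 1 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 1 | 5 |
| 3 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 6 |
| 4 | 1 | 1 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 0 | 6 |
| 5 | 1 | 0 | 1 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 5 |
| 6 | 1 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 1 | 0 | 1 | 5 |
| 7 | 1 | 1 | 1 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 6 |
| 8 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 0 | 0 | 0 | 0 | 3 |
| 9 | 1 | 1 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 4 |
| 10 | 1 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 4 |
| 11 | 1 | 0 | 1 | 1 | 1 | 1 | 0 | 0 | 0 | 0 | 1 | 6 |
| 12 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 1 | 1 | 4 |
| Total | 7 | 4 | 6 | 8 | 3 | 5 | 5 | 5 | 4 | 3 | 7 | 57 |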

The simple answer is that KR-20 can indeed be negative. This is not so common in practice but it can happen.

Charles
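For reference, the randomly generated data posted above can be run through the KR20 formula from the article. The following is a minimal sketch, assuming the same conventions as the worksheet (p and q as proportions, population variance of the total scores); the function name `kr20` is hypothetical.

```python
def kr20(scores):
    n, k = len(scores), len(scores[0])
    p = [sum(row[j] for row in scores) / n for j in range(k)]
    sum_pq = sum(pj * (1 - pj) for pj in p)
    totals = [sum(row) for row in scores]
    mean = sum(totals) / n
    var_p = sum((t - mean) ** 2 for t in totals) / n  # like VAR.P
    return k / (k - 1) * (1 - sum_pq / var_p)

# The 12 students x 11 questions posted in the comment above
posted = [
    [0, 0, 0, 1, 0, 0, 1, 1, 0, 0, 0],
    [0, 1, 1, 0, 0, 0, 1, 1, 0, 0, 1],
    [0, 0, 1, 1, 0, 0, 0, 1, 1, 1, 1],
    [1, 1, 0, 0, 0, 0, 1, 1, 1, 1, 0],
    [1, 0, 1, 1, 0, 0, 0, 1, 0, 0, 1],
    [1, 0, 0, 1, 0, 1, 0, 0, 1, 0, 1],
    [1, 1, 1, 1, 0, 1, 0, 0, 0, 0, 1],
    [0, 0, 0, 0, 1, 1, 1, 0, 0, 0, 0],
    [1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0],
    [1, 0, 0, 1, 0, 1, 0, 0, 1, 0, 0],
    [1, 0, 1, 1, 1, 1, 0, 0, 0, 0, 1],
    [0, 0, 0, 0, 1, 0, 1, 0, 0, 1, 1],
]

print(round(kr20(posted), 4))  # -1.2222
```

Here Σpq = 361/144 ≈ 2.507 while the variance of the totals is only 1.1875, so the statistic is (11/10)(1 - 19/9) = -11/9 ≈ -1.22. For this data set the value is therefore not only negative but below -1.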

Hello Dear Charles,

I would like to ask you that can we calculate KR 20 by using SPSS?kind provide some reference.

Thanks

Asad,

Probably so, but I don’t use SPSS.

Charles

is KR-20 just for multiple choice question?

KR-20 is not just for multiple choice questions, but it is just for questions which take only two values (e.g. 1 = correct, 0 = incorrect). This includes True/False questions as well.

Charles

What do I do when I only have a single question which is like this?

Justin,

Cronbach’s alpha can be used even when the questions take a value 1 (say for correct) and 0 (say for incorrect). This would cover True/False and multiple choice questions. The problem is that you can’t use Cronbach’s alpha with only one question. After all, Cronbach’s alpha measures internal consistency, but with only one question there is no internal consistency to measure. The same is true for Kuder and Richardson.

Charles

Hello Dear Charles,

Kindly give reference of this

There are lots of references for the KR20 test, including most statistics textbooks as well as many websites on the Internet. The original paper is

Kuder, G. F., & Richardson, M. W. (1937). The theory of the estimation of test reliability. Psychometrika, 2(3), 151–160.

Charles

Hello sir. Thank you for the above info. Very helpful indeed. Please help me understand how you came up with the variance (σ²) of 6.52083. I just can’t figure it out. Thank you sir!

The formula to calculate 6.52083 is shown in Figure 2. I use the VARP function for all the variances. If I had used VAR I would get the same result.

Charles

Pls Charles, can you break it down for me, pls, if possible use figure frm the table.

How did you work VARP(M4:M15)?

Pls i stil don’t understand how the variance was calculated. i.e B24

This is already shown in Figure 2.

Charles

Thanks for your explanation on KR20. Please I calculated the reliability of my research instrument and I got KR20 = 0.65. Can I call this a high reliability? If no, what should be done to the instrument.

Thanks. I will appreciate your quick response please.

Lizzy,

There isn’t complete agreement as to what constitutes “high reliability”, but here are my observations about Cronbach’s alpha. These apply to KR20 as well (since the indices produced are identical):

A commonly-accepted rule of thumb is that an alpha of 0.7 (some say 0.6) indicates acceptable reliability, and 0.8 or higher indicates good reliability. Very high reliability (0.95 or higher) is not necessarily desirable, as this indicates that the items may be entirely redundant. These are only guidelines and the actual value of Cronbach’s alpha will depend on many things. E.g. as the number of items increases, Cronbach’s alpha tends to increase too even without any increase in internal consistency.

Charles

Charles, I was wondering if you could look at a screen-shot I have provided and help explain the results to me. I have many different content areas to work on, but in the following example, I have a 10 question dichotomous exam which was given to about 11,300 students. I am using all the results from these exams to find p, which, is quite low. What I do not understand, is that the higher my variance is, the better my p value, or, the lower my Σpq the higher my p.

KR-20 Example

Obviously, I have a bias; and am sure that our questions are reliable, but, the numbers appear to say otherwise. The only other thing I can think of, is that our exam is random. So while we have 10 questions per exam, Q1 may be different than the next student’s Q1, although the question is designed to measure the same knoweldge of the given topic. Do I need to use this formula with a static exam? Where Q1 is the exact same question for all student’s?

Your assistance is much appreciated, Thanks

Tony,

KR20 and Cronbach’s Alpha assume that Q1 is the same for all the students. If this is not the case, then I wouldn’t rely on the KR20 or Cronbach’s alpha measures.

Charles

Thank you Charles. Due to the fact, that we provide randomization w/ our questions, would you be able to provide a plausible approach for measuring the reliability/consistency of our examinations? When we run a t-test; our results indicate a high probability that our results correlate. Aside from Pearson’s R/Spearman (which can change based on how we collect/sort data) is there another method we can use to prove internal consistency?

Sorry Tony, but I don’t know of an approach in this case.

Charles

We gave the exact same 100 question exam to students in consecutive classes (1000 students in both 2013 and 2014). The calculated KR21 values for each class were equal to within 3 digits (0.8763 vs 0.8765). I assume this tells me something about the student populations. What does it mean?

Mark,

The results seem to show high internal consistency of the exam. If the 1,000 students are independent, then the similarity of the KR21 scores is not surprising.

Charles

Dear Dr. Charles,

First of all I would like to express my gratitude for your precious efforts. This page is a gold mine for people like me.

I wish to use Kuder and Richardson Formula for a number of tests for language learning. Do you think I should use any other formulas or would KR-20 fit for my purposes.

Here are my tests:

Test A: Translation

1- DOG: …………….. (Write the meaning in your first language)

Test B: Multiple Choice

Bow Wow – What animal is this?

a) CAT b)DOG c)…… etc.

Test C: Fill in the blank

A …………. barks.

KR20 only supports dichotomous questions (e.g. 1 for correct answer and 0 for incorrect answer). This seems to be the case with the three questions you are posing, and so KR20 could be used. You can also use Cronbach’s Alpha.

Whether KR20 (or Cronbach’s Alpha) is a fit for your purposes depends of course on what you are trying to do (which is not stated in your comment).

Charles

Thank you Charles, I really appreciate your response. I aim to measure learners existing vocabulary knowledge on some technical engineering vocabulary the above examples have been given to show you my test formats.

Thanks Charles for this information. I do have a question; is it possible to calculate the KR20 using the split-half method? When I attempt, I’m always getting a number higher than 0-1 ..i.e… 1.9, etc.

Jarvis,

I don’t understand what it means to calculate KR20 using the split-half method or why you would want to do so. If you calculate KR20 as described on the referenced webpage you should get a value no higher than 1.

Charles

dear admin,

i just want to ask how to calculate the varices just give me the example

The formulas for the variances are shown in Figure 2 of the referenced webpage. If you download the Examples Workbook you can see all the formulas used for this example (and all the others shown on the website). You can download this file for free at https://real-statistics.com/free-download/real-statistics-examples-workbook/

Charles

good evening.. just wana ask ..how did you get the answer in the example shown above , where VAR is equal to 6.52083.. please. thankyou so much 🙂 🙂

Judy,

The answer is shown in Figure 2 of the referenced webpage. I used the VARP function.

Charles

Hello Charles,

How could I know if the coeficient KR20 is high or low? could you send me a reference from any author?

thanks

Hello Raphaela,

There doesn’t seem to be a common view on this, but I most frequently see a cutoff of .6 or .7. The following sources call for a cutoff of .60:

http://chitester.wordpress.com/instructor-guide/section-4-reporting-features/test-and-item-analysis/

http://www.umaryland.edu/cits/services/testscoring/umbtestscoring_testanditemanalysis.pdf

Charles

Dear Charles,

I have calculated the KR 21 of my pretest and post test in Excel. I found the results are 0.3 and 0.5. How do I know if my tests are reliable or not?

Mary,

These are not generally viewed to be very high figures, and so there is doubt about the reliability.

Although the values probably won’t be that different, I suggest that you use KR20 (or Cronbach’s alpha), which is more accurate.

Charles

hi, thanks, the information was worthy.

What if I want to test the reliability of a self-designed questionnaire to test participant’s knowledge and attitude on some theme/subject like hepatitis, questionnaire has multiple choices however no choice is right.

Salima,

If no choice is right, how do you evaluate the answers given? Please provide more information.

Charles