Basic Concepts

Divergence is a measure of the difference between two probability distributions. It is often used to measure how far the distribution of a sample is from a known probability distribution.

A divergence is always non-negative, and it equals zero when the two probability distributions are equal.

We consider three measures of divergence.

Kullback-Leibler Divergence (KL)

Given two finite random variables x and y with corresponding data elements x1, …, xn and y1, …, yn, Kullback-Leibler divergence takes the form

$$KL(x\,\|\,y) = \sum_{i=1}^{n} x_i \ln\frac{x_i}{y_i}$$

We assume that when yi = 0, then also xi = 0, in which case we set ln(xi/yi) = 0. More generally, any term with xi = 0 contributes 0 to the sum, following the convention 0 ln 0 = 0. Note too that we assume that x1, …, xn and y1, …, yn are the values of discrete probability distributions, and so each set of values must sum to one. Thus, you may need to replace each xi by

$$\frac{x_i}{\sum_{j=1}^{n} x_j}$$

and similarly for the yi.
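The definition and the normalization step above can be sketched in Python as follows. This is a minimal illustration, not a reference implementation: the function names `normalize` and `kl_divergence` and the `base` parameter are hypothetical, and the choice to return infinity when some xi > 0 has yi = 0 (the case the text assumes away) is an assumption.

```python
import math

def normalize(values):
    """Replace each value v by v / (sum of all values) so the result sums to one."""
    total = sum(values)
    if total <= 0:
        raise ValueError("values must have a positive sum")
    return [v / total for v in values]

def kl_divergence(x, y, base=math.e):
    """KL(x||y) = sum of x_i * log(x_i / y_i) after normalizing x and y.

    Terms with x_i == 0 are skipped (the 0 ln 0 = 0 convention), which also
    covers the case x_i == y_i == 0. If x_i > 0 but y_i == 0, the assumption
    in the text is violated; this sketch returns math.inf in that case.
    """
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    p, q = normalize(x), normalize(y)
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0:
            continue
        if qi == 0:
            return math.inf
        total += pi * math.log(pi / qi, base)
    return total

# Hypothetical raw counts; they are normalized before the sum is taken.
x = [10, 20, 30, 40]
y = [25, 25, 25, 25]
print(kl_divergence(x, y))  # ≈ 0.1064 (nats)
```

Because the counts are normalized inside the function, x and y can be raw frequencies rather than probabilities.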

We can also use the notation KL(p||q), where p is the pdf whose values are x1, …, xn and q is the pdf whose values are y1, …, yn. KL(p||q) can be viewed as the information gained by using p instead of q. From a Bayesian perspective, it measures the information gained when revising your beliefs from the prior distribution q to the posterior distribution p.

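Note that KL(p||q) and KL(q||p) are not necessarily equal, i.e. KL divergence is not symmetric. Also, the natural log can be replaced by a log of base 2, in which case the divergence is measured in bits rather than nats.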
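Both points above can be checked numerically. The following sketch assumes p and q already sum to one and that qi > 0 wherever pi > 0; the helper name `kl` and the example distributions are illustrative only.

```python
import math

def kl(p, q, base=math.e):
    """KL(p||q) for distributions that already sum to one.

    Assumes q_i > 0 wherever p_i > 0; terms with p_i == 0 contribute 0.
    """
    return sum(pi * math.log(pi / qi, base) for pi, qi in zip(p, q) if pi > 0)

p = [0.1, 0.2, 0.3, 0.4]
q = [0.25, 0.25, 0.25, 0.25]

print(kl(p, q))          # ≈ 0.1064 nats
print(kl(q, p))          # ≈ 0.1218 nats, so KL(p||q) != KL(q||p)
print(kl(p, q, base=2))  # ≈ 0.1536 bits, i.e. KL(p||q) / ln 2
```

Changing the base only rescales the result by a constant factor (1/ln 2 for bits), so it does not change which of two distributions is closer to a reference.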

Jensen-Shannon Divergence (JS)

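For two finite random variables x and y with corresponding data elements x1, …, xn and y1, …, yn, Jensen-Shannon divergence takes the form

$$JS(x\,\|\,y) = \frac{1}{2}KL(x\,\|\,m) + \frac{1}{2}KL(y\,\|\,m)$$

where m1, …, mn are given by mi = (xi + yi)/2. Unlike KL divergence, JS divergence is symmetric, i.e. JS(x||y) = JS(y||x).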
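A minimal Python sketch of JS divergence built on KL divergence, assuming the inputs already sum to one; the function names are hypothetical. Since mi > 0 whenever xi > 0 or yi > 0, the KL terms never divide by zero, so JS stays finite even when one distribution is zero where the other is not.

```python
import math

def kl(p, q):
    """KL(p||q) in nats; terms with p_i == 0 contribute 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def js_divergence(p, q):
    """JS(p||q) = 0.5*KL(p||m) + 0.5*KL(q||m), where m_i = (p_i + q_i) / 2."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

p = [0.1, 0.2, 0.3, 0.4]
q = [0.25, 0.25, 0.25, 0.25]
print(js_divergence(p, q), js_divergence(q, p))  # the two values are equal

# Non-overlapping distributions: KL would be infinite, JS equals ln 2.
print(js_divergence([1, 0], [0, 1]))  # ≈ 0.6931
```

With the natural log, ln 2 is the largest value JS divergence can take, reached when the two distributions have no values in common.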

(Credit Scoring) Divergence Statistic

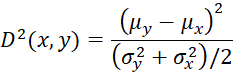

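For two finite random variables x and y with corresponding data elements x1, …, xn and y1, …, yn, this divergence statistic takes the form

$$D = \frac{(\bar{x} - \bar{y})^2}{\frac{1}{2}\left(s_x^2 + s_y^2\right)}$$

where x̄ and ȳ are the means and s_x² and s_y² are the variances of the x and y data elements.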

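This metric is used in the credit scoring industry, typically to measure how well a scorecard separates the score distributions of good and bad accounts. It is essentially the square of the effect size (Cohen's d) for the two-sample t-test when the two variances are weighted equally, i.e. when the pooled standard deviation is √((s_x² + s_y²)/2).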
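The statistic and its relationship to the effect size can be sketched as below. This assumes sample variances (denominator n − 1, as returned by `statistics.variance`); using population variances would give a slightly different value. The score data are hypothetical and serve only to illustrate the arithmetic.

```python
import math
from statistics import mean, variance

def divergence_statistic(x, y):
    """D = (mean(x) - mean(y))**2 / ((var(x) + var(y)) / 2), with sample variances."""
    return (mean(x) - mean(y)) ** 2 / ((variance(x) + variance(y)) / 2)

def equal_weight_effect_size(x, y):
    """Effect size with the two variances weighted equally in the pooled SD."""
    pooled_sd = math.sqrt((variance(x) + variance(y)) / 2)
    return (mean(x) - mean(y)) / pooled_sd

# Hypothetical scores for good and bad accounts.
good = [620, 650, 680, 700, 720]
bad = [560, 590, 600, 630, 650]

D = divergence_statistic(good, bad)
d = equal_weight_effect_size(good, bad)
print(D)      # ≈ 3.2911 (= 68**2 / 1405)
print(d * d)  # same value as D, up to rounding
```

Here the means are 674 and 606 and the sample variances are 1580 and 1230, so D = 68² / 1405.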
