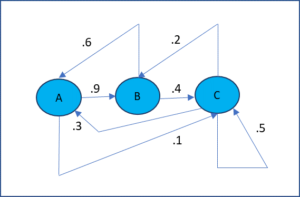

In a Markov chain, there is a set of states, and the process moves from one state to another according to fixed transition probabilities. Figure 1 displays a Markov chain with three states. For example, the probability of a transition from state C to state A is 0.3, from C to B is 0.2, and from C to C is 0.5. These sum to 1, as expected, since at each step the process must move to some state (possibly the one it is already in).

Figure 1 – Markov Chain transition diagram
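The transition probabilities in such a diagram can be written as a table with one row per current state. The following is a minimal Python sketch, not a definitive implementation: only the row for state C is taken from the text above, while the rows for A and B are made-up placeholder values, and the names `P` and `is_valid_transition_table` are chosen here for illustration.

```python
import math

# Transition probabilities stored as P[current][next].
# Row "C" comes from the text; rows "A" and "B" are hypothetical
# placeholders, since the text does not state their values.
P = {
    "A": {"A": 0.6, "B": 0.3, "C": 0.1},  # hypothetical
    "B": {"A": 0.2, "B": 0.5, "C": 0.3},  # hypothetical
    "C": {"A": 0.3, "B": 0.2, "C": 0.5},  # from the text
}


def is_valid_transition_table(table):
    """Return True if every row is a probability distribution over the states."""
    states = set(table)
    for row in table.values():
        # Each row must list a probability for every state.
        if set(row) != states:
            return False
        # Probabilities cannot be negative.
        if any(p < 0 for p in row.values()):
            return False
        # The probabilities out of a state must sum to 1.
        if not math.isclose(sum(row.values()), 1.0):
            return False
    return True
```

Calling `is_valid_transition_table(P)` checks the "sum to 1" condition for every state at once. `math.isclose` is used instead of `==` because sums of floating-point values may differ from 1 by a tiny rounding error.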

The key characteristic of a Markov chain is that at any stage the next state depends only on the current state and not on the states that came before it; in this sense the process is memoryless.
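The memoryless property can be illustrated with a short simulation. The Python sketch below is written under the same assumptions as the three-state example: the row for state C uses the probabilities given in the text, the rows for A and B are hypothetical, and the function names `next_state` and `simulate` are illustrative. The point to notice is that `next_state` receives only the current state; the earlier part of the path is never consulted.

```python
import random

# P[current][next]; row "C" is from the text, rows "A" and "B" are hypothetical.
P = {
    "A": {"A": 0.6, "B": 0.3, "C": 0.1},  # hypothetical
    "B": {"A": 0.2, "B": 0.5, "C": 0.3},  # hypothetical
    "C": {"A": 0.3, "B": 0.2, "C": 0.5},  # from the text
}


def next_state(current, rng):
    """Draw the next state using only the current state's row of P."""
    targets = list(P[current])
    weights = [P[current][t] for t in targets]
    return rng.choices(targets, weights=weights, k=1)[0]


def simulate(start, n_steps, seed=0):
    """Return a path of n_steps transitions beginning at `start`."""
    rng = random.Random(seed)  # seeded so that runs are reproducible
    path = [start]
    for _ in range(n_steps):
        # Only path[-1], the current state, influences the next draw.
        path.append(next_state(path[-1], rng))
    return path
```

For example, `simulate("C", 10)` returns a list of 11 states starting with `"C"`. Over many draws from state C, roughly 30% of the transitions should go to A, matching the probability stated above.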
