Introduction

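For the regression of $y$ on $x_1, x_2, x_3, x_4$, the partial correlation between $y$ and $x_1$ is

$$r_{yx_1 \cdot x_2x_3x_4} = \frac{\operatorname{cov}(y, x_1 \mid x_2, x_3, x_4)}{\sqrt{\operatorname{var}(y \mid x_2, x_3, x_4)\,\operatorname{var}(x_1 \mid x_2, x_3, x_4)}}$$
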
This can be calculated as the correlation between the residuals of the regression of $y$ on $x_2, x_3, x_4$ and the residuals of the regression of $x_1$ on $x_2, x_3, x_4$.
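As a minimal sketch of this residual approach (assuming NumPy; `partial_corr` is a hypothetical helper name, not a Real Statistics function), the code regresses both $y$ and $x_1$ on the control variables, each with an intercept, and then correlates the two residual vectors.

```python
import numpy as np


def partial_corr(y, x1, controls):
    """Partial correlation of y and x1 given the control variable(s).

    `controls` is a 1-D array (one control) or a 2-D array with one
    column per control variable (e.g. x2, x3, x4).
    """
    y = np.asarray(y, dtype=float)
    x1 = np.asarray(x1, dtype=float)
    n = len(y)
    # Design matrix: intercept column plus the control variables
    Z = np.column_stack([np.ones(n), controls])
    # Residuals of y regressed on the controls (ordinary least squares)
    res_y = y - Z @ np.linalg.lstsq(Z, y, rcond=None)[0]
    # Residuals of x1 regressed on the same controls
    res_x1 = x1 - Z @ np.linalg.lstsq(Z, x1, rcond=None)[0]
    # The partial correlation is the ordinary correlation of the residuals
    return np.corrcoef(res_y, res_x1)[0, 1]
```

With a single control variable $x_2$, the result matches the familiar closed form $(r_{yx_1} - r_{yx_2}r_{x_1x_2})/\sqrt{(1-r_{yx_2}^2)(1-r_{x_1x_2}^2)}$.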

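For a time series, the $h$th-order partial autocorrelation is the partial correlation of $y_i$ with $y_{i-h}$, conditional on $y_{i-1}, \ldots, y_{i-h+1}$, i.e.

$$\text{PACF}(h) = \frac{\operatorname{cov}(y_i, y_{i-h} \mid y_{i-1}, \ldots, y_{i-h+1})}{\sqrt{\operatorname{var}(y_i \mid y_{i-1}, \ldots, y_{i-h+1})\,\operatorname{var}(y_{i-h} \mid y_{i-1}, \ldots, y_{i-h+1})}}$$
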
For $h = 1$ there are no intermediate values to condition on, so the first-order partial autocorrelation is simply the first-order autocorrelation.
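The definition can be turned directly into an estimator: regress both $y_i$ and $y_{i-h}$ on the intermediate lags and correlate the residuals. The sketch below assumes NumPy and uses a hypothetical function name, `pacf_regression`; it is not the Real Statistics implementation, and in finite samples its values generally differ somewhat from the matrix-based estimate $p_k$ described in the Definitions section.

```python
import numpy as np


def pacf_regression(y, h):
    """Lag-h PACF as the correlation between the residuals of y_i and y_{i-h},
    each regressed (with intercept) on y_{i-1}, ..., y_{i-h+1}. Requires h >= 1."""
    y = np.asarray(y, dtype=float)
    n = len(y)
    lead = y[h:]        # y_i     for i = h, ..., n-1
    lagged = y[:n - h]  # y_{i-h} for the same i
    if h == 1:
        # Nothing to condition on: this is the plain lag-1 correlation
        return np.corrcoef(lead, lagged)[0, 1]
    # Columns y_{i-1}, ..., y_{i-h+1}, aligned with `lead`
    mids = np.column_stack([y[h - j:n - j] for j in range(1, h)])
    Z = np.column_stack([np.ones(n - h), mids])
    res_lead = lead - Z @ np.linalg.lstsq(Z, lead, rcond=None)[0]
    res_lag = lagged - Z @ np.linalg.lstsq(Z, lagged, rcond=None)[0]
    return np.corrcoef(res_lead, res_lag)[0, 1]
```

For a long simulated AR(1) series with coefficient 0.6, lag 1 should come out near 0.6 and lags 2 and beyond near zero.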

Definitions

We can also calculate the partial autocorrelations as in the following alternative definition.

Definition 1: For k > 0, the partial autocorrelation function (PACF) of order k, denoted $\pi_k$, of a stochastic process is defined as the kth element in the column vector

$$\Gamma_k^{-1}\delta_k$$

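Here, $\Gamma_k$ is the k × k autocovariance matrix $\Gamma_k = [v_{ij}]$ where $v_{ij} = \gamma_{|i-j|}$ (so its entries run over lags 0 through k − 1, with $\gamma_0$ on the main diagonal), and $\delta_k$ is the k × 1 column vector $\delta_k = [\gamma_i]$ for i = 1, …, k. We also define $\pi_0 = 1$. We can also define $\pi_{ki}$ to be the ith element of the vector $\Gamma_k^{-1}\delta_k$, so that $\pi_k = \pi_{kk}$.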

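Provided $\gamma_0 > 0$, the partial autocorrelation function of order k is also equal to the kth element in the following column vector (since $\Gamma_k = \gamma_0\Sigma_k$ and $\delta_k = \gamma_0\tau_k$, the factors of $\gamma_0$ cancel):

$$\Sigma_k^{-1}\tau_k$$
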
Here, $\Sigma_k$ is the k × k autocorrelation matrix $\Sigma_k = [\omega_{ij}]$ where $\omega_{ij} = \rho_{|i-j|}$, and $\tau_k$ is the k × 1 column vector $\tau_k = [\rho_i]$.

Note that if $\gamma_0 > 0$ and $\gamma_h \to 0$ as $h \to \infty$, then $\Sigma_k$ and $\Gamma_k$ are invertible for all k.
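A minimal NumPy sketch of Definition 1 follows; the function name `pacf_from_acvf` is hypothetical. It builds $\Gamma_k$ and $\delta_k$ from a list of autocovariances $\gamma_0, \dots, \gamma_k$ and solves $\Gamma_k x = \delta_k$ rather than forming the inverse. Note that $\Gamma_k$ uses lags 0 through k − 1, while $\delta_k$ uses lags 1 through k.

```python
import numpy as np


def pacf_from_acvf(gamma, k):
    """pi_k: the k-th element of Gamma_k^{-1} delta_k (Definition 1).

    gamma holds the autocovariances gamma_0, gamma_1, ..., gamma_k.
    """
    if k == 0:
        return 1.0  # pi_0 = 1 by definition
    gamma = np.asarray(gamma, dtype=float)
    idx = np.arange(k)
    # Gamma_k[i, j] = gamma_|i-j|: lags 0..k-1, gamma_0 on the diagonal
    Gamma_k = gamma[np.abs(idx[:, None] - idx[None, :])]
    # delta_k = [gamma_1, ..., gamma_k]
    delta_k = gamma[1:k + 1]
    # Solve Gamma_k x = delta_k instead of forming the inverse explicitly
    return np.linalg.solve(Gamma_k, delta_k)[-1]
```

For an AR(1) process with coefficient φ = 0.5 and unit noise variance, $\gamma_h = 0.5^h/(1 - 0.25)$. The function then returns 0.5 (up to rounding) at lag 1 and values that are zero up to rounding at lags 2 and beyond. Passing the autocorrelations $\rho_h = \gamma_h/\gamma_0$ instead gives the same values, because $\gamma_0$ cancels.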

The partial autocorrelation function (PACF) of order k of a time series, denoted $p_k$, is defined in a similar manner as the last element of the column vector

$$R_k^{-1}C_k$$

Here $R_k$ is the k × k matrix $R_k = [s_{ij}]$ where $s_{ij} = r_{|i-j|}$, and $C_k$ is the k × 1 column vector $C_k = [r_i]$.

We also define $p_0 = 1$ and $p_{ki}$ to be the ith element of the vector $R_k^{-1}C_k$, so that $p_k = p_{kk}$. These values can also be calculated from the sample autocovariance matrix of the time series, in the same manner as described above for stochastic processes.
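The sample version can be sketched the same way. The code assumes the usual sample autocovariance $s_h = \frac{1}{n}\sum_{i=1}^{n-h}(y_i-\bar y)(y_{i+h}-\bar y)$ and $r_h = s_h/s_0$. This is an assumption here, since this section does not restate how $r_h$ is computed. `sample_acf` and `sample_pacf` are hypothetical names and are not the Real Statistics PACF worksheet function.

```python
import numpy as np


def sample_acf(y, max_lag):
    """r_0, ..., r_max_lag with r_h = s_h / s_0 and a 1/n divisor in s_h (assumed)."""
    y = np.asarray(y, dtype=float)
    n = len(y)
    d = y - y.mean()
    s = np.array([d[:n - h] @ d[h:] / n for h in range(max_lag + 1)])
    return s / s[0]


def sample_pacf(y, k):
    """p_k: the last element of R_k^{-1} C_k."""
    if k == 0:
        return 1.0  # p_0 = 1 by definition
    r = sample_acf(y, k)
    idx = np.arange(k)
    R_k = r[np.abs(idx[:, None] - idx[None, :])]  # R_k[i, j] = r_|i-j|
    C_k = r[1:k + 1]                              # C_k = [r_1, ..., r_k]
    return np.linalg.solve(R_k, C_k)[-1]
```

As a check, solving the 1 × 1 and 2 × 2 systems by hand gives $p_1 = r_1$ and $p_2 = (r_2 - r_1^2)/(1 - r_1^2)$.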

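Observation: Let Y be the k × 1 vector $Y = [y_1\ y_2\ \dots\ y_k]^T$ and $Z = [y_1-\mu\ \ y_2-\mu\ \dots\ y_k-\mu]^T$, where $\mu = E[y_i]$ is the common mean of the (stationary) process. Then $\operatorname{cov}(Y) = E[ZZ^T]$, which is equal to the k × k autocovariance matrix $\Gamma_k = [v_{ij}]$ where $v_{ij} = \gamma_{|i-j|}$.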

Example

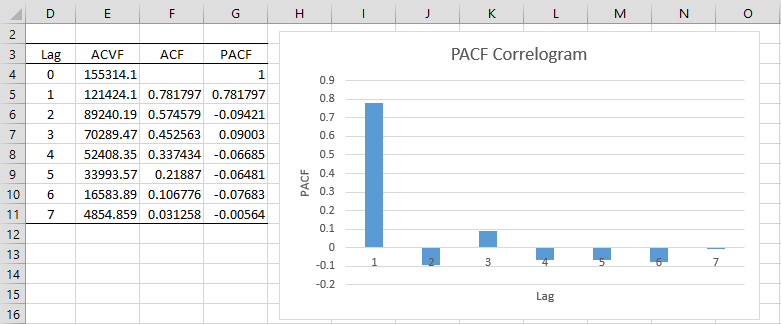

Example 1: Calculate the PACF for lags 1 to 7 for Example 2 of Autocorrelation Function.

We show the result in Figure 1.

Figure 1 – PACF

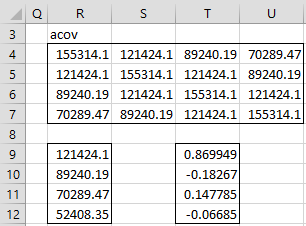

We now show how to calculate PACF(4) in Figure 2. The calculations of the other PACF values are similar.

Figure 2 – Calculation of PACF(4)

First, we note that range R4:U7 of Figure 2 contains the 4 × 4 autocovariance matrix for k = 4 (lags 0 through 3). This is a symmetric matrix, all of whose values come from range E4:E7 of Figure 1. The values on the main diagonal are $s_0$, the values on the diagonals immediately above and below the main diagonal are $s_1$, the values on the diagonals two units away are $s_2$, and, finally, the values in the upper-right and lower-left corners of the matrix are $s_3$.

Range R9:R12 is identical to range E5:E8, i.e. it contains $s_1$ through $s_4$. The values in range T9:T12 can now be calculated using the array formula

=MMULT(MINVERSE(R4:U7),R9:R12)

The values in range T9:T12 are the $p_{4i}$ values, and so we see that PACF(4) = $p_4$ = -0.06685 (cell T12).
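The worksheet steps of Figure 2 can be mirrored in NumPy roughly as follows. This is a sketch. `pacf_vector` is a hypothetical name, and the input is assumed to hold $s_0, \dots, s_k$ in the order of column E. `np.linalg.inv` plays the role of MINVERSE, and the `@` product plays the role of MMULT.

```python
import numpy as np


def pacf_vector(s, k):
    """[p_k1, ..., p_kk] from s = [s_0, ..., s_k], mirroring Figure 2.

    For k = 4 this corresponds to =MMULT(MINVERSE(R4:U7),R9:R12).
    """
    s = np.asarray(s, dtype=float)
    idx = np.arange(k)
    acov = s[np.abs(idx[:, None] - idx[None, :])]  # like R4:U7: lags 0..k-1
    rhs = s[1:k + 1]                               # like R9:R12: lags 1..k
    return np.linalg.inv(acov) @ rhs               # MINVERSE, then MMULT
```

For k = 4 the returned vector corresponds to T9:T12, and its last element corresponds to cell T12. Outside a spreadsheet, `np.linalg.solve(acov, rhs)` is the numerically preferable equivalent of inverting and then multiplying.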

Observations

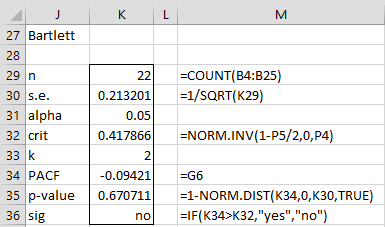

See Correlogram for information about the standard error and confidence intervals of the $p_k$, as well as how to create a PACF correlogram that includes the confidence intervals.

We see from the chart in Figure 1 that the PACF values for lags 2, 3, … are close to zero. As can be seen in Partial Autocorrelation for an AR(p) Process, this is typical for a time series derived from an autoregressive process. Note too that we can use Property 3 of Autocorrelation Function to test whether the PACF values for lags 2 and beyond are significantly different from zero (see Figure 3).

Figure 3 – Bartlett’s test for PACF

The test shows that PACF(2) is not significantly different from zero. Note that, where applicable, we can also use Properties 4 and 5 of Autocorrelation Function to test PACF values.
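A test of a single PACF value can be sketched in code. The sketch assumes that, under the null hypothesis, $p_k$ is approximately normal with mean 0 and standard error $1/\sqrt{n}$. This is the usual large-sample approximation, so check it against Property 3 of Autocorrelation Function before relying on it. `pacf_z_test` is a hypothetical name.

```python
import math


def pacf_z_test(p_k, n, alpha=0.05):
    """Two-sided test of H0: PACF(k) = 0.

    Assumes p_k is approximately N(0, 1/n) under H0 (large-sample approximation).
    Returns (z statistic, p-value, reject H0?).
    """
    se = 1 / math.sqrt(n)
    z = p_k / se
    # erfc(|z| / sqrt(2)) equals 2 * (1 - Phi(|z|)), the two-sided normal p-value
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value, p_value < alpha
```

For example, with n = 100 a value $p_k = 0.3$ gives z = 3 and a two-sided p-value of about 0.0027.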

Worksheet Functions

Real Statistics Functions: The Real Statistics Resource Pack supplies the following function, where R1 is a column array or range containing time series data:

PACF(R1, k) – the PACF value at lag k

Real Statistics also provides the following array functions:

ACOV(R1, k) – the autocovariance matrix at lag k

ACORR(R1, k) – the autocorrelation matrix at lag k

Non-negative definite

Property 1: The autocovariance matrix is non-negative definite (see Positive Definite Matrices).

Proof: Let $Y = [y_1\ y_2\ \dots\ y_k]^T$ and let $\Gamma$ be the k × k autocovariance matrix $\Gamma = [v_{ij}]$ where $v_{ij} = \gamma_{|i-j|}$. As we observed previously, $\Gamma = E[ZZ^T]$.

Now let X be any k × 1 vector. Then, since $X^TZ$ is a scalar,

$$X^T\Gamma X = X^T E[ZZ^T]\,X = E[X^TZZ^TX] = E[(X^TZ)^2] \ge 0$$

which completes the proof.
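Property 1 can also be checked numerically for the sample autocovariance matrix. The sketch below assumes NumPy and the $1/n$ divisor for $s_h$; with that divisor the sample matrix is also non-negative definite. It tests the eigenvalues of the matrix, and a quadratic form $X^T\Gamma X$ can be checked the same way. The helper names are hypothetical.

```python
import numpy as np


def sample_acov_matrix(y, k):
    """k x k matrix [s_|i-j|] built from s_0, ..., s_{k-1} (1/n divisor, k <= len(y))."""
    y = np.asarray(y, dtype=float)
    n = len(y)
    d = y - y.mean()
    s = np.array([d[:n - h] @ d[h:] / n for h in range(k)])
    idx = np.arange(k)
    return s[np.abs(idx[:, None] - idx[None, :])]


def is_nonneg_definite(M, tol=1e-10):
    """A symmetric matrix is non-negative definite iff all its eigenvalues are >= 0."""
    return bool(np.all(np.linalg.eigvalsh(M) >= -tol))
```

For instance, `is_nonneg_definite(sample_acov_matrix(y, 6))` is expected to return True for a series `y` with at least 6 values. By contrast, the symmetric matrix [[1, 2], [2, 1]], whose eigenvalues are 3 and -1, fails the check.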

Examples Workbook

Click here to download the Excel workbook with the examples described on this webpage.


Charles,

Are we able to download the spreadsheet you used for the calculations above?

Cheers

Matt

Yes Matt,

See Real Statistics Examples Workbooks

Charles

Partial autocorrelation based on your formula does not coincide with the values calculated by regression and by MATLAB, so there is something wrong. For example, the partial autocorrelation coefficient for lag 4 is equal to -0.014080887922827, not -.06685.

Dear Charles Zaiontz,

In Definition 1, you mentioned "For k > 0 …".

However, in Example 1 you used a 4×4 matrix covering lags 0 to 3, multiplied by a 4×1 matrix covering lags 1 to 4.

Reading the definition, it seems that it should not include lag 0, right?

A bit confused about it.

Thank You

Jason

Jason,

Definition 1 also states that the PACF = 1 when k = 0.

Charles