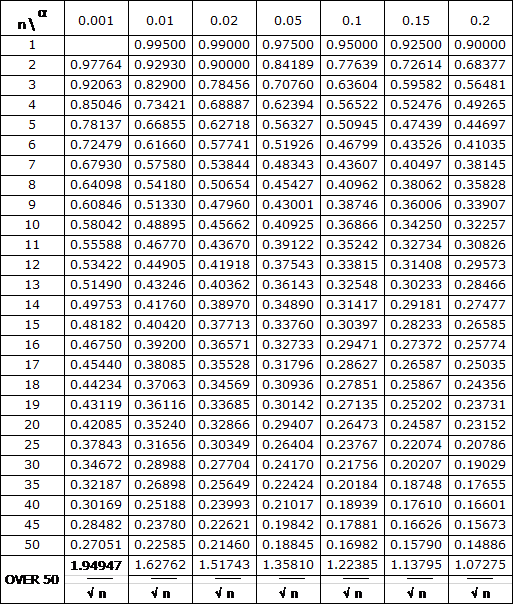

The following table gives the critical values Dn,α as described in Kolmogorov-Smirnov Test.

Download Table

Click here to download the Excel workbook with the above table.

References

Kanji, G. K. (2006) 100 statistical tests, 3rd ed. SAGE

https://uk.sagepub.com/en-gb/eur/100-statistical-tests/book229436

Lee, P. M. (2005) Statistical tables. University of York

https://www.york.ac.uk/depts/maths/tables/

Zar, J. H. (2010) Biostatistical analysis, 5th ed. Pearson

https://www.pearson.com/us/higher-education/program/Zar-Biostatistical-Analysis-5th-Edition/PGM263783.html

Hi, I want to know the K-S table value for a sample size of 32. Kindly help me.

Hello Kabita,

You need to interpolate between the values for 30 and 35. Since 32 is two-fifths of the way from 30 to 35, the value you are looking for lies roughly two-fifths of the way from the value at 30 to the value at 35. For a more exact value, you can use the approach described at

https://real-statistics.com/statistics-tables/interpolation/

Or you can use the KSCRIT worksheet function described at

https://real-statistics.com/non-parametric-tests/goodness-of-fit-tests/one-sample-kolmogorov-smirnov-test/
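For readers without the add-in, here is a minimal sketch of plain linear interpolation between two table rows. It assumes you read the two bracketing entries from the same alpha column of the table yourself. The function name is hypothetical. The interpolation webpage and KSCRIT may use a different scheme (for example, interpolating on 1/n), so results can differ slightly in the last digits.

```python
def interpolate_critical_value(n, n_lo, d_lo, n_hi, d_hi):
    """Linearly interpolate a table value for sample size n, where n_lo <= n <= n_hi.

    d_lo and d_hi are the table entries for n_lo and n_hi in the same alpha column.
    """
    if not n_lo <= n <= n_hi:
        raise ValueError("n must lie between n_lo and n_hi")
    if n_hi == n_lo:
        return d_lo
    # Fraction of the way from n_lo to n_hi (e.g. n = 32 is 2/5 of the way from 30 to 35)
    fraction = (n - n_lo) / (n_hi - n_lo)
    return d_lo + fraction * (d_hi - d_lo)
```

Usage: `interpolate_critical_value(32, 30, d30, 35, d35)`, where `d30` and `d35` are the table entries for n = 30 and n = 35 at your chosen alpha.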

Charles

0.2280411

If the data are bimodal, how should we find the p-value?

You find the p-value in the same manner as for unimodal data. Whether the KS test is appropriate depends on what you are trying to use the test for. If you are testing for normality, then either the data is only marginally bimodal or you shouldn’t even bother to test the data, since the normal distribution is not bimodal. In any case, I would use a different test for normality (usually Shapiro-Wilk), since the KS test is not the most powerful test for normality.

Charles

Hi Charles,

Thanks for sharing the information.

I have a question.

I know the value of the KS test statistic and need to determine the p-value. I am trying to apply the KSPROB function, but I couldn’t find the function in Excel. Please provide me with a solution. I will be really grateful to you.

The KSPROB function only works when you use the Real Statistics add-in to Excel. You can download the add-in for free from

https://www.real-statistics.com/free-download/real-statistics-resource-pack/

Actually, the KSDIST function will return more accurate results since it calculates the p-value directly without doing a table lookup.

If you don’t have the Real Statistics add-in installed, then you can calculate an approximate p-value by interpolating between alpha values in the KS table (which is what KSPROB does for you automatically).
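The interpolation across alpha values described above can be sketched as follows. The sketch assumes you copy one row of the table for your n: the alpha levels in decreasing order, with their critical values, which increase as alpha decreases. It then linearly interpolates the alpha at which your D would sit. The function name is hypothetical, and this is only an approximation of the idea, not necessarily the exact scheme KSPROB uses.

```python
def p_value_from_table_row(d, alphas, crits):
    """Approximate a p-value by linear interpolation across one row of a KS table.

    alphas: significance levels in decreasing order, e.g. [0.20, 0.10, 0.05, 0.01]
    crits:  matching critical values for a fixed n (strictly increasing)
    Returns None when d falls outside the row, because the table then only gives a bound.
    """
    if len(alphas) != len(crits) or len(alphas) < 2:
        raise ValueError("need matching alphas and crits with at least two entries")
    if any(b <= a for a, b in zip(crits, crits[1:])):
        raise ValueError("critical values must be strictly increasing")
    # d < crits[0] means p > alphas[0]; d > crits[-1] means p < alphas[-1]
    if d < crits[0] or d > crits[-1]:
        return None
    for i in range(len(crits) - 1):
        c_lo, c_hi = crits[i], crits[i + 1]
        if c_lo <= d <= c_hi:
            fraction = (d - c_lo) / (c_hi - c_lo)
            return alphas[i] + fraction * (alphas[i + 1] - alphas[i])
    return None
```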

Charles

Thanks for the reply.

I downloaded the Real Statistics add-in, but I am still unable to get the correct answer. Can you please elaborate on interpolating between alpha values?

Assuming that the Real Statistics add-in is installed, you should be able to use the KSINV function to find the critical value. It will do the interpolation for you. Better yet, just use the KSTEST function, as described at

https://www.real-statistics.com/tests-normality-and-symmetry/statistical-tests-normality-symmetry/lilliefors-test-normality/real-statistics-ks-test-for-normality/

Charles

This document shows a method to calculate the CV for two samples using the KS test.

http://www.stats.ox.ac.uk/~massa/Lecture%2013.pdf

Hello Lakshmi,

By CV, do you mean critical value or something else?

Charles

Yes

Lakshmi,

Thanks for sharing the reference with all of us.

For smaller samples, the critical values shown in the table at the following webpage are more accurate:

https://www.real-statistics.com/statistics-tables/two-sample-kolmogorov-smirnov-table/

For larger samples, the critical value calculated on that webpage is equivalent to the critical value shown on the webpage that you referenced [note that 1/m + 1/n = (m+n)/(mn)], except that the multiplier c(.05) = 1.3581 is a little more accurate than 1.36.

Charles

Hi Charles

As stated in much of the literature I have read, when the parameters are estimated from the data, the standard critical values for the KS test are invalid. Hence, Monte Carlo simulations are required to find the critical values. Since these values are not available in the literature, can you suggest a way to find them? I would like to test the goodness of fit of one data set to the generalized extreme value, generalized logistic and Pearson type 3 distributions.

Thanks!
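Here is a minimal Monte Carlo sketch of the approach mentioned in the question. It is shown for the normal distribution only, which is the Lilliefors situation. It simulates many samples, re-estimates the parameters from each one, computes D, and takes the (1 − alpha) quantile of the simulated D values.

For the generalized extreme value, generalized logistic or Pearson type 3 distributions you would need a fitting routine, which is not shown here. You would then simulate from the distribution fitted to your data and refit each simulated sample in place of `NormalDist`.

The function names, the number of replications and the seed are hypothetical choices.

```python
import random
import statistics
from statistics import NormalDist


def ks_statistic(sample, cdf):
    """One-sample KS statistic D = sup |F_n(x) - F0(x)| for a continuous CDF."""
    xs = sorted(sample)
    n = len(xs)
    d = 0.0
    for i, x in enumerate(xs, start=1):
        f0 = cdf(x)
        d = max(d, i / n - f0, f0 - (i - 1) / n)
    return d


def mc_critical_value_normal(n, alpha=0.05, reps=2000, seed=1):
    """Monte Carlo KS critical value when the normal mean and SD are estimated
    from the same sample."""
    rng = random.Random(seed)
    stats = []
    for _ in range(reps):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        # Re-estimate the parameters from each simulated sample, as with the real data
        fitted = NormalDist(statistics.fmean(sample), statistics.stdev(sample))
        stats.append(ks_statistic(sample, fitted.cdf))
    stats.sort()
    # Empirical (1 - alpha) quantile of the simulated D values
    k = min(reps - 1, int((1 - alpha) * reps))
    return stats[k]
```

For the normal case, simulating from N(0, 1) is enough because D with estimated mean and SD does not depend on the true location and scale. The resulting value is noticeably smaller than the usual KS critical value for the same n.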

Hi Estif,

The goodness of fit can be tested using the Anderson-Darling test for a variety of distributions. See

https://www.real-statistics.com/non-parametric-tests/goodness-of-fit-tests/anderson-darling-test/

This includes the logistic distribution.

Some references for the goodness of fit for the three distributions are as follows:

Generalized logistic distribution

https://jsdajournal.springeropen.com/articles/10.1186/s40488-020-00107-8

Generalized extreme value distribution

https://www.jstor.org/stable/2345336?seq=1

Pearson type 3 distribution

https://agupubs.onlinelibrary.wiley.com/doi/abs/10.1029/91WR02116

Charles

Hi Charles,

In the table above, should the critical value be changed for a one-tailed versus a two-tailed test? If so, how?

Thank you

Jose,

I believe that the table is for a two-tailed test. The one-tailed test is described in the following article

https://stats.stackexchange.com/questions/107668/does-it-make-sense-to-perform-a-one-tailed-kolmogorov-smirnov-test

An approximate version of the one-tailed test is obtained by doubling the alpha value and using the table on the website.

The following paper may also be useful:

https://web.iitd.ac.in/~amittal/SBL701_KS_test_Tables.pdf

This paper also gives a reference where you can get the one-tailed table of critical values (see bottom of page 139), although it is for the two-sample test.

The following paper is supposed to describe the critical values for the one-tailed test for the two-sample KS test

https://www.jstor.org/stable/2285616?seq=1

Charles

Hi Charles,

I am examining whether two sets of samples are drawn from the same population; neither is expected to have a normal distribution.

I set everything up to do the two-sample KS test, which worked fine, but then realised that since one sample has a few hundred observations (n) and the other has tens of thousands (m), my critical value Dmn is always going to be a tiny number and the null hypothesis is uniformly rejected.

Is there a more appropriate non-parametric test I should be using for my situation?

Thanks,

J

Jay,

You might consider using the Two-sample Anderson-Darling test, although it may have the same problem.

Charles

My n is 46, which is not in the table above. How can I find the critical value?

Mehak,

You need to interpolate between the 45 and 50 values in the table. See the following webpage for how to interpolate:

https://real-statistics.com/statistics-tables/interpolation/

Alternatively, you can use the KSCRIT or KSINV function provided by the Real Statistics software to find this value. See

https://real-statistics.com/tests-normality-and-symmetry/statistical-tests-normality-symmetry/kolmogorov-smirnov-test/

https://real-statistics.com/tests-normality-and-symmetry/statistical-tests-normality-symmetry/kolmogorov-smirnov-test/kolmogorov-distribution/

Charles

Dear Charles,

Regarding the K-S test, I am a little bit confused about the interpretation.

My understanding is that the D statistic, sup|F(x) - F0(x)|, should be as small as possible for the theoretical distribution to fit the sample.

I also understand that if D<Dn,a, then the theoretical distribution fits the sample.

However, in that framework, I would expect Dn,a to become smaller as a gets smaller. Could you please explain?

Kind regards,

Michel.

Hello Michel,

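The reason is that this test uses the right tail: large values of D are evidence against the null hypothesis, so a smaller alpha requires a larger critical value before you reject. See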

https://real-statistics.com/tests-normality-and-symmetry/statistical-tests-normality-symmetry/kolmogorov-smirnov-test/
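The right-tail point can be made concrete with a minimal sketch. It computes D as the largest absolute gap between the empirical CDF and a hypothesized continuous CDF F0, then rejects only when D exceeds the critical value. The function names are hypothetical, and `d_crit` must still come from the table.

```python
def ks_statistic(sample, cdf):
    """One-sample KS statistic D = sup |F_n(x) - F0(x)| for a continuous CDF."""
    xs = sorted(sample)
    n = len(xs)
    d = 0.0
    for i, x in enumerate(xs, start=1):
        f0 = cdf(x)
        # Compare F0 with the empirical CDF just after (i/n) and just before ((i-1)/n) the jump at x
        d = max(d, i / n - f0, f0 - (i - 1) / n)
    return d


def reject_null(d, d_crit):
    """Right-tailed decision: only a large D is evidence against the hypothesized distribution."""
    return d > d_crit
```

For example, the sample [0.1, 0.4, 0.7] against the uniform CDF on [0, 1] gives D = 0.3 (the gap at 0.7). A smaller alpha gives a larger `d_crit`, which makes rejection harder, not easier.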

Charles

Hi Charles. How do I calculate the p-value from the table? Let n = 7 and D = 0.234.

See Kolmogorov-Smirnov Test

KS Test for Normality

Charles

Houssein,

You need to find the critical value in the table for n = 7 that is closest to D. In your case, D is less than any of the table values for n = 7, and so the p-value is greater than .20. The table doesn’t give enough information to be more precise than this.

If you use the Real Statistics =KSDIST(.234,7) formula you will get an approximate p-value of .78.
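The text does not say exactly how KSDIST arrives at .78. One common textbook approximation (the one used in Numerical Recipes) evaluates the limiting Kolmogorov distribution at (√n + 0.12 + 0.11/√n)·D. The sketch below implements that approximation and gives about .78 for n = 7 and D = .234. Treat it as an approximation with hypothetical function names, not as the Real Statistics algorithm.

```python
import math


def kolmogorov_survival(lam):
    """Q(lam) = P(K > lam) for the limiting Kolmogorov distribution."""
    if lam <= 0:
        return 1.0
    if lam < 1.0:
        # Small lam: K(lam) = sqrt(2*pi)/lam * sum_k exp(-(2k-1)^2 pi^2 / (8 lam^2)) converges fast here
        s = sum(math.exp(-((2 * k - 1) ** 2) * math.pi ** 2 / (8 * lam * lam))
                for k in range(1, 50))
        return 1.0 - math.sqrt(2 * math.pi) / lam * s
    # Large lam: Q(lam) = 2 * sum_k (-1)^(k-1) exp(-2 k^2 lam^2)
    return 2.0 * sum((-1) ** (k - 1) * math.exp(-2 * k * k * lam * lam)
                     for k in range(1, 50))


def ks_p_value_approx(d, n):
    """Approximate one-sample KS p-value with the (sqrt(n) + 0.12 + 0.11/sqrt(n)) adjustment."""
    en = math.sqrt(n)
    p = kolmogorov_survival((en + 0.12 + 0.11 / en) * d)
    return min(1.0, max(0.0, p))
```

The same approximation gives a p-value of roughly .98 for the later question with n = 100 and D = 0.0462.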

Charles

Hi.

Thank you.

Is there a command in R to calculate the p-value from D and the sample size?

Hello Hossein,

I don’t use R and so I am not able to give you an answer.

You can do this using Real Statistics

Charles

Thank you for reply.

I would like to know how to get 0.78 without software. Is extrapolation the right approach?

You might be able to use extrapolation, but that is not the process that I used. The process I used is explained at

Kolmogorov Distribution

Charles

Hi,

Do you know how this p-value is calculated, given D?

Ed

Ed,

The table shows critical values. To get the p-values see

https://real-statistics.com/tests-normality-and-symmetry/statistical-tests-normality-symmetry/kolmogorov-smirnov-test/

https://real-statistics.com/tests-normality-and-symmetry/statistical-tests-normality-symmetry/kolmogorov-smirnov-test/kolmogorov-distribution/

Charles

Dear Charles

Your site is indeed instructional and very helpful in assessing and drawing inferences from data. My request is: can you show how a nested logit model can be done in Excel?

Best

Hello Rajesh,

I have not looked into this issue yet. I will add this to the list of possible future topics.

Charles

Dear Charles Zaiontz,

thank you for this helpful page. My question is: do you know of an effect size measure (in the sense of Cohen, 1988) for the K-S test? And ultimately, do you have a hint for calculating the optimal sample size for this test given alpha and beta?

Thank you very much,

Robert

Hello Robert,

Here are some websites that might be helpful.

Effect size: see

https://stats.stackexchange.com/questions/7771/how-do-i-calculate-the-effect-size-for-the-kolmogorov-smirnov-z-statistic

https://garstats.wordpress.com/2016/05/02/robust-effect-sizes-for-2-independent-groups/

Sample size: see

https://www.cna.org/CNA_files/PDF/DOP-2016-U-014638-Final.pdf

Charles

Charles,

thank you for your very fast reply! So that’s interesting: there is no ‘straightforward’ approach to power for the K-S test (how come?). Thank you for the links, they showed me how to approach my problem!

Hello Robert,

Glad I could help.

There doesn’t seem to be a straightforward approach to power for any of the nonparametric tests.

Charles

I’m sorry, I don’t understand how to find the critical value for n = 120. Thank you very much in advance.

Since n = 120 > 50, the critical value for alpha = .05 is 1.3581 divided by the square root of 120, i.e. 1.3581/sqrt(120) = .123977.
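The answer above hard-codes the multiplier 1.3581. As a sketch, that multiplier can be recovered as c(α) = K⁻¹(1 − α), where K is the limiting Kolmogorov distribution, solved here by bisection. The critical value is then c(α)/√n. The function names are hypothetical. The √n scaling is only a large-sample approximation, which is why the table is used for n ≤ 50.

```python
import math


def kolmogorov_cdf(x, terms=100):
    """K(x) = 1 - 2 * sum_{k>=1} (-1)^(k-1) exp(-2 k^2 x^2), the limiting Kolmogorov CDF."""
    if x <= 0:
        return 0.0
    s = sum((-1) ** (k - 1) * math.exp(-2.0 * k * k * x * x) for k in range(1, terms + 1))
    return 1.0 - 2.0 * s


def kolmogorov_inv(p, lo=0.1, hi=5.0, tol=1e-10):
    """Solve K(x) = p by bisection (K is increasing)."""
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if kolmogorov_cdf(mid) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def ks_crit_large_n(n, alpha=0.05):
    """Large-sample one-sample critical value c(alpha) / sqrt(n), with c(alpha) = K^-1(1 - alpha)."""
    return kolmogorov_inv(1 - alpha) / math.sqrt(n)
```

`ks_crit_large_n(120)` reproduces the .123977 above, and `ks_crit_large_n(219)` gives the 1.3581/sqrt(219) value used in a later answer.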

Charles

My sample size is 43, which is not in the table above. How can I find the critical value?

Wiwik,

You need to interpolate between the two values that are in the table, namely between 40 and 45. You can also use the Real Statistics KSCRIT function which does the interpolation for you. In particular, for alpha = .05

=KSCRIT(43) yields the value .202955

Charles

Thank you very much, Charles.

All the best to you.

Greetings from Indonesia.

Hi, I am trying to calculate the critical D in a two sample KS test but I am facing some difficulties.

My samples have different sizes, e.g. 60 and 80. I have seen that the formula for the critical value D-crit at the 0.05 level is:

Dm,n,α = KINV(α)*SQRT((m+n)/(m*n))

So I would assume that in my case D-crit = 1.35810 * SQRT((60+80)/(60*80))?

Based on the table though the critical value is

D-crit = 1.35810 / sqrt (n)

I do not understand whether KINV(α) should be 1.36 or 1.35810/sqrt(n).

Could you please help me? Sorry if this is too obvious.

Thank you in advance

Vasiliki

Hello Vasiliki,

Yes, KINV(.05) = 1.3581 (this is a more precise estimate than 1.36). As you have figured out, the effective sample size is n = 80*60/140 = 34.28571.

Thus, D-crit = 1.3581/sqrt(34.28571) = .231939.

You can get the same result by using the Real Statistics formula =KS2CRIT(60,80,0.05,2)
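The large-sample arithmetic in this answer can be sketched as follows. The function name is hypothetical, and the multiplier defaults to the alpha = .05 value 1.3581 quoted in the text. KS2CRIT may use exact values for small samples; this sketch covers only the large-sample formula.

```python
import math


def ks2_crit_large_sample(m, n, c_alpha=1.3581):
    """Large-sample two-sample KS critical value c(alpha) * sqrt((m + n) / (m * n)).

    Equivalent to c(alpha) / sqrt(n_eff) with effective sample size n_eff = m * n / (m + n).
    """
    n_eff = m * n / (m + n)
    return c_alpha / math.sqrt(n_eff)
```

`ks2_crit_large_sample(60, 80)` returns about .231939, matching the value above.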

Charles

Hi, where can I get the critical values for the KS test, and is this test good for a sample size of 219?

Mike,

See the response I just sent you.

Charles

Good day, I am testing my data for normality using the Kolmogorov-Smirnov test. I have managed to calculate the maximum difference D, and my sample size is 219. Please advise on how I can now get the critical values. Can I generate them using Excel, or do I have to get them from somewhere else? If so, where?

Mike,

The critical value shown in the table for alpha = .05 is 1.3581/sqrt(219) ≈ .0918. However, if you are estimating the mean and the variance from the sample, you should use the Lilliefors version of the KS test. This is equivalent to the KS test except that it uses the table at

Lilliefors Test Table.

For more information (and Excel formulas for conducting this test) see the following webpages:

Kolmogorov-Smirnov Test for Normality

Lilliefors Test for Normality

Charles

Thank you very much so helpful

Can use normality test for 3 sample?

Yes.

What is the maximum sample size for the KS test, and where can we get the critical values?

Dear Charles,

Thank you for sharing!

I have a question: it is not clear to me how the critical values are obtained for n over 50. Is it alpha/sqrt(n)? I tried this formula and compared it with what I obtained from Easyfit, but the values are totally different. Do you have any guidance?

BR

No, it is the value shown in the table divided by the square root of n. E.g. when alpha is .05, then the table value is 1.3581/sqrt(n).

Charles

Oh sorry, I was seeing the numerator values as a row in the table. Thank you for your reply!

Btissam

Hello Charles,

I use the K-S test to test differences in the distributions of electrophysiological data, so I often end up having quite a high n. Up to what n value can I consider the K-S analysis reliable? Your table goes up to n=50, but I often have between 120 and 300 values. Is the test still reliable with this sample size?

Thank you very much in advance!

Ilkin,

The more data the better. The test will still work for values of n beyond 50.

Charles

“Charles,

Yes, I agree – so if the sample K-S statistics is critical D at 0.05, so we must then accept the null hypothesis at the 0.01 level, and then at the 0.01 level, etc.

Put another way, would we reject the null hypothesis at the 0.05 level but accept it at the 0.01 level if the the sample,K-S statistic lay between these values (ie D.01 > K-S statistic > D.05)? Robert” I have the same problem.

Why is alpha = 0.2 more restrictive than alpha = 0.01?

The critical region for alpha = .01 is smaller than the critical region for alpha = .05. You could reject the null hypothesis at the .05 level, but retain it at the .01 level.
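Here is a small numerical illustration of this answer, assuming n > 50 so that the large-sample critical values c(α)/√n apply. The multiplier 1.3581 is the alpha = .05 value quoted on this page. The value 1.6276 is the corresponding alpha = .01 value of the limiting Kolmogorov distribution. The values n = 100 and D = 0.15 are hypothetical.

```python
import math

# Large-sample multipliers c(alpha) for the one-sample KS test
C_ALPHA = {0.05: 1.3581, 0.01: 1.6276}


def decisions(d, n):
    """Return {alpha: True if H0 is rejected}, using critical values c(alpha)/sqrt(n)."""
    return {alpha: d > c / math.sqrt(n) for alpha, c in C_ALPHA.items()}
```

With n = 100 the critical values are .13581 (alpha = .05) and .16276 (alpha = .01). So `decisions(0.15, 100)` rejects at the .05 level but retains the null hypothesis at the .01 level.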

Charles

Sir,

How do I estimate the mean and standard deviation from the sample?

I would like to know whether I can use the K-S test for normality on large values such as 1.4E10, 2.04E09 and 4.6E09 (estimated bacteria counts in milk, etc.) without taking logs.

Alberto,

You can probably use the KS test for normality, but in general I suggest that you use the Shapiro-Wilk test. If you do use the KS test and estimate the mean and standard deviation from the sample, then you should use the Lilliefors table.

Charles

Sir Charles, how can we identify the critical value in the Kolmogorov-Smirnov test? Please help.

Eliza,

This is covered on the website. I suggest that you type Kolmogorov into the Search bar (under the topic menu on the right side of any webpage) to see how the critical value of the KS test is used.

Charles

My observed value is Dn = 0.0462, with n = 100. How do I calculate the p-value? Thanks in advance.

You can use the KSPROB function as described on the following webpage:

https://real-statistics.com/tests-normality-and-symmetry/statistical-tests-normality-symmetry/kolmogorov-smirnov-test/

Charles

How can we “accept” the null hypothesis H0? We fail to reject it, no less, no more. NHST was not constructed to accept either H0 or Ha.

Consider that the procedure is completely unfair to Ha: in fact, we do not accept it unless the p-value is less than alpha, e.g. 0.05 or 0.01.

So there are many cases where p > 0.05 (say) even though H0 is untrue.

“Accepted?”

Bibliography

https://statistics.laerd.com/…/hypothesis-testing-3.ph…

. . . shows that the significance level is below the cut-off value we have set (e.g., either 0.05 or 0.01), we reject the null hypothesis and accept the alternative hypothesis. Alternatively, if the significance level is above the cut-off value, we fail to reject the null hypothesis and cannot accept the alternative hypothesis. You should note that you cannot accept the null hypothesis, but only find evidence against it.

The numbers that you have reported for D_n are in contrast with the values on the Wikipedia page:

https://en.wikipedia.org/wiki/Kolmogorov%E2%80%93Smirnov_test

Look at the “Two-sample Kolmogorov–Smirnov test” section of the Wikipedia page. For two samples of different sizes n and n’, if one sets n = n’, one gets a D_n that is bigger, by a factor of sqrt(2), than what you have reported for n over 50 on this page. Which one is correct?

The Wikipedia page that you reference provides the exact same values as the one-sample KS table on my site. In any case, the Wikipedia table is referring to the two-sample test, whereas the referenced table on my site is for the one-sample test. I describe the two-sample KS test on the following webpage

Two Sample Kolmogorov-Smirnov Test
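The sqrt(2) factor follows directly from the large-sample two-sample formula discussed earlier in this thread, D = c(α)·sqrt((m+n)/(mn)). Here c(α) is the same multiplier as in the one-sample case (1.3581 for α = .05). Setting both sample sizes equal gives:

```latex
D_{n,n',\alpha} = c(\alpha)\sqrt{\frac{n+n'}{n\,n'}}
\quad\xrightarrow{\;n'=n\;}\quad
c(\alpha)\sqrt{\frac{2}{n}} = \sqrt{2}\,\frac{c(\alpha)}{\sqrt{n}}
```

So the two-sample value with equal sizes is sqrt(2) times the one-sample value c(α)/√n, and the two tables are answering different questions.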

Charles

Thank you so much for your quick response. That was so helpful.

Sorry, for n = 25 and alpha = 0.05, the value is 0.264.

Charles,

In the Kolmogorov-Smirnov table, the critical value of D increases as alpha (1-P) decreases for a given N. This would imply that if a sample K-S statistic is < the critical D value at say the .05 level, then it must also be < the critical D value at the .01 level. This does not seem logical to me – what am I missing?

Robert

Robert,

We reject the null hypothesis that the data comes from a specific distribution when the sample K-S statistic > the critical value (not less than it).

Charles

Charles,

Yes, I agree – so if the sample K-S statistics is critical D at 0.05, so we must then accept the null hypothesis at the 0.01 level, and then at the 0.01 level, etc.

Put another way, would we reject the null hypothesis at the 0.05 level but accept it at the 0.01 level if the sample K-S statistic lay between these values (i.e. D.01 > K-S statistic > D.05)? Robert

Charles – sorry, “>” was missing on the first line; should read “… K-S statistic is > critical D …then accept H0 at the 0.01 level…”. Apologies, Robert

I meant “<" of course – I'm confusing myself! Robert

Robert,

If you reject a null hypothesis at the 1% level, then clearly you reject it also at the 5% level. Thus if you retain the null hypothesis at the 5% level you also retain it at the 1% level.

Charles

Charles – thank you for your patience, Robert

Hi Charles, how can I get the Kolmogorov-Smirnov table for two samples?

Adrian,

I have found a few versions of the two-sample KS table, but they are all different, and so I have been reluctant to put one of these on the website. For this reason I provided a distribution function to calculate these values, as described on the webpage

Two Sample Kolmogorov-Smirnov Test.

Charles

Thank you for your help.