Spectral Decomposition Theorem

Property 1: Let A be a symmetric n×n matrix, then A has a spectral decomposition A = CDCT where C is an n×n matrix whose columns are unit eigenvectors C1, …, Cn corresponding to the eigenvalues λ1, …, λn of A and D is the n×n diagonal matrix whose main diagonal consists of λ1, …, λn.

Proof: We prove that every symmetric n×n matrix is orthogonally diagonalizable by induction on n. The property is clearly true for n = 1. We assume that it is true for any n × n symmetric matrix and show that it is true for an n+1 × n+1 symmetric matrix A.

Let λ be any eigenvalue of A (we know by Property 1 of Symmetric Matrices that A has n+1 real eigenvalues) and let X be a unit eigenvector corresponding to λ. Thus AX = λX, and so XTAX = XTλX = λ(XTX) = λ(X ∙ X) = λ, showing that λ = XTAX.

By Property 3 of Linear Independent Vectors, we can construct a basis for the set of all n+1 × 1 column vectors which includes X, and so using Theorem 1 of Orthogonal Vectors and Matrices (Gram-Schmidt), we can construct an orthonormal basis for the set of n+1 × 1 column vectors which includes X. Now define B to be the matrix whose columns are the vectors in this basis excluding X. By Property 4 of Orthogonal Vectors and Matrices, B is an n+1 × n orthogonal matrix.

Note that (BTAB)T = BTATBT = BTAB since A is symmetric. This shows that BTAB is a symmetric n × n matrix, and so by the induction hypothesis, there is an n × n diagonal matrix E whose main diagonal consists of the eigenvalues of BTAB and an orthogonal n × n matrix P such BTAB = PEPT. Now define the n+1 × n matrix Q = BP. Note that by Property 5 of Orthogonal Vectors and Matrices Q is orthogonal.

Now define the n+1 × n+1 matrix C whose first row is X and whose remaining rows are those of Q, i.e. C = [X, Q]. We now show that C is orthogonal. First we note that since X is a unit vector, XTX = X ∙ X = 1. Since the columns of B along with X are orthogonal, XTBj= X ∙ Bj = 0 for any column Bj in B, and so XTB = 0, as well as BTX = (XTB)T = 0. Finally since Q is orthogonal, QTQ = I.

Now

This completes the proof that C is orthogonal. We next show that QTAQ = E.

Next we need to show that QTAX = XTAQ = 0. Since

since A is symmetric, it is sufficient to show that QTAX = 0.

As we saw above, BTX = 0. Also, since λ is an eigenvalue corresponding to X, AX = λX. Thus,

Now

Defining

![]() it follows that CTAC = D, and so

it follows that CTAC = D, and so

Note that at each stage of the induction, the next item on the main diagonal matrix of D is an eigenvalue of A and the next column in C is the corresponding eigenvector and that this eigenvector is orthogonal to all the other columns in C.

Observation: The spectral decomposition can also be expressed as A = .

Example

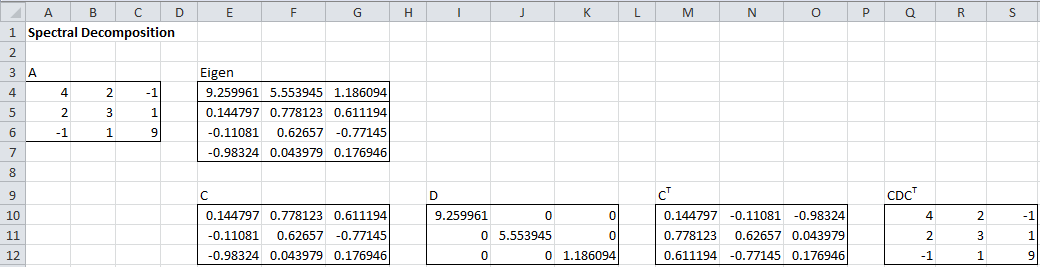

Example 1: Find the spectral decomposition of the matrix A in range A4:C6 of Figure 1.

Figure 1 – Spectral Decomposition

We calculate the eigenvalues/vectors of A (range E4:G7) using the supplemental function eVECTORS(A4:C6). Matrix C (range E10:G12) consists of the eigenvectors of A and matrix D (range I10:K12) consists of the square roots of the eigenvalues. You can check that A = CDCT using the array formula

=MMULT(E10:G12,MMULT(I10:K12,M10:O12))

Worksheet Function

Real Statistics Function: The Real Statistics Resource Pack provides the following function:

SPECTRAL(R1, iter): returns a 2n × n range whose top half is the matrix C and whose lower half is the matrix D in the spectral decomposition of CDCT of A where A is the matrix of values in range R1.

Here iter is the number of iterations in the algorithm used to compute the spectral decomposition (default 100).

Data Analysis Tool

Real Statistics Data Analysis Tool: The Spectral Factorization option of the Real Statistics Matrix Operations data analysis tool also provides the means to output the spectral decomposition of a symmetric matrix.

See Matrix Operations Tool for more details.

Observation

As we have mentioned previously, for an n × n matrix A, det(A – λI) is an nth degree polynomial of form (-1)n (x – λi) where λ1, …., λn are the eigenvalues of A. If all the eigenvalues are distinct then we have a simpler proof for Property 1 (see Property 4 of Symmetric Matrices). But as we observed in Symmetric Matrices, not all symmetric matrices have distinct eigenvalues. This motivates the following definition.

Multiplicity

Definition 1: The (algebraic) multiplicity of an eigenvalue is the number of times that eigenvalue appears in the factorization (-1)n (x – λi) of det(A – λI).

Property 2: For any eigenvalue λ of a square matrix, the number of independent eigenvectors corresponding to λ is at most the multiplicity of λ.

Proof: Suppose λ1 is an eigenvalue of the n × n matrix A and that B1, …, Bk are k independent eigenvectors corresponding to λ1. By Property 3 of Linear Independent Vectors, there are vectors Bk+1, …, Bn such that B1, …, Bn is a basis for the set of n × 1 vectors. Now let B be the n × n matrix whose columns are B1, …, Bn. Since B1, …, Bn are independent, rank(B) = n and so B is invertible. By Property 9 of Eigenvalues and Eigenvectors we know that B-1AB and A have the same eigenvalues, and in fact, they have the same characteristic polynomial.

Now consider AB. The first k columns take the form AB1, …, ABk, but since B1, …, Bk are eigenvectors corresponding to λ1, the first k columns are λB1, …, λBk. It now follows that the first k columns of B–1AB consist of the vectors of the form D1, …, Dk where Dj consists of λ1 in row j and zeros elsewhere. This means that the characteristic polynomial of B–1AB has a factor of at least (λ – λ1)k, i.e. the multiplicity of B–1AB, and therefore A, is at least k.

Property 3: For each eigenvalue λ of a symmetric matrix there are k independent (real) eigenvectors where k equals the multiplicity of λ, and there are no more than k such eigenvectors.

Proof: By Property 1, any symmetric n×n matrix A has n orthonormal eigenvectors corresponding to its n eigenvalues. By Property 2 of Orthogonal Vectors and Matrices, these eigenvectors are independent. This shows that the number of independent eigenvectors corresponding to λ is at least equal to the multiplicity of λ. But by Property 5 of Symmetric Matrices, it can’t be greater than the multiplicity of λ, and so we conclude that it is equal to the multiplicity of λ.

By Property 1 of Symmetric Matrices, all the eigenvalues are real and so we can assume that all the eigenvectors are real too.

Examples Workbook

Click here to download the Excel workbook with the examples described on this webpage.

References

Golub, G. H., Van Loan, C. F. (1996) Matrix computations. 3rd ed. Johns Hopkins University Press

Searle, S. R. (1982) Matrix algebra useful for statistics. Wiley

Perry, W. L. (1988) Elementary linear algebra. McGraw-Hill

Fasshauer, G. (2015) Linear algebra.

https://math.iit.edu/~fass/532_handouts.html

Lambers, J. (2010) Numerical linear algebra

https://www.yumpu.com/en/document/view/41276350

Hi Charles, sorry, just discovered SVD functions in Real Statistics! Please ignore point 2, though point 1 still interests me. Thank you.

Hello Branko,

Sorry for the delayed response.

When I changed the default order of the eigenvalues in the eVALUES and eigVALSym functions, I inadvertently introduced an error to the Spectral decomposition capability.

I will fix this in the next release, which I hope to issue shortly.

Thank you for identifying this problem. I appreciate your help.

Charles

Hi Charles, two questions:

1) In the example workbook matrix D when calculated using =DIAGONAL() function goes in the opposite direction of the matrix D calculated using =DIAGONAL(SUBMATRIX(…)) function. Please explain.

2) How to use =SPECTRAL() function, or any other, to get the matrices U, Σ and V^T, where X=U Σ V^T (I used V^T as a symbol for V transposed). These are the three matrices used for Singular Value Decomposition (SVD). Could this be achieved via the eigenvalues and eigenvectors? Thanks.

Hi Charles, is there any procedure to compute eigen values and vectors manually in Excel?

You can use the approach described at

https://real-statistics.com/matrices-and-iterative-procedures/goal-seeking-and-solver/

You can also use the Real Statistics approach as described at

https://real-statistics.com/linear-algebra-matrix-topics/eigenvalues-eigenvectors/

Charles

if 2 by 2 matrix is solved to find eigen value it will give one value it possible

Sorry Naeem, but I don’t understand your comment. Are you looking for one value only or are you only getting one value instead of two?

Charles

Thanks a lot sir for your help regarding my problem. Keep it up sir.

You are doing a great job sir.

With regards

Tapan

For spectral decomposition As given at Figure 1

By taking the A matrix=[4 2 -1

2 3 1

-1 1 9],

when i am trying to find Eigen value and corresponding Eigen Vector by using eVECTORS(A).

I am only getting only one Eigen value 9.259961. How to get the three Eigen value and Eigen Vectors.

You need to highlight the range E4:G7 insert the formula =eVECTORS(A4:C6) and then press Ctrl-Shift-Enter. Since eVECTORS is an array function you need to press Ctrl-Shift-Enter and not simply Enter.

Charles