We now turn our attention to modeling the case where we presume that the variables are independent. This is the situation we studied when using the chi-square distribution to test two-way contingency tables in Independence Testing.

Example

Example 1: Create an independence log-linear model for the data in Example 2 of Independence Testing.

We repeat the analysis we did for Example 1 of Saturated Model, using the same coding for the categorical variables:

t1 = 1 if therapy 1 and = -1 if therapy 2

t2 = 1 if cured and = -1 if not cured

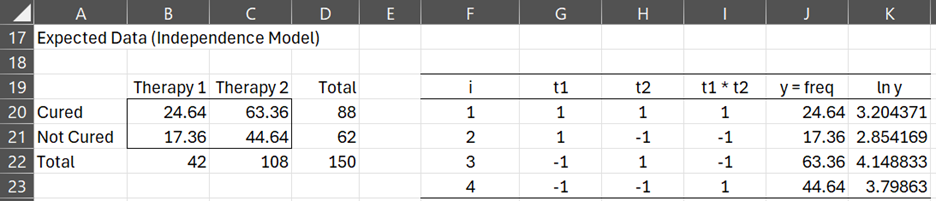

except that this time we use the contingency table with expected values (as calculated in Example 2 of Independence Testing) based on the assumption that the variables are independent (see Figure 1).

Figure 1 – Fitting the data to the independence model

Using the same approach that we employed in Example 1 of Saturated Model, we calculate the coefficients to obtain:

b0 = 3.502

b1 = -0.472

b2 = 0.175

b3 = 0

That the interaction coefficient b3 = 0 is not surprising, since the table of expected values was created under the assumption that the variables are independent. Using these coefficients, we can now express the results from the chi-square analysis via the following log-linear regression model:

$$\ln y = 3.502 - 0.472\,t_1 + 0.175\,t_2$$

From this model we see that if t1 = 1 and t2 = -1 then ln y = 3.502 – 0.472(1) + 0.175(-1) ≈ 2.854 (computed before rounding the coefficients) and so y = e^2.854 = 17.36, which is simply the expected value for Not Cured in Therapy 1.
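The calculation above can be sketched in Python. This is only an illustration: the observed counts below are assumed (they are not listed on this page and were chosen so that the expected Not Cured count for Therapy 1 is 17.36), and all variable names are hypothetical. Because the ±1 coding makes the four columns of the design matrix orthogonal, each coefficient reduces to a signed average of the logs of the expected counts.

```python
import math

# ASSUMED counts for illustration only (rows: therapy 1, therapy 2;
# columns: cured, not cured). Substitute the table from Example 2 of
# Independence Testing.
observed = [[31, 11], [57, 51]]

n = sum(sum(row) for row in observed)
row_tot = [sum(row) for row in observed]
col_tot = [sum(col) for col in zip(*observed)]

# Expected counts under independence: row total * column total / n
expected = [[row_tot[i] * col_tot[j] / n for j in range(2)] for i in range(2)]

# Coding from the text: index 0 -> +1 (therapy 1 / cured), index 1 -> -1
code = [1, -1]

# With orthogonal +/-1 columns, each coefficient is a signed mean of ln(Exp)
b0 = b1 = b2 = b3 = 0.0
for i, t1 in enumerate(code):
    for j, t2 in enumerate(code):
        ln_e = math.log(expected[i][j])
        b0 += ln_e / 4
        b1 += t1 * ln_e / 4
        b2 += t2 * ln_e / 4
        b3 += t1 * t2 * ln_e / 4

# Predicted count for therapy 1 (t1 = 1), not cured (t2 = -1)
t1, t2 = 1, -1
y = math.exp(b0 + b1 * t1 + b2 * t2 + b3 * t1 * t2)

print(f"b0={b0:.4f} b1={b1:.4f} b2={b2:.4f} b3={b3:.4f} y={y:.2f}")
```

Under the assumed counts, the unrounded intercept sits almost exactly halfway between 3.501 and 3.502, which is why either rounding can appear in hand calculations.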

Summary of Results

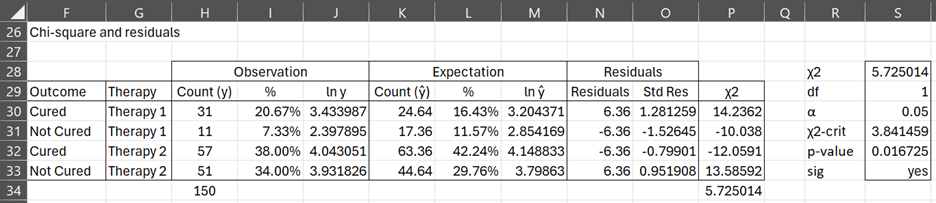

A summary of the results is given in Figure 2.

Figure 2 – Residuals and chi-square statistic

Here the residuals are simply Obs – Exp and the standardized residuals are the residuals divided by the standard error, which is $\sqrt{Exp}$. The standardized residuals

$$\frac{Obs - Exp}{\sqrt{Exp}}$$

are approximately normally distributed, and so values whose absolute value exceeds 1.96 are cause for concern, although since several tests are being conducted, a Bonferroni correction should be applied to such tests.
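As a rough sketch of the residual check, the following Python snippet divides each residual by the square root of the expected count and compares it with both the usual 1.96 cutoff and a Bonferroni-adjusted cutoff for four cells. The observed counts are assumed for illustration (they are not listed on this page), and the variable names are hypothetical.

```python
import math
from statistics import NormalDist

# ASSUMED counts for illustration only (rows: therapy 1, therapy 2;
# columns: cured, not cured).
observed = [[31, 11], [57, 51]]

n = sum(sum(row) for row in observed)
row_tot = [sum(row) for row in observed]
col_tot = [sum(col) for col in zip(*observed)]
expected = [[row_tot[i] * col_tot[j] / n for j in range(2)] for i in range(2)]

# Standardized residual: (Obs - Exp) / sqrt(Exp)
std_resid = [
    [(observed[i][j] - expected[i][j]) / math.sqrt(expected[i][j])
     for j in range(2)]
    for i in range(2)
]

alpha = 0.05
n_tests = 4  # one test per cell

# Two-sided critical values, without and with the Bonferroni correction
z_plain = NormalDist().inv_cdf(1 - alpha / 2)
z_bonf = NormalDist().inv_cdf(1 - alpha / (2 * n_tests))

# Cells whose standardized residual exceeds the corrected cutoff
flagged = [
    (i, j) for i in range(2) for j in range(2)
    if abs(std_resid[i][j]) > z_bonf
]

for row in std_resid:
    print([round(r, 3) for r in row])
print(f"cutoff={z_plain:.3f}, Bonferroni cutoff={z_bonf:.3f}, flagged={flagged}")
```

The Bonferroni cutoff is stricter than 1.96, so a cell flagged only by the uncorrected cutoff may not remain a concern after correction.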

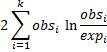

The χ² values in Figure 2 are calculated using the maximum likelihood statistic (see Definition 1 of Goodness of Fit):

$$\chi^2 = 2 \sum Obs \cdot \ln\frac{Obs}{Exp}$$

Thus the chi-square statistic is the sum of the four chi-square values, i.e. 5.725. As in Independence Testing, since the p-value for a chi-square statistic of 5.725 with 1 degree of freedom (for a 2 × 2 table) is 0.0167 < 0.05 = α, we conclude that there is a significant association between the Cure and Therapy variables, i.e. that the independence model is not a good fit for the data.
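The test above can be sketched in Python using only the standard library. The observed counts are assumed for illustration (they are not listed on this page), and the variable names are hypothetical. For 1 degree of freedom, the upper-tail chi-square probability equals erfc(sqrt(x/2)), which avoids the need for a statistics package.

```python
import math

# ASSUMED counts for illustration only (rows: therapy 1, therapy 2;
# columns: cured, not cured).
observed = [[31, 11], [57, 51]]

n = sum(sum(row) for row in observed)
row_tot = [sum(row) for row in observed]
col_tot = [sum(col) for col in zip(*observed)]
expected = [[row_tot[i] * col_tot[j] / n for j in range(2)] for i in range(2)]

# Per-cell contribution to the maximum likelihood statistic: 2 * Obs * ln(Obs / Exp)
cell_g2 = [
    [2 * observed[i][j] * math.log(observed[i][j] / expected[i][j])
     for j in range(2)]
    for i in range(2)
]
g2 = sum(sum(row) for row in cell_g2)

# Degrees of freedom for an r x c table: (r - 1)(c - 1) = 1 here
df = (len(observed) - 1) * (len(observed[0]) - 1)

# Upper-tail chi-square probability; this closed form is valid only for df = 1
p_value = math.erfc(math.sqrt(g2 / 2))

alpha = 0.05
reject_independence = p_value < alpha

print(f"G2={g2:.3f}, df={df}, p={p_value:.4f}, reject={reject_independence}")
```

Individual cell contributions can be negative; only their sum is interpreted as a chi-square statistic.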

Observations

We could have also used the ordinary Pearson’s chi-square statistic, but the maximum likelihood statistic is more commonly used.
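To compare the two statistics, the sketch below computes both Pearson's chi-square, the sum of (Obs – Exp)²/Exp over the cells, and the maximum likelihood statistic for the same table. The observed counts are assumed for illustration (they are not listed on this page), and the function and variable names are hypothetical.

```python
import math

# ASSUMED counts for illustration only (rows: therapy 1, therapy 2;
# columns: cured, not cured).
observed = [[31, 11], [57, 51]]


def expected_counts(table):
    """Expected counts under independence: row total * column total / n."""
    n = sum(sum(row) for row in table)
    row_tot = [sum(row) for row in table]
    col_tot = [sum(col) for col in zip(*table)]
    return [[r * c / n for c in col_tot] for r in row_tot]


expected = expected_counts(observed)
cells = [(observed[i][j], expected[i][j]) for i in range(2) for j in range(2)]

# Pearson's chi-square: sum of (Obs - Exp)^2 / Exp
pearson = sum((o - e) ** 2 / e for o, e in cells)

# Maximum likelihood statistic: 2 * sum of Obs * ln(Obs / Exp)
max_lik = 2 * sum(o * math.log(o / e) for o, e in cells)

# Upper-tail p-values; the erfc form is valid only for 1 degree of freedom
p_pearson = math.erfc(math.sqrt(pearson / 2))
p_max_lik = math.erfc(math.sqrt(max_lik / 2))

print(f"Pearson={pearson:.3f} (p={p_pearson:.4f})")
print(f"Max likelihood={max_lik:.3f} (p={p_max_lik:.4f})")
```

For the assumed counts the two statistics differ slightly but lead to the same conclusion at α = 0.05.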

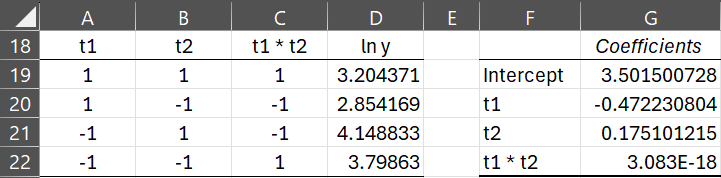

We can also use Excel’s regression data analysis tool to calculate the log-linear coefficients. Using the above coding for the categorical variables the regression data analysis tool calculates the following values of the coefficients (on the right of Figure 3).

Figure 3 – Data analysis tool to calculate the regression coefficients

These agree with the values we have seen previously. We need to ignore the rest of the output from the regression tool (not shown in Figure 3) since it is not very useful.
