The following are desirable properties for statistics that estimate population parameters:

- Unbiased: on average the estimate should be equal to the population parameter, i.e. t is an unbiased estimator of the population parameter τ provided E[t] = τ.

- Consistent: as the sample size increases, the estimate should get arbitrarily close to the population parameter (with probability approaching 1)

- Efficient: all other things being equal, we prefer an estimator with a smaller variance

Properties

Property 1: The sample mean is an unbiased estimator of the population mean

Proof: If we repeatedly take a random sample {x1, x2, …, xn} of size n from a population with mean μ, then the sample mean can be considered to be a random variable defined by

$$\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i$$

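Since each of the xi is a random variable from the same population, E[xi] = μ for each i, from which it follows by Property 1 of Expectation that:

$$E[\bar{x}] = E\left[\frac{1}{n}\sum_{i=1}^{n} x_i\right] = \frac{1}{n}\sum_{i=1}^{n} E[x_i] = \frac{1}{n}(n\mu) = \mu$$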
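The "repeatedly take a random sample" idea can be simulated directly. The sketch below assumes a normal population with μ = 10 and σ = 3 (values chosen only for illustration). It draws many samples of size 5 and averages their sample means, which should land close to μ. The function name `mean_of_sample_means` is hypothetical.

```python
import random
import statistics

def mean_of_sample_means(mu, sigma, n, reps, seed=0):
    """Average of `reps` sample means, each computed from n draws of an
    (assumed) normal population with mean mu and standard deviation sigma."""
    rng = random.Random(seed)  # seeded so the run is reproducible
    sample_means = []
    for _ in range(reps):
        sample = [rng.gauss(mu, sigma) for _ in range(n)]
        sample_means.append(statistics.fmean(sample))
    return statistics.fmean(sample_means)

estimate = mean_of_sample_means(mu=10.0, sigma=3.0, n=5, reps=20_000)
print(f"average of the sample means: {estimate:.3f} (population mean: 10.0)")
```

The simulation only approximates E[x̄]. With 20,000 repetitions the average of the sample means typically differs from μ by about 0.01.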

Property 2: If we repeatedly take a sample {x1, x2, …, xn} of size n from a population with variance σ², then

$$\operatorname{Var}(\bar{x}) = \frac{\sigma^2}{n}$$

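Proof: Since the xi are independent with variance σ², by Properties 6.3b and 6.4b of Expectation

$$\operatorname{Var}(\bar{x}) = \operatorname{Var}\left(\frac{1}{n}\sum_{i=1}^{n} x_i\right) = \frac{1}{n^2}\sum_{i=1}^{n}\operatorname{Var}(x_i) = \frac{1}{n^2}(n\sigma^2) = \frac{\sigma^2}{n}$$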
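A quick simulation can check that Var(x̄) = σ²/n. The sketch assumes independent draws from a normal population with σ = 2 and samples of size n = 8, so σ²/n = 0.5. The variance of the simulated sample means is computed with `statistics.pvariance`, which divides by the number of values, matching the definition of variance for a random variable.

```python
import random
import statistics

rng = random.Random(1)  # seeded for reproducibility
mu, sigma, n, reps = 0.0, 2.0, 8, 40_000  # illustrative, assumed values

# Draw `reps` independent samples of size n and record each sample mean.
sample_means = [
    statistics.fmean(rng.gauss(mu, sigma) for _ in range(n)) for _ in range(reps)
]

empirical_var = statistics.pvariance(sample_means)
theoretical_var = sigma**2 / n  # Property 2: sigma^2 / n
print(f"empirical Var(x̄) = {empirical_var:.4f}, σ²/n = {theoretical_var:.4f}")
```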

Small Populations

The proof of Property 2 depends on the sample members being selected independently of each other (i.e., with replacement). This may not hold when the sample is drawn without replacement and is not a small fraction of the population. In this case, it can be shown that

$$\operatorname{Var}(\bar{x}) = \frac{\sigma^2}{n}\cdot\frac{N-n}{N-1}$$

where N = the size of the population.
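For a small population, the finite-population formula Var(x̄) = (σ²/n)(N – n)/(N – 1) can be checked exactly. Here σ² is the population variance, computed with divisor N. The check enumerates every possible sample drawn without replacement. The population values below are hypothetical, and `fractions.Fraction` keeps the arithmetic exact so the comparison is an equality rather than an approximation.

```python
from fractions import Fraction
from itertools import combinations

population = [2, 4, 4, 7, 9, 10]  # hypothetical small population
N, n = len(population), 3

mu = Fraction(sum(population), N)
sigma2 = sum((Fraction(x) - mu) ** 2 for x in population) / N  # divisor N

# Without replacement, every subset of n population members is equally likely
# (combinations works on positions, so the two 4s count as distinct members).
means = [Fraction(sum(s), n) for s in combinations(population, n)]
var_mean = sum((m - mu) ** 2 for m in means) / len(means)

fpc_formula = sigma2 / n * Fraction(N - n, N - 1)
print(var_mean, fpc_formula, var_mean == fpc_formula)
```

The with-replacement value σ²/n is larger than the enumerated variance. This is why ignoring the correction overstates Var(x̄) when n is a sizable fraction of N.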

Sample Variance

If we repeatedly take a sample {x1, x2, …, xn} of size n from a population, then the variance s² of the sample (see Measures of Variability) is a random variable defined by

$$s^2 = \frac{1}{n-1}\sum_{i=1}^{n} (x_i - \bar{x})^2$$

Property 3: The sample variance is an unbiased estimator of the population variance.
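Property 3 can be confirmed exactly for a tiny population by listing every ordered sample drawn with replacement. Each of the N^n samples is equally likely, so averaging s² over all of them gives E[s²] exactly. The population [1, 2, 6] and the helper `sample_variance` are hypothetical choices for illustration.

```python
from fractions import Fraction
from itertools import product

population = [1, 2, 6]  # hypothetical population
N, n = len(population), 3

mu = Fraction(sum(population), N)
sigma2 = sum((Fraction(x) - mu) ** 2 for x in population) / N  # population variance

def sample_variance(sample):
    """s^2 with the n - 1 divisor, computed exactly."""
    m = Fraction(sum(sample), len(sample))
    return sum((Fraction(x) - m) ** 2 for x in sample) / (len(sample) - 1)

# With replacement, all N**n ordered samples are equally likely.
variances = [sample_variance(s) for s in product(population, repeat=n)]
expected_s2 = sum(variances) / len(variances)
print(expected_s2, sigma2, expected_s2 == sigma2)
```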


Biased and Unbiased Estimators

As stated above, t is an unbiased estimator of the population parameter τ provided E[t] = τ. We make the following definitions.

The bias of an estimator t of τ is Bias(t) = E[t] – τ. An estimator is unbiased if its bias is zero.

The mean squared error of the estimator is MSE(t) = E[(t – τ)²]. An estimator is efficient if it has the smallest mean squared error among the estimators under consideration.

Property 4: MSE(t) = Var(t) + (Bias(t))²

Proof: Since τ is a constant, Var(t) = Var(t – τ). By Property 2 of Expectation

Var(t) = Var(t – τ) = E[(t – τ)²] – (E[t – τ])²

= E[(t – τ)²] – (E[t] – τ)² = MSE(t) – (Bias(t))²

and so MSE(t) = Var(t) + (Bias(t))².

Thus, for an unbiased estimator MSE(t) = Var(t), and so an unbiased estimator is efficient if its variance (equivalently, its mean squared error) is smaller than that of any other unbiased estimator.
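The decomposition MSE = Var + Bias² also holds for the empirical distribution of a batch of simulated estimates. This makes it a convenient sanity check. The sketch below is built on several assumptions. It uses a normal population with σ = 2, so τ = σ² = 4. Its estimator is the divisor-n variance computed by `statistics.pvariance`. The helper name `bias_var_mse` is hypothetical.

```python
import random
import statistics

def bias_var_mse(estimates, tau):
    """Bias, variance and MSE of an estimator, using the empirical
    distribution of `estimates` in place of its true sampling distribution."""
    mean_t = statistics.fmean(estimates)
    bias = mean_t - tau
    var = statistics.pvariance(estimates)  # divisor m, i.e. E[(t - E[t])^2]
    mse = statistics.fmean((t - tau) ** 2 for t in estimates)
    return bias, var, mse

rng = random.Random(2)  # seeded for reproducibility
tau = 4.0  # assumed true population variance (sigma = 2)

# Illustrative estimator: the divisor-n variance of samples of size 5.
estimates = []
for _ in range(10_000):
    sample = [rng.gauss(0.0, 2.0) for _ in range(5)]
    estimates.append(statistics.pvariance(sample))

bias, var, mse = bias_var_mse(estimates, tau)
print(f"bias={bias:.4f} var={var:.4f} mse={mse:.4f} var+bias²={var + bias**2:.4f}")
```

The last two printed values agree up to floating-point rounding. The identity is algebraic, so it does not depend on how many repetitions are used.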

Biased estimators

Property 5: The sample standard deviation s is a biased estimator of the population standard deviation σ. In fact, s has a negative bias.

Proof: By Property 3, the sample variance s² is an unbiased estimator of the population variance σ². By Property 2 of Expectation it then follows that

Var(s) = E[s²] – (E[s])² = σ² – (E[s])²

Assuming our sample has at least two elements and σ² > 0, s is not constant, and so 0 < Var(s) = σ² – (E[s])². Thus (E[s])² < σ², and since s ≥ 0 implies E[s] ≥ 0, it follows that E[s] < σ.

We note, without proof, that

Property 6:

By Property 6, Var(s²) → 0 as n → ∞. Since s² is unbiased, Property 4 gives MSE(s²) = Var(s²), so MSE(s²) → 0 as well. It follows that s² is a consistent estimator of σ².
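The shrinking of Var(s²) with sample size can be seen in a simulation. The sketch assumes a normal population with σ = 1 and estimates Var(s²) for a few sample sizes from repeated samples. The function name `var_of_sample_variance` and the choice of 2,000 repetitions are illustrative assumptions. The printed values are estimates, not exact values of Var(s²).

```python
import random
import statistics

def var_of_sample_variance(n, reps=2_000, sigma=1.0, seed=3):
    """Empirical Var(s^2) over `reps` samples of size n drawn from an
    assumed normal population with standard deviation sigma."""
    rng = random.Random(seed)  # seeded for reproducibility
    s2_values = [
        statistics.variance([rng.gauss(0.0, sigma) for _ in range(n)])  # n - 1 divisor
        for _ in range(reps)
    ]
    return statistics.pvariance(s2_values)

for n in (5, 20, 80):
    print(n, round(var_of_sample_variance(n), 4))
```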

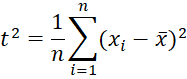

Property 7: Define t² as

$$t^2 = \frac{1}{n}\sum_{i=1}^{n} (x_i - \bar{x})^2$$

Then t² is a biased estimator of σ² with a negative bias.

Proof: Since t² = (n-1)s²/n, it follows that

$$E[t^2] = \frac{n-1}{n}E[s^2] = \frac{n-1}{n}\sigma^2 = \sigma^2 - \frac{\sigma^2}{n}$$

We see that the bias is –σ²/n.

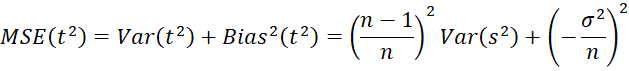

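Observations: Note, though, that t² is asymptotically unbiased since E[t²] → σ² as n → ∞. Also note that, by Property 4,

$$\operatorname{MSE}(t^2) = \operatorname{Var}(t^2) + (\operatorname{Bias}(t^2))^2 = \left(\frac{n-1}{n}\right)^2 \operatorname{Var}(s^2) + \frac{\sigma^4}{n^2}$$

Since Var(s²) → 0 by Property 6, MSE(t²) → 0 as n → ∞, and so we conclude that t² is a consistent estimator of σ².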
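The decomposition MSE(t²) = ((n – 1)/n)² Var(s²) + σ⁴/n² follows from Property 4 together with t² = (n – 1)s²/n and Bias(t²) = –σ²/n. It can be checked exactly by enumerating all ordered samples from a hypothetical population [1, 2, 6], here with samples of size 4 drawn with replacement. The helper `sum_sq_dev` is a hypothetical name.

```python
from fractions import Fraction
from itertools import product

population = [1, 2, 6]  # hypothetical population
N, n = len(population), 4

mu = Fraction(sum(population), N)
sigma2 = sum((Fraction(x) - mu) ** 2 for x in population) / N

def sum_sq_dev(sample):
    """Sum of squared deviations from the sample mean, computed exactly."""
    m = Fraction(sum(sample), len(sample))
    return sum((Fraction(x) - m) ** 2 for x in sample)

samples = list(product(population, repeat=n))  # all equally likely samples
m = len(samples)
s2 = [sum_sq_dev(s) / (n - 1) for s in samples]
t2 = [sum_sq_dev(s) / n for s in samples]

mean_s2 = sum(s2) / m
var_s2 = sum((v - mean_s2) ** 2 for v in s2) / m    # Var(s^2)
mse_t2 = sum((v - sigma2) ** 2 for v in t2) / m     # E[(t^2 - sigma^2)^2]

formula = Fraction(n - 1, n) ** 2 * var_s2 + sigma2 ** 2 / n ** 2
print(mse_t2, formula, mse_t2 == formula)
```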
